Navigating the AI Compliance Landscape: Insights from the 2025 Trends Report

The rapid advancement and widespread adoption of artificial intelligence are ushering in an era of transformative potential across various sectors. However, this technological revolution also brings forth significant compliance challenges that businesses must address proactively. The AI Trends Report 2025 from statworx provides crucial insights into the evolving AI landscape, highlighting key areas that demand a strong focus on compliance. This article delves into these compliance-related trends, drawing directly from the report to equip your organization with a comprehensive understanding of the AI compliance landscape.

The Foundational Impact of the EU AI Act

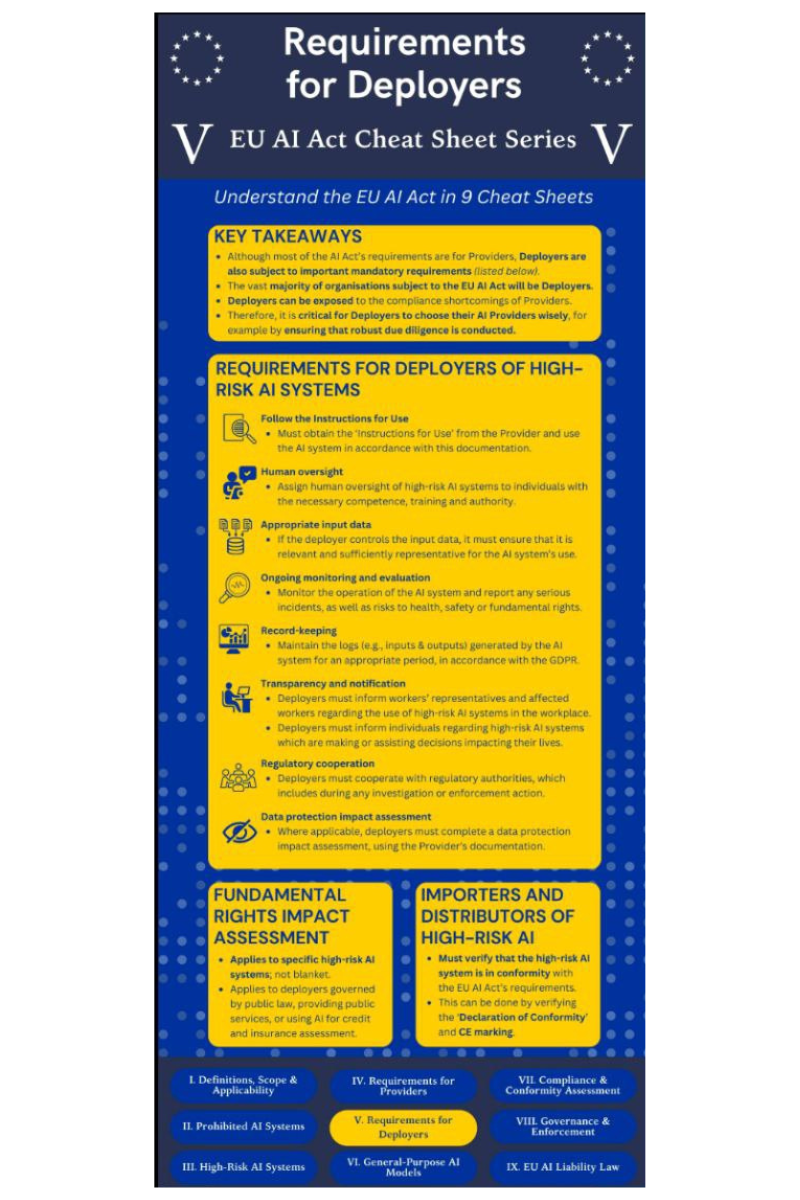

A central theme in the AI Trends Report 2025 is the EU AI Act, which entered into force in August 2024 and is being applied in stages. This landmark legislation establishes a legal framework for the use of AI technology, applying not only within Europe but also to international companies offering AI products in the EU. The AI Act takes a risk-based approach: it categorizes AI systems by their potential risk and imposes strict requirements on high-risk systems. Systems deemed to pose an “unacceptable risk,” such as social scoring or emotion recognition in the workplace and in educational institutions (with narrow exceptions for medical or safety reasons), are prohibited. Rules for general-purpose AI models such as GPT-4 apply from August 2025. By August 2026 the bulk of the remaining provisions, including most rules for high-risk AI, will be in effect, with some high-risk obligations following in 2027.

The implications for compliance are substantial. Companies must be prepared to classify their AI systems according to the Act's risk levels and meet the corresponding obligations. Limited-risk systems, for instance, carry transparency requirements, such as labeling chatbots and deepfakes. The most serious violations, such as using prohibited AI practices, can draw fines of up to €35 million or 7% of a company's total worldwide annual turnover, whichever is higher. The report notes that the AI Act has already led some major tech companies to restrict the availability of certain AI products in the EU or significantly delay their release, citing the “unpredictability of the European regulatory landscape.”
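To make the risk-based logic concrete, here is a minimal Python sketch of how a compliance team might record its AI inventory against the Act's four risk tiers and estimate worst-case exposure. The tier names follow the Act's categories; the obligation summaries are deliberately simplified, and the function and variable names are hypothetical. The fine calculation models only the Act's top penalty tier for prohibited practices: the higher of €35 million or 7% of total worldwide annual turnover, or the lower of the two for SMEs. Lower penalty tiers for other infringements are not modeled.

```python
from enum import Enum


class RiskTier(Enum):
    """The AI Act's four risk categories (simplified)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified summary of what each tier implies in practice.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the market, put into service, or used in the EU"],
    RiskTier.HIGH: ["risk management", "data governance", "human oversight",
                    "technical documentation", "conformity assessment"],
    RiskTier.LIMITED: ["transparency: label chatbots and deepfakes"],
    RiskTier.MINIMAL: ["no specific obligations"],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Look up the (simplified) obligations for a classified system."""
    return OBLIGATIONS[tier]


def max_fine_prohibited_practice(annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine for a prohibited practice:
    EUR 35 million or 7% of worldwide annual turnover,
    whichever is higher (whichever is lower for SMEs)."""
    fixed_cap = 35_000_000
    turnover_cap = annual_turnover_eur * 7 / 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    print(obligations_for(RiskTier.LIMITED))
    # EUR 2 billion turnover: 7% is EUR 140 million, which exceeds EUR 35 million
    print(max_fine_prohibited_practice(2_000_000_000))
```

The higher-of rule means the fixed €35 million amount dominates for smaller companies, while the percentage dominates once turnover exceeds €500 million.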

The Interplay with GDPR and Data Protection

The AI Trends Report 2025 emphasizes how the AI Act interacts with the General Data Protection Regulation (GDPR) and with sector-specific rules. It notes a tension between the AI Act and specific requirements in sectors such as finance, medicine, and the automotive industry, which has led experts to call for better coordination of regulations. On data processing, EU data protection authorities take the view that AI models may process personal data on the basis of “legitimate interest,” subject to a three-stage test of legitimacy, necessity, and a balancing of fundamental rights. This means companies like Meta, Google, and OpenAI can potentially rely on this legal basis to process personal data for their AI models, provided they meet these stringent conditions. The report also notes that, in principle, data should be anonymized to prevent the identification of individuals. Compliance teams must therefore navigate both the AI Act and GDPR to ensure lawful and ethical AI deployments.
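The three-stage legitimate-interest test is cumulative: a processing purpose fails as soon as any one stage fails. The minimal Python sketch below shows how a compliance team might document an assessment so that the failing stage is recorded explicitly. The class, field, and function names and the example purpose are hypothetical, and each boolean stands in for what is in reality a documented legal judgment that code cannot make.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LegitimateInterestAssessment:
    """Hypothetical record of a three-stage legitimate-interest test."""
    purpose: str
    interest_is_legitimate: bool   # Stage 1: the interest pursued is legitimate
    processing_is_necessary: bool  # Stage 2: the processing is necessary for that interest
    rights_do_not_override: bool   # Stage 3: data subjects' fundamental rights do not prevail


def first_failed_stage(a: LegitimateInterestAssessment) -> Optional[str]:
    """Return the name of the first stage that fails, or None if all three pass."""
    stages = [
        ("legitimacy", a.interest_is_legitimate),
        ("necessity", a.processing_is_necessary),
        ("balancing", a.rights_do_not_override),
    ]
    for name, passed in stages:
        if not passed:
            return name
    return None


if __name__ == "__main__":
    assessment = LegitimateInterestAssessment(
        purpose="fine-tuning a support chatbot on customer tickets",  # hypothetical
        interest_is_legitimate=True,
        processing_is_necessary=False,
        rights_do_not_override=True,
    )
    print(first_failed_stage(assessment))  # prints: necessity
```

Checking the stages in order mirrors the test's structure: there is no point balancing rights for processing that is not necessary in the first place.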

The Imperative of AI Governance

Recognizing the risks associated with AI, the AI Trends Report 2025 highlights the growing importance of robust AI governance frameworks. These frameworks comprise the processes, standards, and guardrails that steer the research, development, and application of AI systems so that they are safe and fair and protect human rights. Effective governance includes oversight mechanisms that address risks such as discrimination, privacy breaches, and misuse while still fostering innovation.

A recent study cited in the report finds that a significant share of German companies are concerned about the use of sensitive data in AI models and about data protection and security. To mitigate these risks, the report recommends establishing clear accountability for AI-related issues, for example by appointing an executive to manage these tasks centrally. Companies are urged to extend their governance beyond mere efficiency and cost reduction toward fostering innovation and transformation, building trust in the technology and securing its long-term strategic benefits. Principles such as empathy, bias control, transparency, and accountability are becoming increasingly vital for responsible AI governance. The report suggests that companies with solid AI governance in 2025 will benefit from stronger customer trust and from the economic advantages of better-controlled, more efficient AI systems, citing the financial industry as an example.

The Crucial Role of AI Literacy

Article 4 of the EU AI Act introduces a mandatory AI literacy obligation for companies working with artificial intelligence, applicable from February 2, 2025. Organizations must ensure that employees who use AI in their work have sufficient knowledge of how AI works and what impact it has, as well as the ability to weigh its opportunities and risks. Employers must take appropriate measures, typically training courses, to achieve a “sufficient level of AI literacy.” This includes a basic understanding of AI systems and their autonomy, safe usage, potential pitfalls, effective prompting, the tasks where AI helps and where it tends to err, and the risks of violating data protection, copyright, and personal rights.

The AI Trends Report 2025 reveals a concerning lack of AI skills in German companies, with a majority not providing any learning opportunities. This underscores the urgent need for clear guidelines and proactive measures to meet the training obligations under the AI Act. Companies that take this obligation seriously will benefit by minimizing legal risks, improving compliance, fostering a culture of responsibility and safety in AI usage, and ultimately enabling better and more innovative AI applications. The report suggests a modular training concept to address the diverse needs of employees, covering basic understanding for everyone, strategic considerations for managers, and technical details for IT experts.
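A modular training concept of the kind the report suggests can be expressed as a simple mapping from roles to modules, with a shared foundation for everyone. The Python sketch below is illustrative only: the role keys, module titles, and function names are hypothetical placeholders based on the three levels the report names, not a curriculum prescribed by the report or by Article 4.

```python
# Hypothetical module catalogue based on the three levels named in the report.
BASE_MODULES = [
    "How AI systems work and their limits",
    "Safe usage and effective prompting",
    "Data protection, copyright, and personal rights",
]

# Role-specific additions on top of the shared base (hypothetical roles).
ROLE_MODULES = {
    "manager": ["Strategic opportunities and risks of AI"],
    "it_expert": ["Technical details of model development and deployment"],
}


def training_plan(role: str) -> list[str]:
    """Every employee gets the base modules; some roles add specialised ones.
    Unknown roles fall back to the base modules only."""
    return BASE_MODULES + ROLE_MODULES.get(role, [])


def coverage_gaps(completed: dict[str, set[str]], roles: dict[str, str]) -> dict[str, list[str]]:
    """For each employee, list the modules in their plan not yet completed.
    Employees with no gaps are omitted from the result."""
    gaps = {}
    for employee, role in roles.items():
        done = completed.get(employee, set())
        missing = [m for m in training_plan(role) if m not in done]
        if missing:
            gaps[employee] = missing
    return gaps


if __name__ == "__main__":
    roles = {"alice": "it_expert", "bob": "staff"}  # hypothetical employees
    completed = {"alice": set(BASE_MODULES), "bob": set(BASE_MODULES)}
    print(coverage_gaps(completed, roles))
```

Keeping the base modules shared and layering role-specific content on top avoids duplicating the fundamentals, and a completion record like this can help a company show what it has done toward the literacy obligation.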

Ethical Considerations and Potential Misuse

Beyond legal compliance, the AI Trends Report 2025 touches upon the significant ethical considerations surrounding AI development and deployment. The rise of AI avatars and generative video AI, for example, presents unprecedented creative possibilities but also raises serious questions about ethics, security, and regulation, including concerns about deepfakes, copyright, and misuse. Similarly, the increasing capabilities of AI models in areas like emotion recognition, while offering potential benefits, carry risks of bias against marginalized groups and misuse in sensitive areas, leading to restrictions under the AI Act. The report also notes the potential for AI models to exhibit manipulative behavior, highlighting the importance of aligning AI with human values. Compliance strategies must therefore extend beyond legal mandates to address these broader ethical implications and potential risks of misuse.

Conclusion

The AI Trends Report 2025 paints a picture of a dynamic and rapidly evolving AI landscape that presents both immense opportunities and complex compliance challenges. The EU AI Act, in conjunction with existing regulations like GDPR, forms a crucial framework that demands careful attention and proactive adaptation. Establishing robust AI governance frameworks and ensuring widespread AI literacy within organizations are not merely compliance requirements but also strategic imperatives for fostering trust, mitigating risks, and unlocking the full potential of AI responsibly. By understanding and addressing these key compliance-related trends highlighted in the report, your organization can navigate the AI revolution with confidence and ensure a future where innovation and compliance go hand in hand.