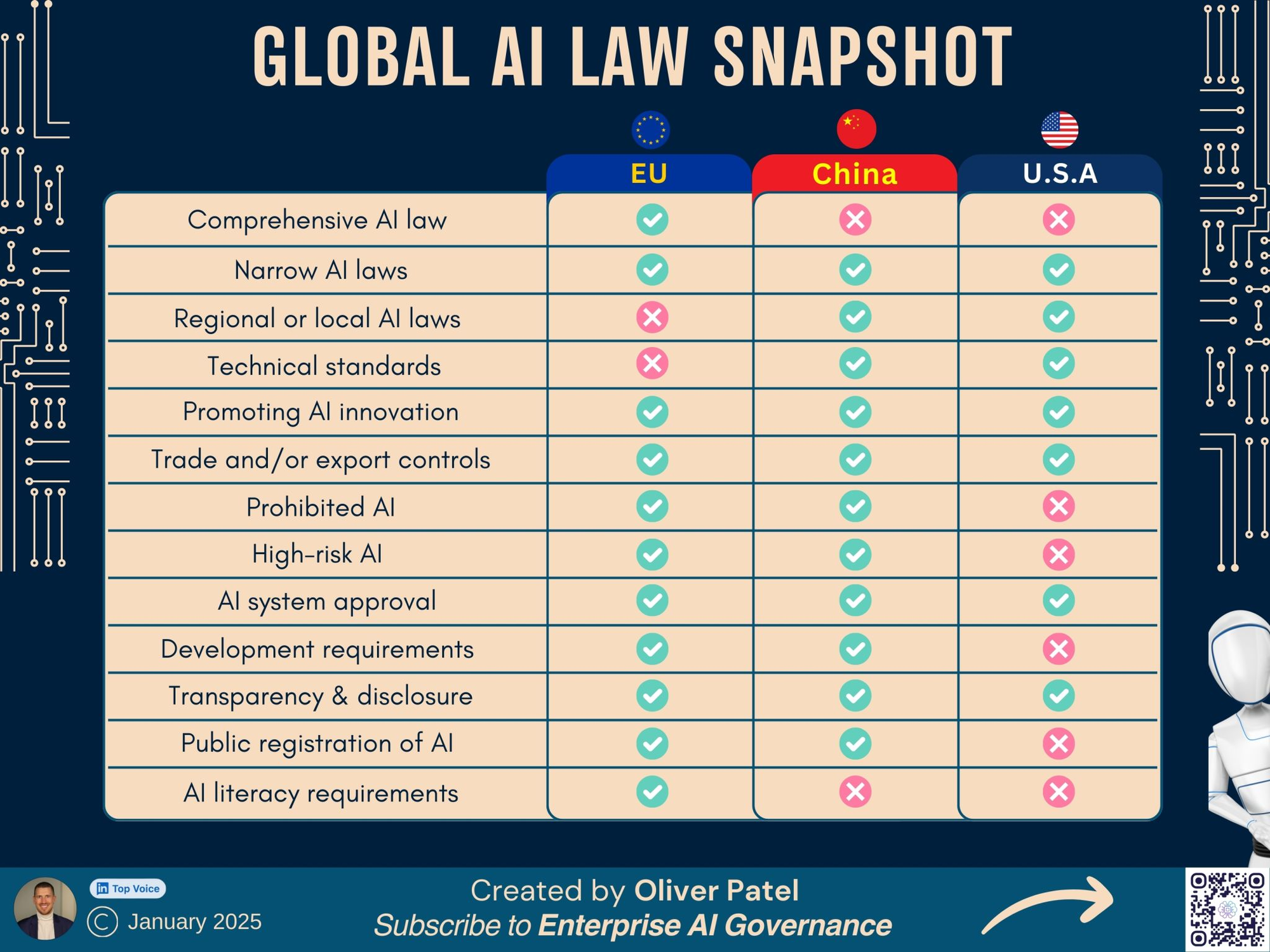

Global AI Law Snapshot: A Comparative Overview of AI Regulations in the EU, China, and the USA

As artificial intelligence (AI) continues to revolutionize industries worldwide, governments are racing to establish legal frameworks to regulate its development, deployment, and risks. The European Union (EU), China, and the United States (USA) have each taken a distinct approach to AI regulation, reflecting their economic priorities, governance philosophies, and risk mitigation strategies. This article provides a comparative snapshot of AI regulation in these three jurisdictions, highlighting key areas such as AI system approvals, prohibited AI practices, transparency requirements, and AI literacy mandates.

1. Comprehensive vs. Narrow AI Laws

The EU stands out as the only one of the three with a comprehensive AI law, the EU AI Act, which sorts AI systems into risk tiers (unacceptable and therefore prohibited, high-risk, limited-risk, and minimal-risk). China and the USA have no single overarching AI law; they rely instead on sector-specific rules and on provincial (China) or state-level (USA) measures.

- ✅ EU: Comprehensive AI law (EU AI Act)

- ❌ China: No single AI law, but strict sectoral regulations exist.

- ❌ USA: No comprehensive AI law; AI regulation is fragmented.

2. Regional and Local AI Laws

Unlike the EU, where AI laws apply uniformly across member states, China and the USA employ regional or local AI laws:

- ✅ China: Different provinces have issued AI-related policies, with Beijing and Shanghai leading in governance.

- ✅ USA: State-level AI laws (e.g., California’s AI bias laws, Illinois’ biometric AI regulations).

- ✅ EU: Unified AI Act covering all member states.

3. Technical Standards and AI Innovation Promotion

All three regions emphasize technical standards to ensure AI safety and fairness. Moreover, they actively promote AI innovation through investments, public-private partnerships, and national AI strategies.

- ✅ EU, China, USA all have technical standards and policies to foster AI growth.

4. Trade and Export Controls

AI is increasingly seen as a strategic technology, leading to export controls on AI models, semiconductors, and algorithms. China and the USA have trade restrictions, while the EU is more lenient:

- ✅ China & USA: Strict AI-related trade/export controls (e.g., semiconductor export bans).

- ❌ EU: More flexible approach, focusing on AI risk management rather than trade bans.

5. Prohibited AI Practices

The EU AI Act prohibits certain practices outright (e.g., social scoring and emotion recognition in workplaces), and China restricts specific uses through its sectoral rules. In contrast, the USA has no federal AI bans:

- ✅ EU: Bans social scoring and other practices classed as unacceptable risk.

- ✅ China: Regulates AI-generated content, deepfakes, and social scoring.

- ❌ USA: No federal prohibition on AI applications.

6. High-Risk AI Systems

High-risk AI includes applications in biometrics, law enforcement, critical infrastructure, and healthcare. Both the EU and China classify and regulate high-risk AI, while the USA has no equivalent federal classification:

- ✅ EU & China: Stringent rules for high-risk AI.

- ❌ USA: No federal framework; sector-specific rules exist.

7. AI System Approval and Development Requirements

Regulators in the EU and China mandate AI approvals and compliance frameworks, ensuring AI systems meet safety and ethical standards before deployment. The USA does not require AI system approvals, except for certain industries like healthcare and finance.

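- ✅ EU & China: Approval or compliance process required for covered AI systems.

- ❌ USA: No general approval process; sector-specific requirements apply in industries such as healthcare and finance.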

8. Transparency and Disclosure

Transparency is critical to AI accountability. Both the EU and China require disclosure of AI-generated content, while the USA has no universal transparency mandates.

- ✅ EU & China: AI-generated content must be labeled.

- ❌ USA: No nationwide transparency rule.

9. Public Registration of AI Systems

China and the EU enforce public registration of certain AI systems, while the USA does not require AI models to be registered.

- ✅ EU & China: Certain AI systems must be registered for accountability.

- ❌ USA: No public registration requirement.

10. AI Literacy Requirements

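China mandates AI literacy programs, ensuring citizens and professionals understand AI ethics, risks, and applications. The EU AI Act also contains an AI literacy obligation (Article 4), but it is narrower: providers and deployers must ensure a sufficient level of AI literacy among their staff. The USA has no federal AI literacy mandate.

- ✅ China: AI literacy programs are mandatory.

- ✅ EU: AI literacy obligation for providers and deployers under the AI Act (Article 4), limited to their staff and others operating AI systems on their behalf.

- ❌ USA: No mandatory AI literacy programs.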

Final Thoughts: Who is Leading AI Regulation?

- The EU takes the most comprehensive approach to AI regulation, with the AI Act positioned as a model for global governance.

- China has a strict but fragmented approach, focusing on state control and AI ethics.

- The USA relies on a market-driven approach, with no comprehensive federal AI law but a growing body of state-level AI regulation.

As AI continues to evolve, these regulatory frameworks will shape how businesses innovate, how governments manage AI risks, and how society interacts with AI-driven technologies.