August 2025 marks a pivotal moment in internet history as YouTube deploys AI-powered age verification across the United States, following similar implementations worldwide amid a coordinated push for digital identity verification under the banner of “child safety.”

The System Goes Live

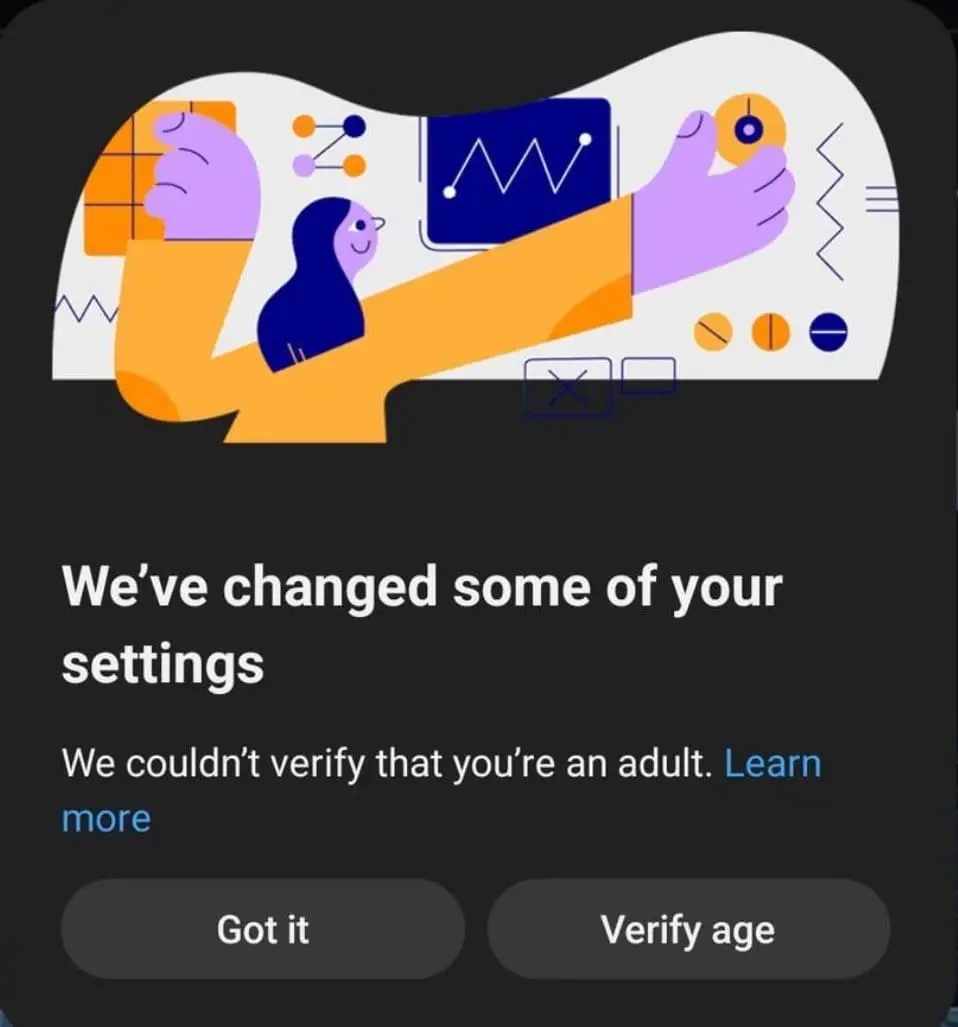

On August 13, 2025, YouTube began rolling out its artificial intelligence-powered age verification system to U.S. users, representing what may be the most significant change to internet access since the platform’s inception. The system uses machine learning to estimate users’ ages based on their viewing habits, account longevity, and platform activity, regardless of the birthday entered during account creation.

If YouTube’s AI determines that a user is likely under 18, it automatically applies teen safety restrictions: ads are no longer personalized, “digital wellbeing” tools such as bedtime reminders are turned on, and age-restricted content is blocked. Users incorrectly flagged as minors must upload a government ID, provide credit card information, or submit to a facial recognition scan to prove their age.

What makes this particularly concerning is the scope of the behavioral monitoring involved. The system analyzes patterns in user activity, such as watch history, search behavior, and engagement with content, to make age determinations, building detailed psychological profiles of users under the guise of protection.

The Legislative Landscape: KOSA and Beyond

YouTube’s move doesn’t exist in a vacuum. It’s part of a broader legislative push exemplified by the Kids Online Safety Act (KOSA), which has been reintroduced in the 119th Congress with bipartisan support. KOSA would impose a “duty of care” on platforms, requiring them to prevent minors from accessing content related to mental health issues, eating disorders, substance abuse, and other broadly defined “harms.”

The Senate version passed with a 91-3 vote in the previous Congress, and the bill has been reintroduced with support from Senate Majority Leader John Thune and Minority Leader Chuck Schumer. The legislation now has endorsements from over 250 organizations and tech giants including Apple, despite fierce opposition from digital rights groups.

The Electronic Frontier Foundation warns that KOSA “will not make kids safer” but will “make the internet more dangerous for anyone who relies on it to learn, connect, or speak freely.” The legislation’s vague definitions of harm and “compulsive usage” create a framework for widespread content censorship under the pretense of child protection.

The UK Template: A Privacy Nightmare Realized

The UK has already implemented what many see as the blueprint for this global trend. The Online Safety Act, which received Royal Assent in October 2023, saw its child safety duties take effect on July 25, 2025, requiring platforms to implement “highly effective age assurance” to prevent children from accessing pornography and other “harmful” content.

The results have been predictably dystopian. The UK government reports that an additional five million age checks are being performed daily, while VPN sign-ups have reportedly surged by as much as 1,400% as users seek to circumvent the surveillance system. Users are successfully bypassing photo-based age verification using images from video games like Death Stranding, highlighting both the system’s technical inadequacy and the absurdity of the entire enterprise.

Major platforms including Discord, Reddit, Bluesky, and Spotify have implemented age verification systems requiring users to upload government IDs or submit to facial recognition scans. Meanwhile, public interest platforms like Wikipedia have refused to comply and are challenging the law in court.

The Global Pattern: Australia, Texas, and Beyond

This isn’t limited to the UK and US. Australia is moving toward a nationwide ban on social media accounts for children under 16, covering platforms including YouTube, and has introduced age checks for search engines. Utah became the first U.S. state to pass legislation requiring app stores to verify users’ ages, while Texas is pursuing an expansive social media ban for minors.

The pattern is clear: under the emotionally charged banner of “protecting children,” governments worldwide are implementing comprehensive digital identity and surveillance systems that affect all users, not just minors.

The Technical and Privacy Implications

YouTube’s AI age estimation system represents a concerning evolution in surveillance technology. Rather than simply checking a stated birthday, it analyzes vast datasets of user behavior to determine not just what users watch, but how their viewing patterns correlate with age demographics. In doing so, it builds detailed profiles of users’ interests, habits, and psychological patterns.

The verification methods offered to users who are flagged incorrectly involve facial recognition scans (biometric data), government ID uploads, or credit card checks (financial information). Each of these methods creates massive databases of sensitive personal information linked to individuals’ online activities.

As one privacy expert noted, these systems create “massive honeypots of personal data” that inevitably get breached, exposing intimate details about people’s online activity. The UK’s rollout has already illustrated these risks: its verification systems have proved easy to fool, and concerns about the security of the collected data are growing.

The Censorship Infrastructure

What’s particularly troubling is how these “child safety” measures are creating infrastructure for broader content control. KOSA’s “duty of care” provisions could be interpreted to suppress LGBTQ+ content, a risk underscored by co-sponsor Senator Marsha Blackburn’s comments about “protecting minor children from the transgender in this culture.”

The legislation relies on vague, subjective definitions of harm like “compulsive usage” for which there is no accepted clinical definition or scientific consensus. This creates a framework where platforms must guess at what content might be deemed harmful and err on the side of censorship.

In the UK, platforms must make judgments about whether content is “harmful to children” and implement age checks accordingly, effectively requiring private companies to act as government censors.

Industry Capture and Economic Incentives

The push for age verification isn’t just about control; it’s also creating new markets for surveillance technology. Research on the UK’s safety tech sector estimates that UK firms account for 23% of the global safety tech workforce and that 28% of safety tech companies are based in the UK. Every check run through facial recognition or ID verification services means money flowing to third-party companies that governments actively support.

Major tech companies and organizations have lined up to support KOSA, including Apple, which specifically endorsed the legislation, saying it will have a “meaningful impact on children’s online safety.” This support from major platforms suggests they see age verification as potentially beneficial to their business models, possibly by creating barriers to entry for competitors and consolidating market control.

The False Choice

Government officials have been particularly aggressive in dismissing criticism of these measures. UK Tech Secretary Peter Kyle deployed the classic authoritarian playbook, stating, “If you want to overturn the Online Safety Act you are on the side of predators. It is as simple as that.” This rhetorical strategy deliberately frames any opposition to surveillance and censorship as support for child abuse.

This false dichotomy ignores legitimate concerns about privacy, free speech, and the effectiveness of these measures. A petition calling for repeal of the UK’s Online Safety Act has attracted over 500,000 signatures, demonstrating significant public opposition despite government claims that the law enjoys public support.

Technical Failures and Workarounds

The technical implementation of these systems reveals their fundamental flaws. Users are easily bypassing photo-based age verification using video game screenshots, highlighting how poorly implemented these facial recognition systems are. If the systems can’t distinguish between real people and video game characters, their ability to protect the sensitive biometric data they’re collecting is questionable at best.

The reported 1,400% surge in UK VPN sign-ups suggests that tech-savvy users, including the very teenagers these laws claim to protect, can easily circumvent these restrictions. Meanwhile, less technically sophisticated users are forced to submit to invasive surveillance.

The Broader Context: Digital Identity Infrastructure

These age verification systems are part of a larger push toward comprehensive digital identity infrastructure. The European Commission is launching its own digital identity system following the UK’s implementation, suggesting coordination among governments to create interoperable surveillance systems.

What’s being built under the guise of “child safety” is the technical and legal infrastructure for comprehensive monitoring and control of internet access. Once these systems are in place, expanding their scope to other forms of content control becomes trivial.

Resistance and Alternatives

Despite the coordinated push from governments and major platforms, significant resistance remains. The Wikimedia Foundation has refused to implement age verification for Wikipedia and is challenging the UK’s law in court, arguing that the regulations threaten the volunteer-contributor model that makes free knowledge possible.

Digital rights organizations including the Electronic Frontier Foundation, Fight for the Future, and the American Civil Liberties Union continue to oppose these measures, arguing that they will suppress legitimate speech while failing to protect children.

The technical workarounds—from VPN usage to bypassing facial recognition with video game images—demonstrate that determined users will find ways around these systems, making them ineffective for their stated purpose while imposing surveillance on compliant users.

The Path Forward

YouTube’s implementation of AI age verification represents a critical moment in the battle for internet freedom. What happens in the coming months—whether users accept this surveillance as normal or resist it—will determine the future of online privacy and free speech.

The trend is unmistakable: governments worldwide are using “child safety” as justification for building comprehensive digital surveillance and censorship infrastructure. The real question isn’t whether these systems will protect children (the evidence suggests they won’t), but whether they will fundamentally alter the nature of internet freedom.

As critics raise concerns about privacy and about access for users falsely flagged as underage, it’s worth remembering that the internet was built as a decentralized network, one designed to resist this kind of centralized control. The current push for age verification represents an attempt to transform the internet from a free and open platform into a controlled, surveilled, and censored medium.

The choice facing internet users today is whether to accept this transformation in the name of “safety,” or to recognize it for what it is: a coordinated effort to establish digital control systems that will inevitably expand beyond their stated purpose. YouTube’s AI age verification isn’t just about protecting children—it’s about normalizing surveillance and building the infrastructure for a controlled internet.

The future of digital freedom may well depend on how we respond to this seemingly innocuous request to “verify your age for safety.”