X's Privacy Policy Pivot: From "Free Speech Absolutism" to EU Compliance — And Why Your Biometric Data Is Going to Israel

Breaking Analysis: Platform updates terms to remove "harmful content" under EU/UK pressure while partnering with Israeli intelligence-linked verification firm

December 19, 2025 | Privacy Analysis

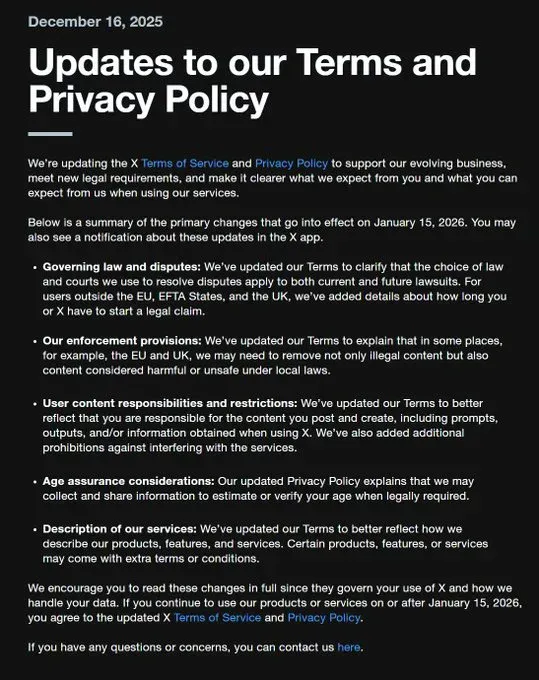

In what marks a significant shift from Elon Musk's much-touted "free speech absolutism," X (formerly Twitter) has quietly updated its Terms of Service and Privacy Policy with enforcement provisions that explicitly acknowledge compliance with EU and UK content removal demands. The changes, effective January 15, 2026, arrive as the platform faces mounting regulatory pressure from European authorities, while its continued partnership with Israel-based identity verification firm AU10TIX raises fresh concerns about user privacy.

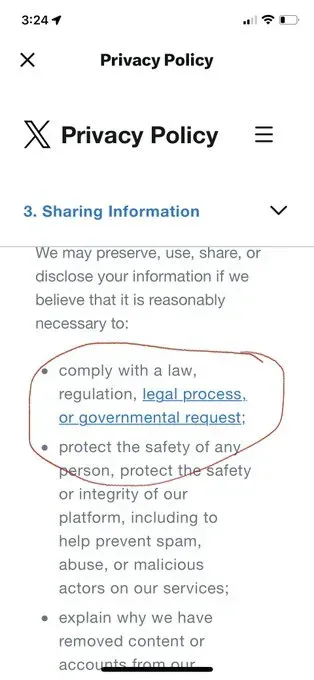

The Policy Changes: What X Is Now Admitting

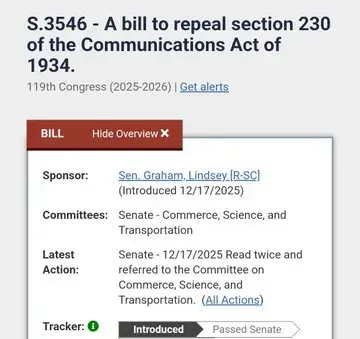

The December 2025 update to X's Terms of Service contains a critical new provision that represents a fundamental departure from the platform's previous positioning:

"Our enforcement provisions: We've updated our Terms to explain that in some places, for example, the EU and UK, we may need to remove not only illegal content but also content considered harmful or unsafe under local laws."

This language represents a significant concession to regulatory frameworks like the EU's Digital Services Act (DSA) and the UK's Online Safety Act — both of which X has publicly criticized and legally challenged. The updated terms also emphasize user liability: "You are responsible for the content you post and create, including prompts, outputs, and/or information obtained when using X."

The policy explicitly states that where content removal obligations apply, "the respective content will be made unavailable only within the required country or geographic area and will remain accessible elsewhere" — a geographic filtering approach that suggests X is implementing region-specific censorship infrastructure.
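The geographic filtering the policy describes, where content is withheld only in the countries that require it and stays visible everywhere else, can be sketched as a per-post set of withheld country codes checked against the viewer's country. This is a minimal illustration under assumed names (`Post`, `withheld_in`, `withhold`, `is_visible` are all hypothetical); X has not published how its own system works, and how a viewer's country is determined is left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """Hypothetical post record; `withheld_in` holds ISO 3166-1 alpha-2 country codes."""
    post_id: str
    text: str
    withheld_in: set[str] = field(default_factory=set)


def withhold(post: Post, country_code: str) -> None:
    """Record a removal obligation that applies in one country only (assumed model)."""
    post.withheld_in.add(country_code.upper())


def is_visible(post: Post, viewer_country: str) -> bool:
    """Hide the post only for viewers located in a country where it is withheld."""
    return viewer_country.upper() not in post.withheld_in


if __name__ == "__main__":
    post = Post("123", "example post")
    withhold(post, "de")  # e.g. a hypothetical takedown order from Germany
    for country in ("DE", "FR", "US"):
        print(country, is_visible(post, country))
```

The check keys on where the viewer is, not where the author is, so the same post can be hidden in one country and fully visible next door.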

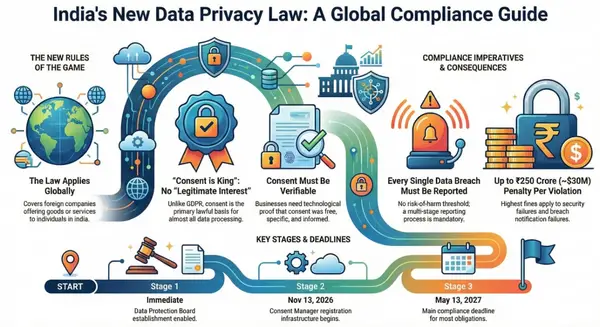

The EU's €120 Million Warning Shot

X's policy update doesn't exist in a vacuum. The European Commission has been systematically tightening the regulatory noose:

In July 2024, the Commission issued preliminary findings that X had violated the Digital Services Act through:

- Deceptive design of its blue checkmark verification system

- Lack of transparency in its advertising repository

- Failure to provide public data access to researchers

The Commission ultimately fined X €120 million for these transparency violations, making clear: "We're holding X accountable."

The implications go beyond monetary penalties. The DSA empowers the EU to demand content removal for material deemed "harmful" under local laws — a category far broader than illegal content and one that grants significant discretion to government authorities.

When "Harmful" Becomes Subjective: The UK Online Safety Act

The UK's Online Safety Act compounds these concerns by establishing a regulatory framework that effectively deputizes platforms as content police. Under this legislation, platforms must:

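- Implement systems to identify and remove illegal content and to shield children from "legal but harmful" material (equivalent duties covering adults were dropped before the Act passed)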

- Respond to government takedown demands

- Face substantial fines for non-compliance (up to 10% of global revenue)

Critics have warned this creates a two-tier internet where content availability depends on geographic location and gives governments unprecedented influence over online speech. X's new terms acknowledge this reality: the platform will comply with demands to remove content "considered harmful or unsafe under local laws."

Australia's eSafety Commissioner: A Preview of Global Enforcement

Australia's aggressive approach to content regulation offers a glimpse of what X faces globally. In April 2024, eSafety Commissioner Julie Inman Grant demanded X globally censor footage of a stabbing attack on a bishop at a Sydney church, threatening daily fines of AU$825,000 for non-compliance.

X challenged the order in court, arguing that the Australian government was attempting to impose "global censorship" that would make content unavailable to users worldwide, not just in Australia. The case highlighted the tension between national sovereignty claims over online content and the global nature of internet platforms.

X ultimately prevailed when eSafety discontinued its Federal Court case in June 2024, but the incident demonstrated how government authorities worldwide are becoming increasingly aggressive in demanding content removal, using hefty daily fines as leverage.

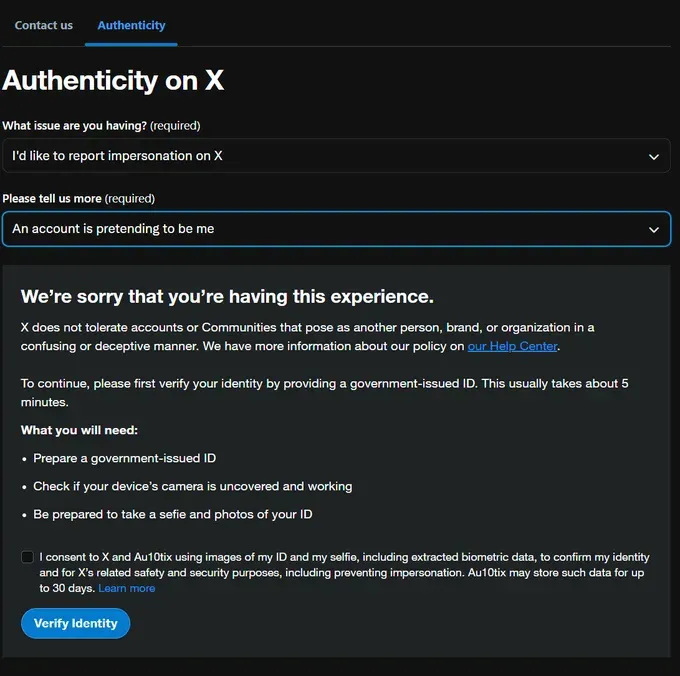

The AU10TIX Connection: Your Face, Israel's Database

While X navigates regulatory pressures for content moderation, its identity verification systems raise equally troubling privacy concerns. Since 2023, X has required users seeking identity verification to submit biometric data, including government-issued IDs and selfies, to AU10TIX, an Israel-based technology firm.

Intelligence Agency Origins

AU10TIX's corporate lineage is deeply intertwined with Israeli intelligence services:

- Founded in 2002 as the technology arm of ICTS International

- ICTS was established by former members of Israel's Shin Bet domestic intelligence agency and ex-security officials from El Al airline

- AU10TIX founder Ron Atzmon served in the notorious Unit 8200, the Israel Defense Forces' signals intelligence unit responsible for surveillance operations

- Multiple AU10TIX engineers have worked in Unit 8200, which is widely known for developing sophisticated facial recognition and surveillance technologies

Unit 8200 veterans have founded over 1,000 companies, with 80% of Israeli cyber tech firms established by former unit members. The unit has been documented surveilling Palestinians, using collected information for political persecution and identifying potential informants.

What Data Gets Shared

When users complete X's identity verification process, they consent to:

- Biometric data sharing with AU10TIX including facial scans and government ID images

- 30-day data retention by both X and AU10TIX

- Verification for "safety and security purposes" including preventing impersonation

X claims this data is used solely for identity confirmation, but the structure raises questions: Why does a company with deep ties to intelligence services need to store this data? What secondary uses might exist under Israeli law or intelligence frameworks?

The Technology Behind the Verification

AU10TIX employs sophisticated identity intelligence systems that can:

- Detect patterns across millions of profiles globally

- Identify when the same identity appears in multiple countries simultaneously

- Determine a person's age and approximate location from biometric markers

- Cross-reference against large databases to detect fraud patterns

Digital rights advocates warn that this same technology — developed from Unit 8200 surveillance capabilities — could theoretically be repurposed beyond simple verification. Jessica Buxbaum, who has investigated Israeli surveillance tech extensively, notes that AU10TIX must maintain massive databases containing personal information of millions to detect the patterns it claims to identify.
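To see why pattern detection of this kind implies a large, persistent store, consider a minimal sketch of the "same identity in multiple countries" check listed above. Everything here is hypothetical: `IdentityObservationLog`, the SHA-256 fingerprinting scheme, and the one-hour window are illustrative assumptions, not AU10TIX's actual design. The point is structural: a new attempt can only be flagged by comparing it with earlier attempts that were kept.

```python
import hashlib
from collections import defaultdict
from datetime import datetime, timedelta


class IdentityObservationLog:
    """Hypothetical log of verification attempts keyed by a document fingerprint."""

    def __init__(self, window: timedelta) -> None:
        self.window = window
        # fingerprint -> list of (country the attempt came from, timestamp)
        self._seen: dict[str, list[tuple[str, datetime]]] = defaultdict(list)

    @staticmethod
    def fingerprint(document_number: str, issuing_country: str) -> str:
        # Hash the ID so the log holds a fingerprint rather than the raw number.
        # The hash is deterministic, so records stay linkable by design.
        raw = f"{issuing_country.upper()}:{document_number}".encode()
        return hashlib.sha256(raw).hexdigest()

    def record(self, fp: str, country: str, at: datetime) -> bool:
        """Store the attempt; return True if the same fingerprint was already
        seen from a different country within the time window."""
        history = self._seen[fp]
        suspicious = any(
            prev_country != country and abs(at - prev_at) <= self.window
            for prev_country, prev_at in history
        )
        history.append((country, at))
        return suspicious


if __name__ == "__main__":
    log = IdentityObservationLog(window=timedelta(hours=1))
    fp = IdentityObservationLog.fingerprint("X1234567", "GB")
    t0 = datetime(2025, 12, 1, 12, 0)
    print(log.record(fp, "GB", t0))                          # False: first sighting
    print(log.record(fp, "BR", t0 + timedelta(minutes=20)))  # True: second country within the window
```

The check only works for as long as past observations are retained, so any system built this way is pulled toward keeping more data for longer.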

The World Economic Forum Factor

Adding another layer of controversy, X's former CEO Linda Yaccarino (who served from June 2023 to July 2025) maintained extensive ties to the World Economic Forum:

- Chairman of WEF's Taskforce on Future of Work (since January 2019)

- Member of WEF's Media, Entertainment and Culture Industry Governors Steering Committee

- Active participant in the WEF's "Value in Media" initiative

- Partnered with the Biden administration in 2021 on a COVID-19 vaccination campaign

Yaccarino's appointment sparked immediate backlash from free speech advocates who questioned whether someone with deep establishment and WEF connections would uphold Musk's stated commitment to minimal content moderation. During her tenure, X faced increasing pressure to moderate content and implement verification systems — pressures that have now manifested in updated terms explicitly acknowledging content removal obligations.

Trust & Safety: New Officers, Old Concerns

X's current trust and safety leadership adds a further dimension to these privacy concerns. According to reports circulating in cybersecurity communities, the platform's trust and safety officers include:

- Zach Schapira - reported former Israeli intelligence connections

- Michal Totchani - reported intelligence background

While X has not publicly confirmed detailed backgrounds of its trust and safety team, the pattern of Israeli intelligence connections throughout X's security infrastructure is notable: from biometric verification (AU10TIX with Unit 8200 ties) to reported personnel in content moderation roles.

The Technical Reality: Biometric Verification Is Now Mandatory

For users seeking certain platform features, biometric verification has become non-negotiable:

- Since July 1, 2023: Any creator wishing to monetize activity on X must verify identity through AU10TIX

- Current requirement: Users reporting impersonation must submit to verification

- Coming requirements: As X expands verification features, more users face the "verify or lose access" ultimatum

The verification process requires:

- Uploading government-issued photo ID

- Taking a selfie for facial recognition matching

- Consenting to X and AU10TIX storing this data for 30 days

- Agreeing to biometric data processing including extracted features
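The 30-day retention window is simple arithmetic on the upload timestamp. The sketch below shows what "deleted after 30 days" would mean for the stored uploads, and what it would not cover. Only the 30-day figure comes from the terms described above; the `VerificationRecord` type and the purge logic are hypothetical, since neither X nor AU10TIX has described its deletion process.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# The 30-day figure is from the verification terms; the rest is an assumed model.
RETENTION = timedelta(days=30)


@dataclass
class VerificationRecord:
    user_id: str
    uploaded_at: datetime  # timezone-aware UTC time of the ID and selfie upload


def deletion_deadline(record: VerificationRecord) -> datetime:
    """Moment at which the raw ID and selfie images should no longer be held."""
    return record.uploaded_at + RETENTION


def purge_expired(records: list[VerificationRecord], now: datetime) -> list[VerificationRecord]:
    """Keep only records still inside the retention window.

    This models deletion of the stored uploads only. It says nothing about
    features or templates derived from them, which would need separate tracking.
    """
    return [r for r in records if now < deletion_deadline(r)]


if __name__ == "__main__":
    upload = datetime(2025, 12, 19, 9, 0, tzinfo=timezone.utc)
    rec = VerificationRecord("user-1", upload)
    print(deletion_deadline(rec).isoformat())  # 2026-01-18T09:00:00+00:00
    print(len(purge_expired([rec], upload + timedelta(days=29))))  # 1
    print(len(purge_expired([rec], upload + timedelta(days=31))))  # 0
```

A purge like this removes the uploaded images on schedule, but any derived biometric features would survive it unless a separate process deletes them too.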

Privacy Implications: What Could Go Wrong?

Multiple risk vectors emerge from this combination of regulatory compliance and biometric data sharing:

1. Government Access to Biometric Data

Under Israeli law, intelligence agencies maintain broad authority to access data held by Israeli companies, particularly when national security is invoked. AU10TIX's database of millions of verified X users could theoretically become accessible to Israeli intelligence.

2. Cross-Border Intelligence Sharing

Israel maintains intelligence-sharing agreements with Five Eyes nations (US, UK, Canada, Australia, New Zealand). Biometric data collected by AU10TIX could flow through these channels.

3. Breach Risk

Centralized biometric databases are high-value targets. If AU10TIX were compromised, attackers would gain access to government IDs and facial biometrics for potentially millions of users.

4. Mission Creep

Data collected for "identity verification" today could be repurposed tomorrow for surveillance, tracking, or other intelligence applications — particularly given AU10TIX's intelligence agency origins.

5. Political Targeting

For activists, journalists, and dissidents from regions in conflict with Israel or its allies, submitting biometric data to a company with intelligence ties creates exposure to potential targeting.

The Broader Pattern: Platforms as State Actors

X's evolution illustrates a broader pattern where social media platforms increasingly function as extensions of state power:

Content Moderation → Government Compliance: Platforms implement content policies that align with government demands, enforced through the threat of massive fines and regulatory action.

Identity Verification → Surveillance Infrastructure: What's marketed as security and trust becomes a mechanism for collecting biometric data that can serve intelligence purposes.

Geographic Filtering → Sovereignty Enforcement: Platforms implement technology to make content selectively unavailable based on user location, enabling state-level censorship.

Data Localization → Access Guarantees: Storing data in specific jurisdictions or sharing it with locally based firms creates pathways for government access.

What This Means for Privacy-Conscious Users

For users concerned about privacy, surveillance, and data sovereignty, X's current trajectory presents difficult choices:

Immediate Actions:

- Avoid identity verification unless absolutely necessary

- Never upload government IDs to social platforms if alternatives exist

- Use privacy-focused alternatives for sensitive communications

- Assume geographic filtering: Whether others can see your content may depend on their location

- Separate identities: Use different personas for different contexts

Recognition of Reality:

The era of anonymous, borderless internet platforms is ending. Major social networks are becoming geographically filtered, government-compliant, biometrically verified systems. Users must decide whether the trade-offs are acceptable for their use cases.

For High-Risk Users:

Journalists, activists, researchers, and anyone whose work involves sensitive topics or adversarial governments should treat X's biometric verification as a hard red line. The intelligence connections aren't conspiracy theory — they're documented corporate structure and personnel backgrounds.

The Unanswered Questions

Several critical questions remain unanswered:

- Does AU10TIX share data with Israeli intelligence agencies? The company has not addressed this despite repeated inquiries from media.

- What happens to biometric data after the 30-day retention period? Is it truly deleted, or do derived features persist?

- How does X's AI training use verified user data? The privacy policy states X may use collected information to train AI models.

- What recourse exists for users in privacy-forward jurisdictions? Can EU users invoke GDPR rights to prevent biometric processing?

- Will other platforms follow this model? If X's compliance proves profitable, will Meta, Google, and others implement similar verification through intelligence-linked firms?

Conclusion: Privacy, Compliance, or Exit

X's December 2025 policy update represents the inevitable collision between Musk's "free speech absolutism" rhetoric and the reality of operating a global platform under assertive regulatory regimes. The platform is explicitly acknowledging it will remove content deemed "harmful" under local laws — not just illegal content.

Combined with mandatory biometric verification through a company founded by intelligence agency veterans, X has evolved into something fundamentally different from the "digital town square" Musk promised when acquiring the platform.

Users face three options:

- Accept the new reality of verified, surveilled, regionally filtered social media

- Push back through legal challenges, alternative platforms, and regulatory advocacy

- Exit to decentralized, privacy-preserving alternatives

What's no longer viable is the illusion that major social platforms can operate as neutral, privacy-respecting, borderless digital spaces. The question isn't whether platforms will comply with government demands and implement surveillance-enabling verification — it's how users will respond when they do.

Your data, your identity, your content — all increasingly subject to geographic jurisdictions, government compliance regimes, and intelligence-linked verification systems.

The only question is whether you consent.

Related Reading

- EU Fines X €120 Million Over Transparency Violations

- When Government Content Curation Meets Free Speech: The UK Online Safety Act vs US First Amendment Principles

- Australia's eSafety Commissioner Demands X Censor Murder Footage, Faces $825K Daily Fine Threat

Disclaimer: This article represents analysis and opinion based on publicly available information. X Corp, AU10TIX, and other entities mentioned were contacted for comment but did not respond by publication time.