The EU's Trusted Flagger System: When "Potentially Illegal" Becomes Policy

Digital Censorship or Consumer Protection? Europe's Controversial Content Moderation Framework

The European Union has implemented a controversial content moderation system that grants special status to designated organizations to flag "potentially illegal" content for removal from online platforms. Under the Digital Services Act (DSA), these "trusted flaggers" operate with expedited review privileges, raising significant questions about due process, free speech, and the dangerous elasticity of terms like "potentially illegal."

What Are Trusted Flaggers?

Trusted flaggers are organizations designated by national Digital Services Coordinators to identify and report illegal content on online platforms. Unlike regular users whose reports enter standard queuing systems, trusted flaggers receive priority treatment: their notices must be processed and decided upon "without undue delay" by platform operators.

The system creates a two-tiered content moderation framework where some reports are more equal than others. When a trusted flagger submits a notice, platforms face heightened pressure to act quickly, which can lead to content removal before any thorough investigation or due process.
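The two-tier handling described above can be pictured as a priority queue. The following is a minimal sketch, not any platform's real system: the `NoticeQueue` class, the priority values, and the post identifiers are all hypothetical and exist only for illustration.

```python
import heapq
from itertools import count

TRUSTED_FLAGGER = 0  # hypothetical priority value: reviewed first
REGULAR_USER = 1     # hypothetical priority value: standard queue


class NoticeQueue:
    """Toy model of a two-tier notice queue (illustrative only)."""

    def __init__(self):
        self._heap = []
        # Arrival counter breaks ties so notices stay first-in, first-out within a tier.
        self._arrival = count()

    def submit(self, content_id, priority):
        heapq.heappush(self._heap, (priority, next(self._arrival), content_id))

    def next_notice(self):
        priority, _, content_id = heapq.heappop(self._heap)
        return content_id, priority

    def __len__(self):
        return len(self._heap)


queue = NoticeQueue()
queue.submit("post-101", REGULAR_USER)
queue.submit("post-102", REGULAR_USER)
queue.submit("post-103", TRUSTED_FLAGGER)  # arrives last, reviewed first

review_order = [queue.next_notice()[0] for _ in range(len(queue))]
print(review_order)  # ['post-103', 'post-101', 'post-102']
```

Even in this toy version, the trusted flagger's notice jumps ahead of every earlier user report, which is the structural advantage the rest of this article examines.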

The "Potentially" Problem

The most concerning aspect of this system lies in a single word: "potentially." This qualifier transforms objective legal standards into subjective interpretation, creating a content moderation framework built on uncertainty rather than clear legal violations.

Consider the implications:

Traditional legal framework: Content is either illegal or it isn't. Courts determine illegality based on established law, evidence, and due process.

Trusted flagger framework: Content is flagged as "potentially illegal," shifting the burden of proof and creating pressure for preemptive removal to avoid regulatory consequences.

This semantic shift has profound practical effects. As detailed in our analysis of DSA-GDPR compliance, platforms face significant penalties under the DSA for failing to remove illegal content, creating strong incentives to err on the side of removal when faced with trusted flagger notices. The question becomes: How many lawful posts get deleted because they're "potentially" problematic?

Who Decides What's "Potentially Illegal"?

National Digital Services Coordinators designate trusted flaggers based on criteria including:

- Particular expertise and competence in detecting illegal content

- Independence from online platforms

- Diligent, accurate, and objective activities

- Work conducted for the public interest

But expertise in what, exactly? The DSA covers an expansive range of potentially illegal content, from clearly criminal material like child exploitation to far more subjective categories like "hate speech," "disinformation," or content that might violate intellectual property rights.

Different trusted flaggers may specialize in different areas, but all operate under the same expedited framework. An organization expert in copyright infringement receives the same priority treatment as one focused on political speech or religious content.

The Accountability Gap

Here's where the system becomes particularly problematic: trusted flaggers face minimal consequences for incorrect flags. While they can lose their status for repeated inaccurate notices, there's no immediate penalty for flagging lawful content, no compensation for users whose speech gets suppressed, and no transparent appeals process.

The asymmetry is stark:

Platforms: Face massive fines (up to 6% of global annual turnover) for failing to meet DSA obligations, including how they handle notices of illegal content

Trusted flaggers: Face status review for repeated errors, but no immediate penalties

Users: Face content removal with limited recourse or explanation

This creates a predictable dynamic: trusted flaggers can flag aggressively with minimal risk, platforms remove content defensively to avoid penalties, and users bear the cost of a system optimized for removal rather than accuracy.

Real-World Implications

The trusted flagger system doesn't operate in a vacuum. It intersects with other DSA provisions, creating cumulative effects. As we documented in our comprehensive analysis of the Internet lockdown, the combination of regulatory pressure, payment processor censorship, and activist campaigns is fundamentally altering online freedoms.

For political speech: During election periods, anything deemed "disinformation" becomes potentially illegal. Trusted flaggers with political perspectives can flag opposition viewpoints for expedited review. A July 2025 House Judiciary Committee report revealed that European regulators classified common political statements like "we need to take back our country" as "illegal hate speech" that platforms are required to censor under the DSA.

For religious content: Material offensive to some religious groups might be flagged as hate speech, even if it constitutes legitimate theological debate or criticism.

For journalistic content: Investigative reporting on sensitive topics could be flagged as potentially illegal under various pretexts, forcing removal during the most time-sensitive publication window.

For satire and commentary: Parody, sarcasm, and social criticism might be flagged as misleading or defamatory, forcing platforms to make quick judgment calls on nuanced expression.

The Chilling Effect

Perhaps most concerning is what doesn't get posted in the first place. When creators know that certain topics trigger trusted flagger attention and expedited removal, rational self-censorship follows. Why invest time creating content that might disappear within hours, with your account potentially flagged for posting "potentially illegal" material?

This chilling effect is impossible to measure but potentially more significant than actual removals. The trusted flagger system doesn't just remove content; it shapes what gets created by establishing unwritten boundaries around controversial topics.

As explored in our article on freedom of speech battles in the UK, the European approach to content moderation is creating a continent-wide system that critics warn represents the most significant threat to online freedom since the internet's creation.

Parallels to US Government Censorship During COVID

The EU's trusted flagger system isn't the first time governments have pressured platforms to remove content based on subjective determinations of what's "harmful" or "misinformation." The Biden administration's coordinated campaign to suppress COVID-19 content on social media provides a cautionary tale about government-directed censorship.

The Biden White House Pressure Campaign

A House Judiciary Committee investigation uncovered extensive evidence that the Biden administration pressured Facebook, Twitter, and other platforms to censor content about COVID-19, including:

True information flagged as "malinformation": The Virality Project—a collaboration between Stanford University, multiple federal agencies, and taxpayer-funded activist groups—reported factual social media posts to Twitter and Facebook as problematic. They flagged "true content which might promote vaccine hesitancy" for suppression, including "stories of true vaccine side effects" and information about countries banning certain vaccines.

Political speech suppression: Content questioning COVID-19 policies, vaccine mandates, or the lab leak theory was systematically suppressed. A federal judge ruled that the government had "assumed a role similar to an Orwellian 'Ministry of Truth'" during the pandemic.

Humor and satire censored: Mark Zuckerberg revealed that Biden administration officials, including those from the White House, "repeatedly pressured" Facebook for months to take down "certain COVID-19 content including humor and satire." When Facebook didn't immediately comply, officials "expressed a lot of frustration."

Algorithm manipulation: Rather than outright banning content (which would be public), the White House pressured Facebook to manipulate algorithms to suppress reach. Tucker Carlson videos received "50% demotion for seven days" despite not violating Facebook's policies. The Daily Wire, Tomi Lahren, and other conservative voices had their content artificially suppressed through algorithm tweaks that were impossible to prove without internal access.

Threats and coercion: The administration leveraged the threat of Section 230 modifications and regulatory action to coerce compliance. Rob Flaherty, director of digital strategy at the Biden White House, directly instructed Facebook which posts and accounts to throttle and demanded changes to moderation policies.

Legal Findings and Fallout

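In Murthy v. Missouri (2024), the Supreme Court ultimately reversed on standing grounds without deciding the First Amendment question, but the lower courts that examined the record found a likely First Amendment violation, and Meta's CEO later conceded the pressure was wrong: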

- A Louisiana district court judge issued a preliminary injunction finding that "the Government has used its power to silence the opposition" on topics including COVID-19 vaccines, masking, lockdowns, the lab-leak theory, election validity, and the Hunter Biden laptop story.

- The Fifth Circuit Court of Appeals upheld key parts of the ruling, finding that government communications "coerced or significantly encouraged social media platforms to moderate content" in violation of the First Amendment.

- Mark Zuckerberg later admitted: "I believe the government pressure was wrong and I regret that we were not more outspoken about it."

This episode demonstrates the dangers when governments—whether through direct pressure in the US or institutionalized systems like EU trusted flaggers—gain power to determine what speech is "harmful" or "potentially illegal."

Comparing Systems: The US Approach vs. The EU Model

The United States traditionally took a fundamentally different approach through Section 230 of the Communications Decency Act, which provides platforms broad immunity while preserving user speech rights. While imperfect, the US system places legal accountability on content creators rather than platforms, allowing for more speech rather than less.

However, as the COVID-19 censorship campaign revealed, even the US system proved vulnerable to government pressure when officials leveraged regulatory threats to coerce private companies into suppressing lawful speech.

The EU's approach departs sharply from that model: platforms risk liability and regulatory fines once they are notified of allegedly illegal content and fail to act, and designated organizations are granted the power to trigger that expedited review. This represents a philosophical divergence: the US nominally prioritizes speech with post-hoc legal consequences for truly illegal content, while the EU prioritizes preemptive content control.

The Brussels Effect: Global Speech Control

As detailed in our analysis of cross-border DSA compliance impacts, the DSA doesn't just affect Europe. Because the EU is one of the world's largest consumer markets, its rules often become de facto global standards through what's known as the "Brussels Effect."

When platforms implement systems to comply with European regulations, those systems frequently get deployed globally. American users might find their content removed not because it violates US law, but because it's potentially illegal under EU standards as interpreted by European trusted flaggers. Asian creators might face removal for content that's lawful in their jurisdictions but problematic under European norms.

Our comprehensive report on how EU/UK regulations are reshaping US business operations documents how European censorship requirements are infiltrating American digital spaces. The House Judiciary Committee found that "nonpublic documents reveal that European regulators use the DSA: (1) to target core political speech that is neither harmful nor illegal; and (2) to pressure platforms, primarily American social media companies, to change their global content moderation policies in response to European demands."

The trusted flagger system thus represents an effort to export European content standards globally through regulatory leverage over platform operations.

The Transparency Deficit

Despite DSA requirements for transparency reporting, critical information remains hidden:

- Which organizations are designated as trusted flaggers in each member state?

- What specific content categories does each organization focus on?

- What percentage of their flags result in removal?

- How many removed items were later determined to be lawful?

- What appeals process exists for affected users?

Without this transparency, the trusted flagger system operates as a partially visible content control mechanism, where the power to suppress speech remains concentrated and largely unaccountable. This mirrors the opacity of the Biden administration's censorship campaign, where internal communications revealed the extent of government pressure only after congressional investigation.
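To make the questions above concrete, here is a rough sketch of how two of those figures (the share of flags that lead to removal, and the share of removals later reversed) could be computed if the underlying records were published. The `FlagRecord` fields, the organization names, and the sample data are invented for illustration and do not reflect any real dataset or reporting format.

```python
from dataclasses import dataclass


@dataclass
class FlagRecord:
    flagger: str          # hypothetical organization name
    removed: bool         # did the platform remove the content?
    later_restored: bool  # was the removal later reversed as lawful?


def flagger_stats(records):
    """Return per-flagger removal rate and share of removals later reversed."""
    stats = {}
    for r in records:
        s = stats.setdefault(r.flagger, {"flags": 0, "removed": 0, "restored": 0})
        s["flags"] += 1
        s["removed"] += int(r.removed)
        s["restored"] += int(r.removed and r.later_restored)
    for s in stats.values():
        s["removal_rate"] = s["removed"] / s["flags"]
        # Avoid dividing by zero when a flagger has no removals at all.
        s["reversal_rate"] = s["restored"] / s["removed"] if s["removed"] else 0.0
    return stats


# Invented sample data, for illustration only.
records = [
    FlagRecord("Org A", removed=True, later_restored=False),
    FlagRecord("Org A", removed=True, later_restored=True),
    FlagRecord("Org A", removed=True, later_restored=False),
    FlagRecord("Org A", removed=False, later_restored=False),
    FlagRecord("Org B", removed=True, later_restored=False),
    FlagRecord("Org B", removed=False, later_restored=False),
]

stats = flagger_stats(records)
for name, s in stats.items():
    print(f"{name}: removal rate {s['removal_rate']:.0%}, reversal rate {s['reversal_rate']:.0%}")
```

A high removal rate paired with a high reversal rate would be one signal that a flagger's notices are being acted on faster than they are being checked.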

The Slippery Slope of "Potentially"

The evolution of content moderation shows a consistent pattern: temporary measures become permanent, narrow categories expand, and subjective standards replace objective ones. The trusted flagger system accelerates this pattern by institutionalizing "potentially illegal" as a removal standard.

What starts as flagging clearly illegal content inevitably expands:

- Today: Flagging content potentially violating existing laws

- Tomorrow: Flagging content potentially violating emerging regulatory interpretations

- Eventually: Flagging content potentially problematic under evolving social standards

The word "potentially" contains infinite elasticity, limited only by the perspectives and priorities of those doing the flagging.

We've documented this expansion already. Our coverage of Chat Control legislation shows how "child protection" measures morph into mass surveillance infrastructure. The UK's Online Safety Act implementation demonstrates how age verification requirements become comprehensive identity tracking systems.

The Path Forward: Necessary Reforms

If the trusted flagger system is to continue, several reforms would improve accountability and protect legitimate speech:

Transparency requirements: Public disclosure of all trusted flaggers, their specializations, accuracy rates, and removal statistics

User notification: Mandatory immediate notification when content is flagged by a trusted flagger, with clear appeals process

Penalty symmetry: Trusted flaggers should face consequences for flagging lawful content, not just lose their status after repeated errors

Narrow scope: Limitation of expedited treatment to clearly defined illegal categories (child exploitation, terrorist content, etc.) rather than subjective determinations

Judicial review: Establishment of rapid judicial review for content flagged as potentially illegal before removal

Cross-border protections: Mechanisms to prevent European standards from being imposed on non-European users

The Broader Digital Rights Context

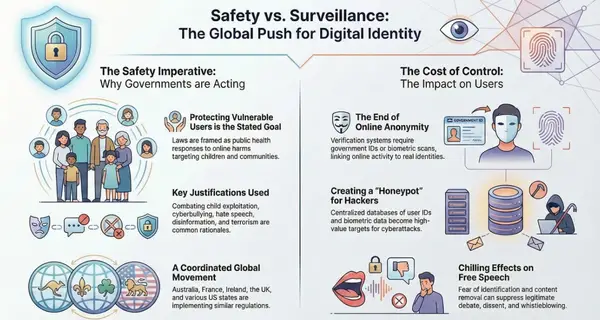

The trusted flagger system exists within a broader assault on digital rights across multiple jurisdictions. As documented in our Internet Bill of Rights framework, governments worldwide are systematically undermining digital freedoms under the guise of protecting children and combating "hate speech."

This includes:

- The UK's Online Safety Act requiring invasive age verification

- Australia's social media age ban with sophisticated surveillance requirements

- EU Chat Control proposals for mass scanning of private messages

- Coordinated efforts between governments and payment processors to defund disfavored platforms

The trusted flagger system fits perfectly into this ecosystem—a seemingly reasonable mechanism for identifying illegal content that in practice becomes a tool for suppressing lawful but controversial speech.

The Core Question

The trusted flagger system ultimately forces a fundamental question: Should content moderation prioritize aggressive removal of anything potentially problematic, or should it preserve lawful speech while targeting clearly illegal content?

The EU has chosen the former path, creating a system where "potentially illegal" becomes sufficient grounds for suppression. The US government's COVID-era censorship campaign showed that even in jurisdictions with strong constitutional speech protections, government pressure can achieve similar results through informal coercion.

The consequences of this choice extend far beyond European borders, shaping what can and cannot be said in an increasingly interconnected digital world.

When advisors and trusted organizations gain power to flag content for expedited removal based on potential illegality rather than proven violation, we create a system optimized for censorship rather than justice. The word "potentially" doesn't make this power more legitimate—it makes it more dangerous.

The COVID-19 censorship experience in the United States demonstrated that governments will pressure platforms to suppress inconvenient truths, legitimate scientific debate, and political dissent when given the opportunity. The EU's trusted flagger system institutionalizes and legitimizes this dynamic, creating permanent infrastructure for content suppression based on subjective determinations of what's "potentially" harmful.

History suggests these systems are rarely used with the restraint their creators promise. They expand in scope, grow in power, and eventually serve purposes far beyond their original justification. The trusted flagger system is no exception—it's a mechanism for controlling online discourse masquerading as consumer protection.

Related Reading

For more on digital censorship and compliance:

- Freedom of Speech and Censorship: The Growing Battle in the UK

- The Great Internet Lockdown: How Regulations Are Reshaping the Digital Landscape

- Digital Compliance Alert: UK Online Safety Act and EU DSA Cross-Border Impact

- Global Digital Compliance Crisis: EU/UK Regulations Reshaping US Operations

- The EU's Digital Services Act: A New Era of Online Regulation

- Brussels Set to Charge Meta Under Digital Services Act

- EU Chat Control Vote Postponed: A Temporary Victory for Privacy Rights

- The Internet Bill of Rights: A Framework for Digital Freedom