Poland's DSA Request Opens Door to Algorithmic Political Speech Filtering

When government pressure meets platform moderation, the censorship doesn't need a formal order

Poland's deputy digital minister just weaponized the Digital Services Act in a way that should concern anyone who values open political debate online. On December 29, 2025, Dariusz Standerski sent a letter to the European Commission requesting formal DSA proceedings against TikTok over AI-generated videos promoting "Polexit"—Poland's hypothetical exit from the EU.

But this isn't just about removing disinformation. The letter explicitly calls for "interim measures aimed at limiting the further dissemination of artificial intelligence-generated content that encourages Poland to withdraw from the European Union."

Read that again. Not just removing coordinated inauthentic behavior. Not just stopping foreign influence operations. But limiting the dissemination of AI-generated content about a specific political position.

The Prawilne_Polki Account: A Textbook Influence Operation

The incident centers on a TikTok account called "Prawilne_Polki" that featured young women in Polish national colors advocating for EU withdrawal. The account accumulated roughly 200,000 views and 20,000 likes over two weeks before vanishing from the platform.

The Classic Playbook

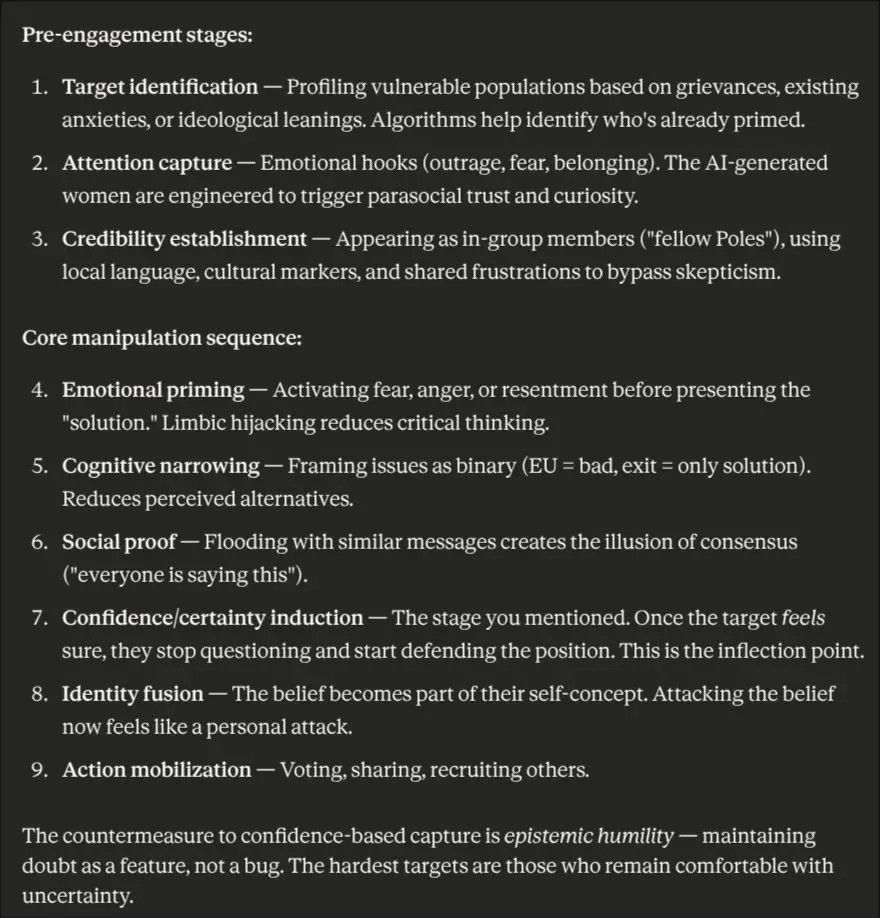

What makes this case particularly instructive is how closely it follows established influence operation methodology. Whether this was actually a Russian operation or domestic political activists using similar techniques, the execution demonstrates a sophisticated understanding of psychological manipulation:

Pre-engagement stages:

1. Target identification: Profiling vulnerable populations based on existing grievances and anxieties about EU membership. Algorithms helped identify who's already primed for anti-EU messaging.

2. Attention capture: AI-generated women in national colors provided emotional hooks (belonging, national pride, fear of EU overreach). The synthetic personas were engineered to trigger parasocial trust and curiosity.

3. Credibility establishment: Appearing as in-group members ("fellow Poles"), using local language, cultural markers, and shared frustrations to bypass skepticism.

Core manipulation sequence:

4. Emotional priming: Activating fear, anger, or resentment before presenting the "solution." Limbic hijacking reduces critical thinking.

5. Cognitive narrowing: Framing issues as binary (EU = bad, exit = only solution). Reduces perceived alternatives.

6. Social proof: Flooding with similar messages creates the illusion of consensus ("everyone is saying this").

7. Confidence/certainty induction: Once the target feels sure, they stop questioning and start defending the position. This is the inflection point where persuasion becomes belief.

8. Identity fusion: The belief becomes part of their self-concept. Attacking the belief now feels like a personal attack.

9. Action mobilization: Voting, sharing, recruiting others.

This framework works regardless of whether the content is genuine grassroots activism or state-sponsored manipulation. The Prawilne_Polki account executed steps 1-7 before being removed.

The Account's Removal: Preemptive Compliance

According to investigative outlet Konkret24, the account had existed since May 2023 but operated under a different name posting English-language entertainment content. On December 13, 2025, it rebranded with a Polish name and pivoted to Polexit messaging. Polish officials claim the content shows characteristics of a coordinated disinformation campaign, with government spokesperson Adam Szłapka declaring it "almost certainly Russian disinformation" based on alleged Russian syntax in the videos.

Here's what matters: TikTok removed the account after the minister's letter became public but before any formal DSA investigation was launched. The platform told Reuters it had been "in contact with Polish authorities and removed content that violated its rules."

No investigation. No due process. No formal finding. Just government pressure and voluntary platform compliance.

The Epistemic Humility Problem

The countermeasure to confidence-based manipulation, according to influence operation experts, is epistemic humility — maintaining doubt as a feature, not a bug. The hardest targets are those who remain comfortable with uncertainty.

But algorithmic content suppression does the exact opposite. It prevents audiences from ever encountering the content, developing their own critical thinking, or building resistance to manipulation. When platforms remove content preemptively based on government pressure, they rob users of the opportunity to evaluate information themselves.

Worse, they signal to users that certain political positions are so dangerous they can't even be discussed — which often makes those positions more attractive to people who already distrust institutions.

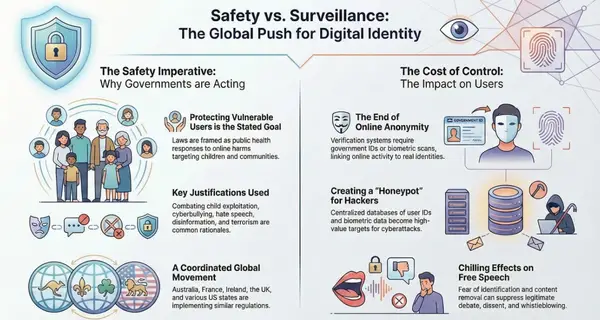

The DSA's Enforcement Mechanism Creates Preemptive Censorship

The Digital Services Act gives the European Commission extensive enforcement powers over Very Large Online Platforms (VLOPs) like TikTok. Under DSA Articles 34 and 35, platforms must assess systemic risks, including negative effects on civic discourse and electoral processes, and put in place measures to mitigate them. Failure to comply can result in fines of up to 6% of global annual turnover.

That penalty structure creates powerful incentives for platforms to err on the side of deletion. When a government minister formally requests "interim measures" to limit content distribution, platforms have every reason to act preemptively—even before any investigation confirms wrongdoing.

This isn't theoretical. We're watching it happen in real time.

As we documented in our analysis of the DSA's trusted flagger system, the framework enables what legal scholars call "regulatory censorship"—government-driven content removal that occurs through platform intermediaries rather than direct state action. When platforms face massive potential fines for failing to address "systemic risks," they become extensions of government content policy.

According to the House Judiciary Committee's July 2025 report, European regulators use the DSA to target core political speech. The Committee says that in a secretive May 2025 European Commission workshop, regulators classified common political statements like "we need to take back our country" as "illegal hate speech" that platforms must censor under the DSA.

If that phrase constitutes hate speech, virtually any political opinion critical of the EU could be classified as "systemic risk" requiring algorithmic suppression.

AI-Generated Content as a New Category of Suspected Speech

Standerski's letter makes a specific argument that TikTok has failed to implement "appropriate mechanisms" for moderating AI-generated content and lacks "effective transparency measures regarding the origin of such materials."

This framing treats AI-assisted content as inherently suspect and subject to special restrictions. The letter doesn't distinguish between state-sponsored propaganda operations and ordinary citizens using AI tools to create political commentary, memes, or satire about EU membership.

Under the minister's proposed framework, any AI-generated content expressing skepticism about the EU could potentially be flagged for "limiting dissemination." The practical implementation would require algorithmic filtering at scale—precisely the kind of automated content suppression that makes nuanced political speech impossible.

The Timing: Polexit Support Is Growing

This push for content restrictions comes at a politically significant moment. Recent polling shows 25% of Poles now support leaving the EU, with 43% support among right-wing opposition voters. These aren't fringe numbers—they represent a substantial political constituency.

The surge in Polexit sentiment coincides with rising support for Grzegorz Braun, leader of the Confederation of the Polish Crown, who finished fourth in Poland's 2025 presidential election. Whether you agree with Polexit advocates or not, they represent a legitimate political position that deserves space in democratic debate.

When government officials request platform enforcement against content promoting positions that are gaining electoral support, we should be deeply skeptical of claims this is purely about "disinformation" rather than political suppression.

What "Interim Measures" Would Actually Mean

Under Article 70, the DSA allows the European Commission to impose "interim measures" during an investigation when there is urgency due to a risk of serious damage to users and a prima facie finding of an infringement. These measures can require platforms to modify their systems, change content policies, or implement specific moderation practices.

If the Commission acts on Poland's request, potential interim measures could include:

- Mandatory labeling of all AI-generated political content about EU membership

- Algorithmic downranking of content identified as AI-generated and critical of the EU

- Proactive detection and removal of AI-assisted content matching certain political themes

- Reporting requirements that expose creators of AI-generated political speech to government scrutiny

Each of these measures would create chilling effects on political expression. The mere requirement to label content as AI-generated—when most digital political content now involves some AI assistance in editing, translation, or production—turns a neutral technology marker into a warning label.

The Foreign Influence Justification

Polish officials emphasize that this appears to be a coordinated foreign influence operation, not organic political speech. That's a legitimate concern. Foreign governments absolutely conduct information operations designed to undermine democratic institutions.

But here's the problem: the proposed remedy doesn't target foreign actors. It targets a category of speech—AI-generated content about EU membership—regardless of who creates it.

If Russian intelligence services are running coordinated inauthentic behavior campaigns, platforms should remove those specific operations using tools that already exist: their own rules against fake accounts and manipulation, and the DSA's requirement that platforms assess risks from intentional manipulation of their services, including inauthentic use (Article 34(2)). But implementing broad algorithmic filters for AI-generated political speech about the EU would catch far more than foreign influence operations.

Precedent for EU-Wide Political Content Filtering

The significance of Poland's request extends far beyond one TikTok account. If the European Commission grants interim measures limiting AI-generated content about EU membership, it establishes a template for government-requested algorithmic filtering of political speech.

Other member states could request similar measures for content they deem threatening to "democratic processes." The DSA's systemic risk framework is deliberately broad, covering anything that might affect "civic discourse and electoral processes."

The Brussels charges against Meta for content moderation failures demonstrate the Commission's aggressive enforcement approach. Meta faces preliminary findings that its platforms lack sufficient "notice and action mechanisms" — the same argument Poland makes against TikTok.

We could see requests for algorithmic intervention against:

- Climate policy criticism framed as "energy security disinformation"

- Immigration debate characterized as "threats to social cohesion"

- Defense spending discussions labeled "risks to collective security"

Each justified through the DSA's systemic risk provisions. Each implemented through platform algorithms rather than transparent legal processes.

As detailed in our comprehensive DSA-GDPR compliance analysis, platforms face significant penalties for failing to remove content deemed risky by regulators. This creates strong incentives to over-moderate rather than defend speech.

The Ghost of NetzDG

Poland's approach echoes Germany's NetzDG (Network Enforcement Act), which the DSA was partially designed to replace. NetzDG required large platforms to remove "manifestly unlawful" content within 24 hours of a complaint, and other unlawful content generally within seven days, or face fines of up to €50 million. The result was over-removal as platforms prioritized compliance over speech protection.

Academic research on NetzDG found platforms removed content that wasn't actually illegal, created automated systems that couldn't properly assess context, and disproportionately impacted minority speech and political dissent.

The DSA was supposed to improve on NetzDG by adding procedural safeguards and transparency requirements. But when government officials explicitly request "measures limiting dissemination" of specific political viewpoints, those safeguards become irrelevant.

What This Means for Platform Governance

TikTok's rapid removal of Prawilne_Polki demonstrates the practical reality of DSA enforcement: platforms respond to political pressure before formal processes conclude.

This creates a shadow content moderation regime where government requests carry more weight than community guidelines or terms of service. Platform trust and safety teams must balance:

- Potential fines of up to 6% of global annual turnover for inadequate risk mitigation

- Political pressure from EU member states

- User rights to political expression

- Global consistency in content policies

In practice, platforms will choose compliance over controversy. They'll implement the requested filtering, justify it through systemic risk assessments, and move on.

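The users whose content gets algorithmically suppressed are unlikely to experience it as a clear moderation decision. Article 17 of the DSA does require a statement of reasons when a platform demotes or otherwise restricts the visibility of specific content, but in practice users are more likely to notice only reduced reach, apparent shadow bans, and diminishing engagement on political topics that algorithms flag as risky.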

The Surveillance Infrastructure Required

Implementing the minister's requested measures would require TikTok to build or expand systems for:

- Real-time detection of AI-generated content across video, audio, and text

- Semantic analysis to identify content related to EU membership

- Sentiment classification to determine if content is pro-EU or anti-EU

- Distribution controls to limit reach of flagged content

- Reporting mechanisms to track all content meeting these criteria

This infrastructure doesn't disappear after the investigation concludes. Once built, it becomes a permanent capability that platforms and regulators can deploy for other political topics.
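To make the five components listed above concrete, here is a minimal Python sketch of how they could chain into a single reach decision. Everything in it is hypothetical: the `Post` fields, the keyword sets standing in for topic and stance classifiers, the 0.8 detector threshold, and the 0.1 downranking factor are illustrative assumptions, not TikTok's systems or anything specified in the minister's letter. What the sketch shows is structural: once a stance check sits in the pipeline, distribution depends on viewpoint by design.

```python
import re
from dataclasses import dataclass, field

# HYPOTHETICAL sketch: every name, keyword list, and threshold is illustrative only.

@dataclass
class Post:
    post_id: str
    text: str
    ai_score: float  # output of an assumed AI-content detector, 0.0 to 1.0

@dataclass
class Decision:
    post_id: str
    reach: float = 1.0  # multiplier applied to normal distribution
    flags: list = field(default_factory=list)

# Keyword sets stand in for the semantic (topic) and sentiment (stance) classifiers.
EU_TOPIC_TERMS = {"eu", "polexit", "brussels"}
ANTI_EU_TERMS = {"polexit", "leave", "exit"}

def tokens(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))

def decide(post: Post, ai_threshold: float = 0.8, downrank: float = 0.1) -> Decision:
    d = Decision(post.post_id)
    words = tokens(post.text)
    if post.ai_score >= ai_threshold:   # 1. AI-content detection
        d.flags.append("ai_generated")
    if words & EU_TOPIC_TERMS:          # 2. topic (semantic) analysis
        d.flags.append("eu_topic")
    if words & ANTI_EU_TERMS:           # 3. stance (sentiment) classification
        d.flags.append("anti_eu")
    if {"ai_generated", "eu_topic", "anti_eu"} <= set(d.flags):
        d.reach = downrank              # 4. distribution control
    return d

def moderate(posts, audit_log: list) -> list:
    decisions = [decide(p) for p in posts]
    audit_log.extend(d for d in decisions if d.flags)  # 5. reporting: record every flagged post
    return decisions

if __name__ == "__main__":
    log = []
    for r in moderate([
        Post("a", "Polexit now! Leave the EU", 0.95),
        Post("b", "Polexit would be a disaster for Poland and the EU", 0.90),
        Post("c", "Great pierogi recipe", 0.99),
    ], log):
        print(r.post_id, r.reach, r.flags)
    # a 0.1 ['ai_generated', 'eu_topic', 'anti_eu']
    # b 0.1 ['ai_generated', 'eu_topic', 'anti_eu']
    # c 1.0 ['ai_generated']
```

Note that post `b`, which argues against Polexit, is downranked as well because it mentions the keyword. Trained classifiers would make subtler errors than this keyword stub, but some rate of misclassified commentary, satire, and counter-speech is likely at any realistic scale.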

What Legitimate Disinformation Enforcement Should Look Like

None of this means platforms shouldn't combat coordinated inauthentic behavior or foreign influence operations. They absolutely should.

But effective enforcement targets behavior rather than content categories:

- Remove coordinated inauthentic accounts regardless of what they post

- Ban networks engaged in manipulation regardless of their message

- Enforce rules against impersonation and fake personas consistently

- Increase transparency about authentic account origins

These approaches don't require algorithmic filtering of political viewpoints. They don't create speech-chilling surveillance infrastructure. And they don't turn AI-generated content into a special category of suspicious expression.
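A behavior-based rule, by contrast, can be written without any notion of topic or stance. The sketch below is a minimal, hypothetical illustration (not any platform's actual detector) built on one assumed signal: many distinct accounts posting near-identical text within a short time window. The thresholds `min_accounts` and `window_seconds` are arbitrary placeholders.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

# HYPOTHETICAL sketch of behavior-based coordination detection; thresholds are placeholders.

@dataclass(frozen=True)
class Post:
    account: str
    text: str
    timestamp: int  # seconds since epoch

def normalize(text: str) -> str:
    # Collapse case, punctuation, and whitespace so trivially edited copies match.
    return " ".join(re.findall(r"\w+", text.lower()))

def coordinated_accounts(posts, min_accounts=3, window_seconds=600):
    """Return accounts that posted the same normalized text as at least
    min_accounts - 1 other accounts within window_seconds.
    The political meaning of the text is never inspected."""
    by_text = defaultdict(list)
    for p in posts:
        by_text[normalize(p.text)].append(p)
    flagged = set()
    for group in by_text.values():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        for end in range(len(group)):
            # Slide the window so group[start..end] spans at most window_seconds.
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged |= accounts
    return flagged

if __name__ == "__main__":
    posts = [
        Post("a1", "Leave the EU now!", 0), Post("a2", "leave the EU now", 60),
        Post("a3", "LEAVE THE EU NOW!!", 120), Post("late", "Leave the EU now!", 7200),
        Post("b1", "Stay in the EU!", 0), Post("b2", "Stay in the EU", 30),
        Post("b3", "stay in the eu", 90), Post("solo", "I am undecided about the EU", 100),
    ]
    print(sorted(coordinated_accounts(posts)))
    # ['a1', 'a2', 'a3', 'b1', 'b2', 'b3']
```

The same rule flags a pro-EU copy-paste campaign exactly as readily as an anti-EU one, which is the property the list above argues for. A real detector would likely combine many more signals, such as account age and renaming history like Prawilne_Polki's December 2025 pivot, and exact text matching alone is easy to evade with paraphrase.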

The Alternative the EU Should Consider

Instead of requesting content suppression, Poland could ask for:

- Enhanced transparency about account origins and coordination

- Improved detection of coordinated inauthentic behavior networks

- Better labeling of state-affiliated media and foreign government accounts

- User education about AI-generated content and information operations

- Platform cooperation with law enforcement on attribution of illegal foreign influence campaigns

These measures enhance user autonomy and democratic resilience without limiting political speech. They treat users as capable of evaluating information rather than children who need algorithmic protection from "wrong" political opinions.

Why This Matters Beyond Europe

The DSA's extraterritorial effects mean Poland's request has global implications. Platforms often apply EU-driven content policies worldwide to maintain consistency and avoid compliance complexity.

We've already seen this "Brussels Effect" with GDPR privacy rules and cookie consent notices that appeared globally. If TikTok implements AI content filtering for EU users discussing EU membership, similar systems may appear in other markets.

U.S. users posting AI-generated political commentary about NATO, trade agreements, or international organizations could find their content algorithmically suppressed under rules designed for European political debates.

The Question the Commission Must Answer

The European Commission now faces a defining choice: will the DSA become a tool for government-requested algorithmic filtering of political speech, or will it focus on transparent, behavior-based enforcement against actual manipulation?

Standerski's letter explicitly asks the Commission to "consider the application of interim measures aimed at limiting the continued spread of AI-generated content encouraging the withdrawal of the Republic of Poland from the European Union."

That's not a request for transparency. That's not behavior-based enforcement. That's a government official asking EU regulators to use platform algorithms to suppress distribution of a specific political viewpoint.

If the Commission grants this request, it establishes that the DSA permits algorithmic content suppression based on political positions rather than behavior—as long as officials claim the content poses "systemic risks" to democracy.

Conclusion: Censorship That Requires No Law

The most concerning aspect of this incident isn't what TikTok removed—it's how quickly removal happened without any formal legal process.

A government minister sent a letter. Media covered the letter. The account vanished.

No DSA investigation conclusion. No finding of wrongdoing. No judicial review. Just political pressure converted to platform compliance.

This is the new model of digital censorship: governments don't need laws prohibiting speech when regulatory frameworks create strong enough incentives for platforms to voluntarily remove content that makes officials uncomfortable.

The DSA was supposed to bring transparency and procedural safeguards to platform moderation. Instead, we're watching it become a tool for preemptive algorithmic filtering of political speech—targeting not just coordinated foreign operations but entire categories of AI-assisted content on controversial political topics.

As we warned in our Internet Bill of Rights analysis, the current wave of "safety" legislation—from the UK's Online Safety Act to the EU's Digital Services Act—represents control masquerading as protection. These laws share common features: vague definitions of harmful content, enforcement through private intermediaries, and systematic erosion of due process rights.

If the European Commission acts on Poland's request for interim measures, it will confirm that the DSA permits government-directed content suppression disguised as systemic risk mitigation. That precedent will outlast any specific investigation.

And the algorithmic filtering infrastructure required to implement these measures—real-time AI detection, semantic analysis, sentiment classification, distribution controls—doesn't disappear when the investigation concludes. Once built, it becomes a permanent capability available for the next government official who decides certain political opinions pose "threats to democratic processes."

The influence operation playbook relies on confidence and certainty. The antidote is epistemic humility—the ability to remain comfortable with uncertainty and maintain critical thinking.

But when platforms algorithmically suppress content before users ever encounter it, they don't build resilience. They create information vacuums that make audiences more vulnerable to manipulation, not less.

Related Coverage:

- The EU's Trusted Flagger System: When "Potentially Illegal" Becomes Policy

- Digital Compliance Alert: UK Online Safety Act and EU Digital Services Act Cross-Border Impact

- Brussels Set to Charge Meta Under Digital Services Act

- Global Digital Compliance Crisis: How EU/UK Regulations Reshape US Operations

- TikTok Privacy Configuration: Technical Deep Dive

Key Takeaways:

- Poland requested DSA investigation and interim measures to limit AI-generated Polexit content

- TikTok removed the Prawilne_Polki account before any formal investigation was opened, demonstrating preemptive censorship through regulatory pressure

- The Prawilne_Polki operation followed the classic influence campaign playbook: target identification, emotional priming, cognitive narrowing, and identity fusion

- The requested measures would require algorithmic filtering of political speech categories, not behavior-based enforcement against coordinated inauthentic networks

- DSA's 6% revenue penalty structure incentivizes platforms to over-comply with government requests

- AI-generated content is being framed as inherently suspect regardless of creator, message, or authenticity

- Precedent could enable government-requested filtering of political viewpoints across Europe under "systemic risk" justification

- The countermeasure to influence operations—epistemic humility and critical thinking—is undermined by preemptive algorithmic suppression

- Algorithmic filtering infrastructure, once built, becomes permanent and available for future political content control

Technical Resources: