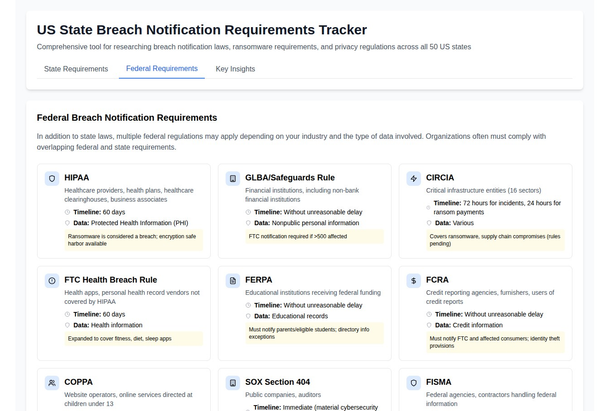

Navigating the Technical Landscape of EU AI Act Compliance

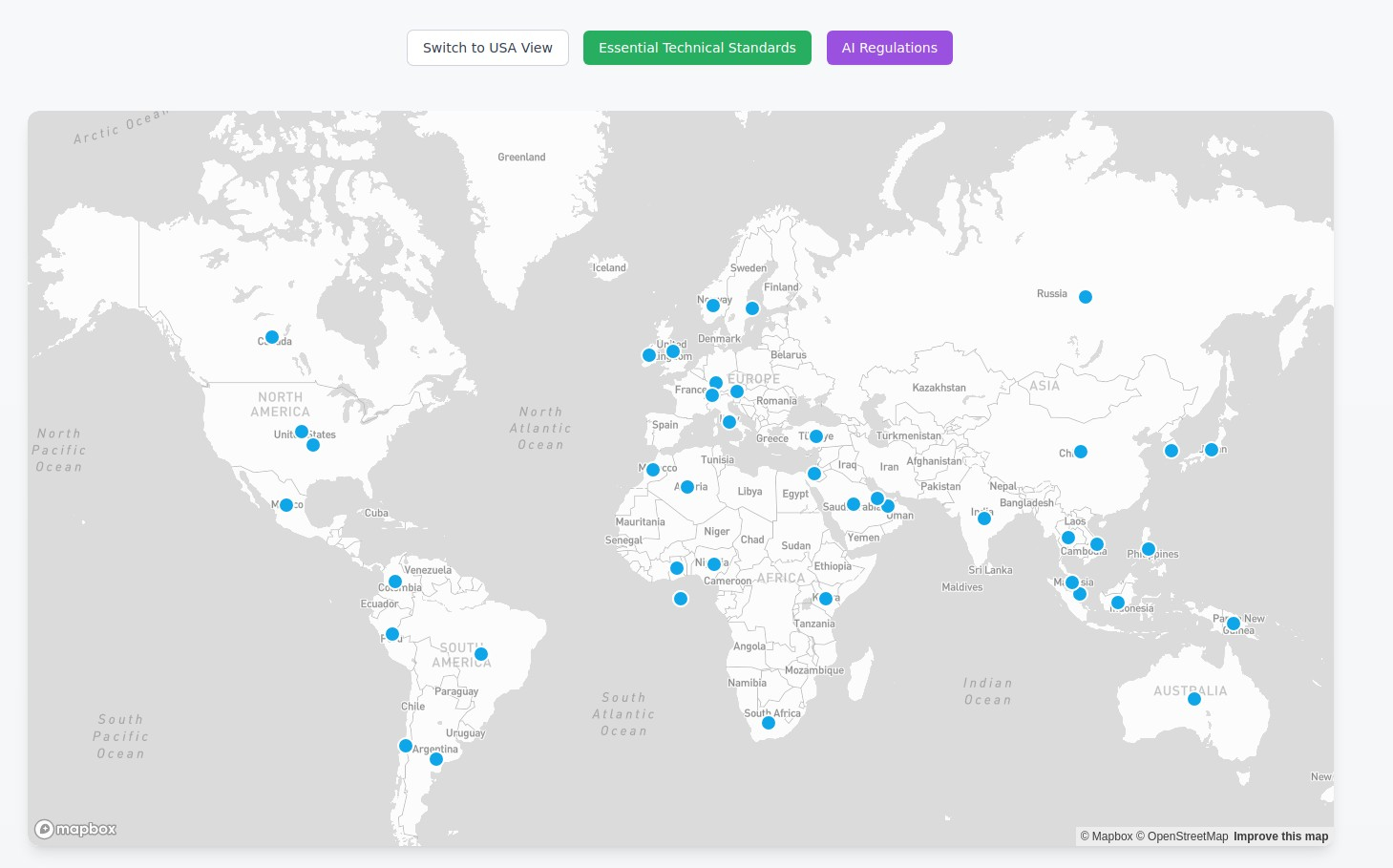

The European Union’s Artificial Intelligence Act (EU AI Act) is poised to reshape the development, deployment, and use of AI systems within the EU and for organizations whose AI outputs are used within the EU. Compliance with this regulation necessitates a deep understanding of its technical definitions, risk classifications, and the specific obligations imposed on various actors across the AI value chain. This article provides an in-depth look at the technical compliance aspects of the EU AI Act.

Defining the Scope: What Constitutes an “AI System”?

At its core, the EU AI Act applies to “AI systems,” defined as “a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments”. This definition is intentionally technology-neutral and innovation-proof, aiming to distinguish AI systems from simpler traditional software or rule-based programming. Notably, systems based solely on rules defined by natural persons to automatically execute operations are excluded.

Furthermore, the Act specifically addresses “General-purpose AI models” (GPAI models), which are defined as “an AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are placed on the market”. This includes large generative AI models that can flexibly generate content and handle diverse tasks. Specific rules apply to GPAI models, even when they are integrated into AI systems.

The Risk-Based Approach: Classifying AI Systems

The EU AI Act adopts a risk-based approach to regulation. AI systems and GPAI models are classified based on the level of risk they pose and their intended purpose. This classification is not mutually exclusive, meaning a system can fall into multiple categories simultaneously (e.g., high-risk and subject to transparency obligations). The assessment of this classification must be performed on a case-by-case basis, documented, regularly reviewed, and kept up-to-date.
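Because the categories described in this section can overlap, a classification record needs to hold several of them at once and carry its own review date. The following is a minimal sketch of such a record, not a prescribed format: the class names, field names, example system, and the 180-day review interval are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Flag, auto


class RiskCategory(Flag):
    """Categories used in this article; a Flag lets one system hold several."""
    PROHIBITED = auto()
    HIGH_RISK = auto()
    TRANSPARENCY = auto()
    LIMITED_RISK = auto()
    GPAI = auto()


@dataclass
class ClassificationRecord:
    """One documented, case-by-case assessment for a single AI system."""
    system_name: str
    categories: RiskCategory
    rationale: str
    assessed_on: date
    # Hypothetical internal policy; the review interval is not taken from the Act.
    review_interval_days: int = 180

    def review_due(self, today: date) -> bool:
        """Return True once the assessment is older than the review interval."""
        return today >= self.assessed_on + timedelta(days=self.review_interval_days)


# Hypothetical example: a system that is both high-risk and subject to
# transparency obligations.
record = ClassificationRecord(
    system_name="exam-proctoring-assistant",
    categories=RiskCategory.HIGH_RISK | RiskCategory.TRANSPARENCY,
    rationale="Monitors student behavior during tests and interacts with students.",
    assessed_on=date(2025, 1, 15),
)
```

Membership can then be checked with `RiskCategory.HIGH_RISK in record.categories`, and `record.review_due(date.today())` can drive a periodic review reminder.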

The key risk categories are:

- Prohibited AI Systems: These are AI systems deemed to pose unacceptable risks and will be banned in the EU. Examples include AI systems that deploy subliminal techniques beyond a person’s consciousness or are purposefully manipulative, and certain AI systems for real-time remote biometric identification in publicly accessible spaces for law enforcement (with limited exceptions). Compliance here means not developing, placing on the market, putting into service, or using such systems within the EU.

- High-Risk AI Systems: These systems are subject to stringent pre-market and post-market obligations. They are categorized into two main areas:

  - AI systems intended to be used as a safety component of a product, or which are themselves products, covered by the specific EU legislation listed in Annex I and subject to a third-party conformity assessment procedure. For those listed in Annex I Section B, the requirements of the EU AI Act will be integrated into the product legislation's technical specifications and procedures.

  - AI systems listed in Annex III, which are deemed to pose a high risk of harm to health, safety, or fundamental rights. Examples include AI systems used for assessing education levels, monitoring student behavior during tests, and potentially those used in employment, essential private and public services, and law enforcement (Annex III of the Act contains the full list). Providers who believe their Annex III AI system is not high-risk must document their assessment but will still need to register it.

- AI Systems Subject to Specific Transparency Obligations: These systems are subject to certain disclosure requirements. This category includes:

  - AI systems intended to interact directly with natural persons (with exceptions for systems authorized by law for crime detection/prevention). Providers and deployers must design these systems to inform individuals that they are interacting with an AI system, unless it is obvious to a reasonably well-informed person.

  - AI systems generating synthetic audio, image, video, or text content (including GPAI) (with exceptions for assistive editing tools, systems that don't substantially alter input, and those authorized for law enforcement). Providers must mark these outputs in a machine-readable and detectable format as artificially generated or manipulated, employing effective, interoperable, robust, and reliable technical solutions. Deployers must disclose that the content has been artificially generated or manipulated, with exceptions for artistic/satirical content where disclosure doesn't hinder enjoyment.

  - Emotion recognition systems and biometric categorization systems (with exceptions for legally permitted law enforcement uses with appropriate safeguards). Providers and deployers have obligations related to informing individuals exposed to these systems.

- Limited-Risk AI Systems: For these systems, the EU AI Act doesn't impose specific obligations beyond the AI literacy obligation. Providers and deployers must take measures to ensure their staff have the necessary skills and understanding regarding AI deployment, opportunities, risks, and potential harm.

- GPAI Models: These models are subject to specific rules, with additional obligations for those classified as having systemic risk. A GPAI model is presumed to have high-impact capabilities (and therefore to pose systemic risk) if the cumulative computation used for its training exceeds 10^25 floating point operations. Models can also be designated as posing systemic risk based on equivalent capabilities or impact, considering the number of parameters, dataset quality and size, and training computation. Providers of GPAI models meeting the systemic risk criteria must notify the European Commission within two weeks. The European Commission can also designate models as posing systemic risk on its own initiative or following a scientific panel alert, and a list of such models will be published.
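The 10^25 floating point operations threshold lends itself to a quick back-of-the-envelope check. The sketch below assumes the widely used rule of thumb that training a dense model costs roughly 6 × parameters × training tokens; that heuristic is an assumption introduced here, not something the Act prescribes, and cumulative compute may include more than a single pre-training run. The function names and the example model are hypothetical.

```python
# Presumption threshold for high-impact capabilities, as stated in the article.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate via the 6 * N * D rule of thumb (an assumption, not from the Act)."""
    return 6.0 * n_parameters * n_training_tokens


def presumed_high_impact(training_flops: float) -> bool:
    """Return True when cumulative training compute exceeds the threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical model: 70 billion parameters trained on 15 trillion tokens.
flops = estimate_training_flops(70e9, 15e12)
print(f"estimated training compute: {flops:.2e} FLOPs")
print("presumed high-impact:", presumed_high_impact(flops))
```

An estimate like this is only a first screen; a provider close to the threshold would need to account for its actual measured compute across all training stages.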

Obligations Across the AI Value Chain

The EU AI Act applies to all actors across the AI value chain, including providers, deployers, importers, distributors, and authorized representatives. The applicability is not solely based on location within the EU but also on the use of an AI system's output within the EU. The qualification of an organization (e.g., as a provider or deployer) must be assessed for each specific AI system or GPAI model and documented, as a single organization can have different roles depending on the AI concerned.

Providers are natural or legal persons who develop an AI system or GPAI model and place it on the market or put it into service in the EU under their own name or trademark. This includes providers located inside or outside the EU whose AI system's output is intended to be used in the EU. Providers of high-risk AI systems have extensive obligations:

- Implement a risk management system to identify, estimate, evaluate, and mitigate risks throughout the AI system's lifecycle. This includes examining and mitigating potential biases.

- Establish data governance and management practices for training, validation, and testing data, ensuring they meet quality criteria.

- Draft technical documentation containing elements specified in Annex IV, which may be simplified for SMEs.

- Design the AI system with specific logging capabilities, particularly for remote biometric identification systems.

- Design the AI system to ensure transparency, enabling deployers to interpret its output and use it appropriately, and draft instructions for use with comprehensive information.

- Design the AI system to ensure effective human oversight to prevent or minimize risks to health, safety, and fundamental rights.

- Design and implement technical and organizational measures to ensure an appropriate level of accuracy, robustness, and cybersecurity throughout the AI system's lifecycle, including resilience against errors, faults, biases, and unauthorized third-party attempts to exploit vulnerabilities. Accuracy levels and metrics must be declared.

- Implement a Quality Management System encompassing policies, procedures, and instructions covering regulatory compliance, design control, development, testing, technical specifications, and record-keeping.

- Undergo a Conformity Assessment prior to placing the system on the market or putting it into service.

- Draw up an EU declaration of conformity and affix the CE marking to the AI system.

- Inform relevant stakeholders and implement corrective actions in case of non-conformity or risk, including withdrawal or recall.

- Establish a post-market monitoring system to actively collect and analyze data on the AI system's performance throughout its lifetime to ensure ongoing compliance. This system must be based on a post-market monitoring plan.

- Report serious incidents to national market surveillance authorities and investigate them.

- Indicate their name, registered trade name/trademark, and address on the AI system. If this isn't possible on the system itself, it must be on the packaging or accompanying documentation.

- For providers established outside the EU, appoint an authorized representative within the EU.

- Design the AI system in compliance with EU law accessibility requirements.

- Implement AI literacy measures.
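The logging obligation in the list above can be approached with structured, machine-readable events. The following is a minimal sketch using only Python's standard library; every field name, the logger name, and the example values are hypothetical, and the Act does not prescribe this schema.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Optional


def build_log_event(system_id: str, model_version: str, input_ref: str,
                    output_summary: str, reviewer: Optional[str] = None) -> dict:
    """Assemble one structured audit event (all field names are illustrative)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "model_version": model_version,
        "input_ref": input_ref,            # a reference to the input, not the raw data
        "output_summary": output_summary,
        "human_reviewer": reviewer,        # who verified the result, if anyone
    }


# Emit events as one JSON object per line so they can be parsed later.
logger = logging.getLogger("ai_system.audit")
logger.setLevel(logging.INFO)
logger.addHandler(logging.StreamHandler())

event = build_log_event("cv-screening-01", "2.3.1", "doc:8841", "shortlisted", "j.doe")
logger.info(json.dumps(event))
```

Storing a reference to the input rather than the input itself is a design choice that keeps personal data out of the log stream; whether that is sufficient depends on the system's intended purpose and the retention rules that apply to it.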

Providers of GPAI models also have specific obligations:

- Draft technical documentation of the GPAI model, including training, testing processes, and evaluation results, as detailed in Annex XI. This documentation must be kept up-to-date.

- Draft and make available information and documentation to downstream providers who integrate the GPAI model into their AI systems, enabling them to understand the model's capabilities and limitations and comply with their own obligations.

- Implement a copyright and related rights policy, outlining steps taken to comply with EU copyright law, including the reservation of rights for text and data mining. This applies regardless of where the copyright-relevant acts occurred if the model is placed on the EU market.

- Draft and make publicly available a detailed summary of the content used for training the GPAI model, facilitating copyright holders' ability to exercise their rights.

- For providers established outside the EU, appoint an authorized representative within the EU.

- Providers of GPAI models with systemic risk have additional obligations:

  - Continuously assess possible systemic risks at the EU level throughout the model's lifecycle and implement mitigation measures.

  - Perform GPAI model evaluations to identify and mitigate systemic risks, including adversarial testing, prior to placing the model on the market.

  - Keep track of, document, and report serious incidents related to the GPAI model to competent authorities.

  - Notify the European Commission within two weeks if the model meets or will meet the criteria for systemic risk.

Deployers of high-risk AI systems also have specific responsibilities:

- Implement technical and organizational measures to ensure the AI system is used in accordance with the instructions for use.

- Assign responsibility to competent individuals to oversee the AI system and provide them with necessary support.

- Implement practices to ensure data quality of the input data they control, ensuring it's relevant and representative.

- Monitor the operation of the AI system based on the instructions for use and inform the provider as per their post-market monitoring plan.

- Inform relevant stakeholders and suspend the use of the AI system if they have reason to believe its use may result in a risk to health, safety, or fundamental rights.

- Report serious incidents to relevant stakeholders immediately.

- Keep logs of the AI system's operation according to its intended purpose and their obligations.

- Where applicable, register in the EU database for high-risk AI systems before putting the system into service or using it (this primarily applies to public authorities, EU institutions, and those acting on their behalf for Annex III systems, excluding critical infrastructure). If the system isn't already registered, they must not use it and must inform the provider or distributor.

- Inform individuals that they will be subject to the use of the AI system when it's used to make or assist in decisions related to them, including the purpose and type of decisions, and inform them about their right to an explanation.

- Implement AI literacy measures.

- Certain deployers (public bodies/private entities providing public services using Annex III AI, and those using AI for creditworthiness evaluation or risk assessment/pricing for life/health insurance) must conduct a Fundamental Rights Impact Assessment (FRIA) prior to deployment and notify the competent market surveillance authority.

Importers must verify the provider's compliance before placing high-risk AI systems on the EU market, ensure the system's compliance while under their responsibility, indicate their details on the system, keep relevant documentation, and inform stakeholders in case of risk.

Distributors must verify the provider's and importer's compliance before making high-risk AI systems available, ensure the system's compliance while under their responsibility, inform stakeholders and implement corrective actions in case of non-conformity or risk.

Authorized Representatives (for providers established outside the EU) must be appointed by written mandate, provide this mandate to authorities upon request, verify the provider's compliance (including technical documentation and conformity assessment), keep relevant documentation, and register high-risk AI systems in the EU database. They must also terminate the mandate if the provider infringes the EU AI Act and inform the AI Office.

Cybersecurity Considerations for AI Compliance

While the EU AI Act focuses on safety and fundamental rights risks, Trend Micro's AI security blueprint and the World Economic Forum's report on AI and cybersecurity highlight the critical role of cybersecurity in ensuring the reliability and trustworthiness of AI systems. Technical compliance with the EU AI Act's requirements for robustness and accuracy (Article 15 for high-risk AI) inherently involves addressing cybersecurity risks.

The Trend Micro blueprint outlines a six-layer cybersecurity framework for AI applications, emphasizing the need to secure data, AI models, infrastructure, users, access to AI services, and defend against zero-day exploits. Implementing measures like Data Security Posture Management (DSPM), container security, AI Security Posture Management (AI-SPM), deepfake detection, endpoint security, AI gateways, and network intrusion detection/prevention systems (IDS/IPS) is crucial for a holistic approach to AI security.

The World Economic Forum report stresses that cybersecurity requirements should be considered in tandem with business requirements for AI adoption. Organizations need to understand their business context, identify potential risks and vulnerabilities (including those specific to AI like data poisoning, prompt injection, and model evasion), assess negative impacts, and implement mitigation options throughout the AI lifecycle ("shift left, expand right and repeat"). Basic cyber hygiene remains foundational, but specific controls need to be tailored and new ones developed to address AI-related cyber risks.

Enforcement and Key Dates

Compliance with the EU AI Act will be enforced by national market surveillance authorities and the AI Office. Significant penalties can be imposed for non-compliance, with fines reaching up to EUR 35 million or 7% of total worldwide annual turnover for non-compliance with the prohibition of AI practices. Non-compliance with obligations for providers, deployers, importers, distributors, or authorized representatives of high-risk AI systems, and for those with specific transparency obligations, can result in fines up to EUR 15 million or 3% of turnover. Supplying incorrect, incomplete, or misleading information carries fines up to EUR 7.5 million or 1% of turnover. In each tier the higher of the two amounts applies, except for small and medium-sized enterprises, including startups, for which the lower of the two applies.
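The fine ceilings above reduce to simple arithmetic. The sketch below computes the maximum fine for each tier, assuming that the higher of the fixed amount and the turnover percentage applies in general and that the lower of the two applies to SMEs and startups; the tier keys, function name, and example turnover figures are hypothetical.

```python
# (fixed ceiling in EUR, percentage of total worldwide annual turnover)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "operator_obligation": (15_000_000, 3),
    "misleading_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Return the ceiling of the fine for a tier, not the fine actually imposed."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_based = worldwide_turnover_eur * percent / 100
    # Assumption: SMEs and startups get the lower amount, everyone else the higher.
    if is_sme:
        return min(fixed_cap, turnover_based)
    return max(fixed_cap, turnover_based)


# Hypothetical large company with EUR 2 billion turnover.
large = max_fine_eur("prohibited_practice", 2_000_000_000)
# Hypothetical SME with EUR 10 million turnover.
small = max_fine_eur("prohibited_practice", 10_000_000, is_sme=True)
print(large, small)
```

These are upper bounds only; the amount actually imposed depends on the circumstances of the infringement as assessed by the authority.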

The EU AI Act entered into force on August 1, 2024, with application dates staggered across its provisions. Key dates include:

- February 2, 2025: Entry into application of general provisions (including the AI literacy obligation) and prohibited AI practices.

- August 2, 2025: Entry into application of obligations applicable to General-Purpose AI (GPAI) models, governance provisions, and penalties (except for GPAI provider fines). EU Member States must have designated their National Competent Authorities by this date.

- February 2, 2026: Deadline for the European Commission to adopt a template for the post-market monitoring plan.

- August 2, 2026: Entry into application of obligations for high-risk AI systems (Annex III), AI systems subject to transparency obligations, measures for innovation support, the EU Database for High-Risk AI Systems, remedies, and codes of conduct/guidelines. The provision on fines for providers of GPAI models also begins to apply on this date, one year after the GPAI obligations themselves. Operators of high-risk AI systems placed on the market before this date only need to comply if the systems undergo significant design changes after this date.

- August 2, 2027: Entry into application of obligations for high-risk AI systems intended as safety components of products under Annex I and subject to third-party conformity assessment. Providers of GPAI models placed on the market before August 2, 2025, must comply by this date.

- August 2, 2030: Providers and deployers of high-risk AI systems intended for use by public authorities must comply by this date. Operators of AI systems that are components of large-scale IT systems in the area of Freedom, Security, and Justice, and that were placed on the market or put into service before August 2, 2027, must comply by December 31, 2030.
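A staggered timeline like this is easy to encode so that a compliance team can ask which milestones already apply on a given day. The sketch below covers only a subset of the milestones listed above; the labels are paraphrased, and the function names are hypothetical.

```python
from datetime import date
from typing import List, Optional, Tuple

# A subset of the milestones from the list above (labels paraphrased).
MILESTONES: List[Tuple[date, str]] = [
    (date(2025, 8, 2), "Obligations for GPAI models"),
    (date(2026, 8, 2), "High-risk AI systems (Annex III) and transparency obligations"),
    (date(2027, 8, 2), "High-risk AI systems as safety components under Annex I"),
    (date(2030, 8, 2), "High-risk AI systems intended for use by public authorities"),
    (date(2030, 12, 31), "AI components of large-scale IT systems"),
]


def applicable_on(today: date) -> List[str]:
    """Return the labels of all milestones whose date has been reached."""
    return [label for start, label in MILESTONES if today >= start]


def next_milestone(today: date) -> Optional[Tuple[date, str]]:
    """Return the earliest milestone strictly after today, or None if none remain."""
    upcoming = [(start, label) for start, label in MILESTONES if start > today]
    return min(upcoming) if upcoming else None


print(applicable_on(date(2026, 1, 1)))
print(next_milestone(date(2026, 1, 1)))
```

Keeping the dates in a single table makes it straightforward to update the schedule if the legislator amends it.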

Conclusion: A Proactive and Multi-faceted Approach to Compliance

Technical compliance with the EU AI Act demands a comprehensive and proactive approach. Organizations must meticulously assess their AI systems and GPAI models against the Act’s definitions and risk classifications. Understanding the specific obligations for their roles as providers, deployers, importers, distributors, or authorized representatives is crucial. Furthermore, integrating robust cybersecurity measures is essential for meeting the Act’s requirements for accuracy and robustness and for ensuring the overall trustworthiness of AI systems. Early inventory, classification, gap analysis, and continuous monitoring of regulatory developments are vital steps towards achieving and maintaining compliance. By embracing a multi-faceted approach that combines technical understanding, risk management, and robust security practices, organizations can navigate the new regulatory landscape of AI and foster responsible innovation within the EU.