Navigating the Golden State's Digital Future: A 2025 Compliance Deep Dive into California's Privacy and AI Legislation

As California's legislative session concludes for the year, the state reaffirms its position as a pioneering force in digital regulation, pushing forward an array of ambitious bills aimed at shaping data privacy and artificial intelligence (AI) across the nation. For compliance professionals, understanding these developments is not merely an option but a necessity, as California's stringent laws frequently set de facto national standards for businesses operating across the United States. This in-depth look explores the key legislative measures from the 2025 session, highlighting their implications and the ongoing challenges in their implementation.

The Evolving Data Privacy Landscape: Strengthening Consumer Control

California continues to strengthen consumer privacy rights, building upon its foundational California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA). The 2025 session saw significant advancements aimed at empowering individuals and increasing transparency, particularly in how personal data is collected, used, and shared.

- Universal Opt-Out Signals (AB 566 – The California Opt Me Out Act):

- The Mandate: Passed by the legislature and effective January 1, 2027, AB 566 requires browser developers to include a readily locatable and configurable setting that enables consumers to send an opt-out preference signal to businesses. This addresses the long-standing problem of consumers being "overwhelmed" by having to opt out of data sharing at hundreds or thousands of individual companies. The California Privacy Protection Agency (CPPA), the bill's sponsor, emphasizes that this will allow consumers to "exercise their opt-out rights at all businesses with which they interact online in a single step".

- Influence & Challenges: This bill resembles one that Governor Newsom vetoed last year over concerns about extending the mandate to operating system developers; the 2025 version focuses specifically on browsers and browser engines. Its success could establish a universal browser-level privacy control, pressuring major browser vendors like Google (Chrome), Apple (Safari), and Microsoft (Edge), which collectively hold over 90% of the desktop market share, to natively support these signals. This could significantly reduce "opt-out friction" and empower consumers nationwide to manage their data.

- Expanded Data Broker Transparency (SB 361):

- The Mandate: SB 361, passed by the legislature and slated to go into effect on January 1, 2026, dramatically expands the disclosure requirements for data brokers when they register annually with the CPPA. Brokers will now need to reveal whether they collect sensitive personal information, such as account logins, government identification numbers, citizenship data, union membership status, sexual orientation, gender identity, and biometric data.

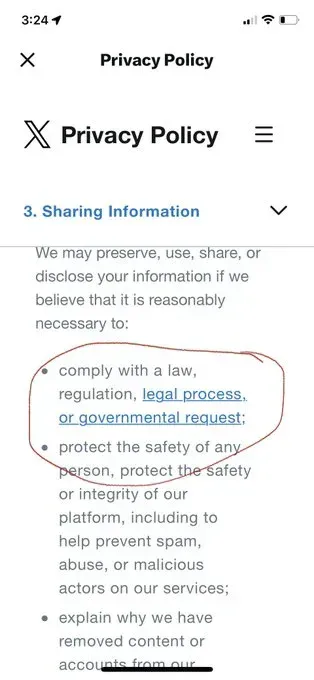

- Influence & Impact: Crucially, data brokers must also disclose whether, in the past year, they have sold or shared consumer data with foreign actors, federal or state governments, law enforcement, or developers of generative AI systems or models. This information will be publicly accessible on the CPPA's website, increasing transparency in an often-opaque industry. This bill complements the Delete Act (SB 362, 2023), which established a centralized deletion mechanism that lets consumers ask all registered data brokers to delete their personal information through a single request, with triennial compliance audits beginning January 1, 2028. The increased transparency could force data brokers nationwide to adopt similar disclosure practices.

- Insurance Consumer Privacy Protection Act of 2025 (SB 354) – Failed:

- The Intent: Though this bill failed to pass the legislature in the 2025 session, its provisions highlight ongoing legislative interest in modernizing privacy standards in data-intensive sectors. SB 354 aimed to establish a comprehensive framework for insurance licensees and their third-party service providers, including data minimization practices, written records retention policies, opt-in consent for non-insurance transaction purposes, and clear prohibitions against "dark patterns" to obtain consent. It also sought to require specific reasons for adverse underwriting decisions and detailed disclosures of information used.

- Challenges: The bill faced opposition from various insurance industry groups, including the American Council of Life Insurers and the California Chamber of Commerce, concerned about its impact on joint marketing activities and the broad scope of entities covered. Despite its failure, its detailed approach could serve as a blueprint for future efforts to regulate insurance privacy in California or other states, particularly given that existing insurance privacy laws date back to 1980 and are considered inadequate for the digital age.

- CIPA Data Privacy Amendment (SB 690) – Failed:

- The Intent: SB 690, which failed to advance this year, aimed to amend the California Invasion of Privacy Act (CIPA) by providing a "commercial business purpose" exception for standard online technologies like session replay, chatbots, cookies, and pixels. This was intended to curb a "flood of lawsuits" leveraging CIPA's outdated wiretap provisions against modern online practices.

- Challenges: The bill was amended to apply only prospectively, meaning it would not affect existing lawsuits. Consumer privacy groups opposed it, arguing it would put "the safety and privacy of millions of Californians at risk". Its failure means businesses still face significant legal risks under CIPA until the law is modernized.
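
To make the opt-out preference signal discussed under AB 566 more concrete, here is a minimal sketch of how a business's web server might detect such a signal and adjust its data handling. It assumes the signal arrives as the Global Privacy Control request header `Sec-GPC: 1`; the discussion above does not name a specific signal format, so treat that choice as an assumption. The function names and the policy categories are hypothetical, chosen only for illustration.

```python
from typing import Dict, Mapping


def has_opt_out_signal(headers: Mapping[str, str]) -> bool:
    """Return True when the request carries an opt-out preference signal.

    Assumption: the signal is the Global Privacy Control header `Sec-GPC: 1`.
    """
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


def allowed_processing(headers: Mapping[str, str]) -> Dict[str, bool]:
    """Hypothetical policy helper: decide which data uses stay enabled."""
    opted_out = has_opt_out_signal(headers)
    return {
        # Selling and sharing are switched off when the signal is present.
        "sale_of_personal_data": not opted_out,
        "cross_context_sharing": not opted_out,
        # In this sketch, strictly necessary processing ignores the signal.
        "essential_processing": True,
    }
```

A request handler could call `allowed_processing(request_headers)` once per request and pass the result to downstream advertising or analytics code, so the opt-out decision is made in one place.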

California's Ambitious Stride into AI Regulation: Transparency, Accountability, and Child Safety

The 2025 legislative session saw a strong focus on AI, reflecting California's proactive approach to regulating this rapidly advancing technology. Lawmakers addressed concerns about algorithmic bias, deceptive bots, and the protection of children in AI-driven environments.

- Age Verification Signals (AB 1043 – The Digital Age Assurance Act):

- The Mandate: Passed by the legislature and effective January 1, 2027, this act requires operating system providers to collect age information from account holders (parents/guardians for minors) and provide a privacy-preserving age bracket signal to apps in covered app stores. Developers are then "deemed to have actual knowledge of the user’s age range" upon receiving this signal. The bill as amended removed earlier parental consent requirements that had raised First Amendment concerns.

- Influence & Challenges: This "device-based tagging" approach is designed to be privacy-preserving by avoiding direct collection of government IDs or biometrics, and resistant to circumvention by minors. It aims to place age certification "further up the tech stack," potentially absolving individual apps of some liability and creating a consistent mechanism for parental controls across various applications. However, groups like Chamber of Progress raised concerns that such mechanisms could be "weaponized" in abusive households, cutting off vulnerable youth (e.g., LGBTQ+ youth) from vital online support networks. The Motion Picture Association (MPA) also expressed concerns about potential confusion with existing content rating systems. Despite these concerns, Meta and Snap supported the bill, viewing it as a "more balanced and privacy-protective approach" than other age verification laws. This bill could lead to a standardized approach for online age verification across the U.S.

- Companion Chatbots for Children (AB 1064 – LEAD for Kids Act & SB 243):

- The Mandate: These two complementary bills, both passed by the legislature, target AI chatbots interacting with minors. AB 1064 specifically prohibits operators from making companion chatbots available to children (under 18) if they are foreseeably capable of:

- Encouraging self-harm, suicidal ideation, violence, drug/alcohol consumption, or disordered eating.

- Offering unsupervised mental health therapy or discouraging professional help.

- Promoting illegal activity or creating child sexual abuse materials.

- Engaging in erotic or sexually explicit interactions.

- Prioritizing user validation over factual accuracy or safety.

- Optimizing engagement that supersedes safety guardrails.

- SB 243 requires operators to notify users if they are interacting with a bot (if not obvious), disclose suitability for minors, prevent harmful content, and institute measures against sexually explicit interactions with minors. It also mandates annual disclosures to the Office of Suicide Prevention and provides a private right of action for violations.

- Influence: These bills set a high bar for child safety in AI development, particularly for interactive AI, and could drive companies nationwide to implement similar safety guardrails for AI tools used by children.

- AI in Employment (SB 7 – The "No Robo Bosses Act"):

- The Mandate: This bill, passed by the legislature, requires employers to provide written notice to employees and job applicants when using automated decision systems (ADS) for employment-related decisions. It also creates a right to access certain data used by an ADS and receive post-use notices for disciplinary, termination, or deactivation decisions.

- Influence: SB 7 could establish a national model for regulating AI in the workplace, focusing on transparency and employee rights concerning algorithmic decision-making. The bill warrants close analysis by compliance teams, particularly where it overlaps with existing CCPA regulations on automated decision-making technologies. Opposition from groups like CCIA cited concerns that the "broad language" could apply to low-risk ADS and burden small businesses.

- Transparency in Frontier AI (SB 53 – The Transparency in Frontier AI Act):

- The Mandate: As a successor to a previously vetoed measure (SB 1047), SB 53, passed by the legislature, requires "large artificial intelligence (AI) developers" to publish safety frameworks, disclose transparency reports, and report critical safety incidents to the Office of Emergency Services. It also creates enhanced whistleblower protections for employees reporting AI safety violations and establishes a consortium for public AI research ("CalCompute").

- Influence: This bill reflects an evolving approach to AI safety and could influence national standards for responsible AI development, particularly for "frontier models" that pose credible risks, by mandating transparency and incident reporting. Earlier versions faced strong opposition, with warnings that such legislation could "hurt the California economy and state leadership in the global artificial intelligence (AI) industry".

- AI Liability (AB 316):

- The Mandate: This bill, passed by the legislature, explicitly states that in an action against a defendant whose AI caused harm, it shall not be a defense to assert that "the artificial intelligence autonomously caused the harm to the plaintiff".

- Influence: This could significantly shape legal liability frameworks for AI-related harms nationally, preventing developers from disclaiming responsibility by citing AI autonomy.

- Bot Disclosure (AB 410) – Failed:

- The Intent: This bill, which failed to pass the legislature in the 2025 session, aimed to broaden bot disclosure requirements. It would have mandated that all bots disclose their artificial nature before interacting with a person, truthfully answer queries about their identity, and refrain from misrepresenting themselves as human. The bill was supported by the National Youth AI Council and the California State Association of Psychiatrists.

- Challenges: AB 410 faced significant opposition, including from the Electronic Frontier Foundation (EFF), which argued that an "across-the-board disclosure requirement" could violate the First Amendment, particularly if not limited to malicious or deceptive chatbots. Concerns about "technical feasibility" and the potential for unwittingly assigning "personhood" to GenAI bots were also raised. The bill's failure reflects the complexities of regulating AI-generated speech and balancing transparency with free speech rights. Despite stalling, the underlying concern about deceptive AI and bots is a national issue that will likely continue to inform future legislation.
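
The age bracket signal described under AB 1043 can be illustrated with a short sketch of the underlying idea: the operating system holds the birth date and shares only a coarse bracket with apps. The bracket names and boundaries below are hypothetical, picked for illustration rather than taken from the bill text, and the function names are likewise assumptions.

```python
from datetime import date
from enum import Enum


class AgeBracket(Enum):
    # Hypothetical bracket names and boundaries, for illustration only.
    UNDER_13 = "under_13"
    AGE_13_TO_15 = "13_to_15"
    AGE_16_TO_17 = "16_to_17"
    ADULT = "18_plus"


def age_in_years(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    years = today.year - birth_date.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def bracket_signal(birth_date: date, today: date) -> AgeBracket:
    """Map a birth date to a coarse bracket; the birth date itself is not returned."""
    age = age_in_years(birth_date, today)
    if age < 13:
        return AgeBracket.UNDER_13
    if age < 16:
        return AgeBracket.AGE_13_TO_15
    if age < 18:
        return AgeBracket.AGE_16_TO_17
    return AgeBracket.ADULT
```

An app receiving only the `AgeBracket` value learns enough to apply age-appropriate defaults without ever seeing a birth date, government ID, or biometric data, which is the privacy-preserving property the bill's supporters emphasize.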

Overarching Challenges and Compliance Imperatives

California's aggressive legislative agenda, while positioning the state as a global leader in tech regulation, also highlights several persistent challenges for advancing privacy and AI laws.

- Constitutional Scrutiny (First Amendment Concerns): Many proposed bills, especially those touching on speech or content (like AB 302, SB 435, AB 1043, and AB 410), consistently encounter First Amendment challenges. Courts have historically scrutinized laws that broadly restrict speech to protect children, as they can amount to an "unnecessarily broad suppression of speech addressed to adults". The distinction between "publicly available" and "sensitive" information, as debated in SB 435, also underscores the complex interplay between privacy and the right to publish truthful information.

- Technical Feasibility and Operational Burdens: Implementing novel regulatory mandates presents significant technical and operational hurdles. This includes establishing reliable and privacy-preserving age verification mechanisms without requiring intrusive data like government IDs (AB 1043). Similarly, mandating universal opt-out signals in browsers (AB 566) requires cooperation from major tech companies and seamless integration. Rapid data deletion requirements (as seen in AB 302's initial 72-hour proposal) can also pose significant challenges for businesses and government agencies.

- Industry Opposition and Innovation Concerns: A diverse array of organizations, including business, realtor, and technology groups (e.g., California Chamber of Commerce, TechNet, Computer & Communications Industry Association, Lenovo), frequently oppose these bills. Their concerns often center on the compliance costs, potential stifling of innovation, and the sheer complexity of adapting to new regulations. Critics argue that overly broad regulations could "shackle its AI companies with a patchwork of conflicting state regulations".

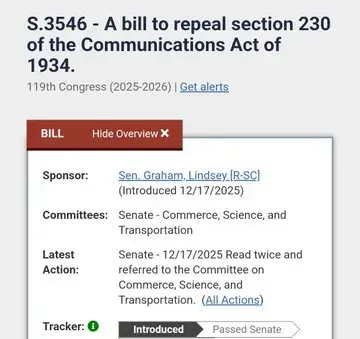

- The "Patchwork" Problem and Federal Preemption: California's proactive approach contributes to a growing "patchwork of inconsistent AI standards" and privacy laws across states. This inconsistency creates duplicative costs and significant compliance burdens for businesses operating nationally, leading to calls for federal preemption to streamline regulation. The recent exclusion of a federal moratorium on state-level AI regulation from the One Big Beautiful Bill Act (OBBBA) in July 2025 further highlights this tension.

In conclusion, California's 2025 legislative session underscores its unwavering commitment to leading the charge in digital governance. The bills that have passed and those that stalled illustrate a complex balancing act between protecting individual rights, ensuring public safety, and fostering technological innovation. For compliance professionals, staying abreast of these developments is paramount, as California's legislative actions are not just local mandates but often serve as a crucial bellwether for national trends and necessitate proactive adjustments in corporate policy and practice across the country.