Navigating the EU AI Act: A Comprehensive Guide for Deployers of High-Risk AI Systems

The European Union's Artificial Intelligence Act (EU AI Act) marks a significant milestone in the regulation of AI technologies. While much attention has been focused on AI providers, deployers of high-risk AI systems face equally important responsibilities. This guide breaks down the key requirements and considerations for deployers under the EU AI Act.

Understanding the Deployer's Role

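Deployers are natural or legal persons, public authorities, agencies, or other bodies that use an AI system under their authority, excluding purely personal, non-professional use. Under the EU AI Act, deployers of high-risk AI systems have specific obligations to ensure these systems are used safely and in compliance with the law.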

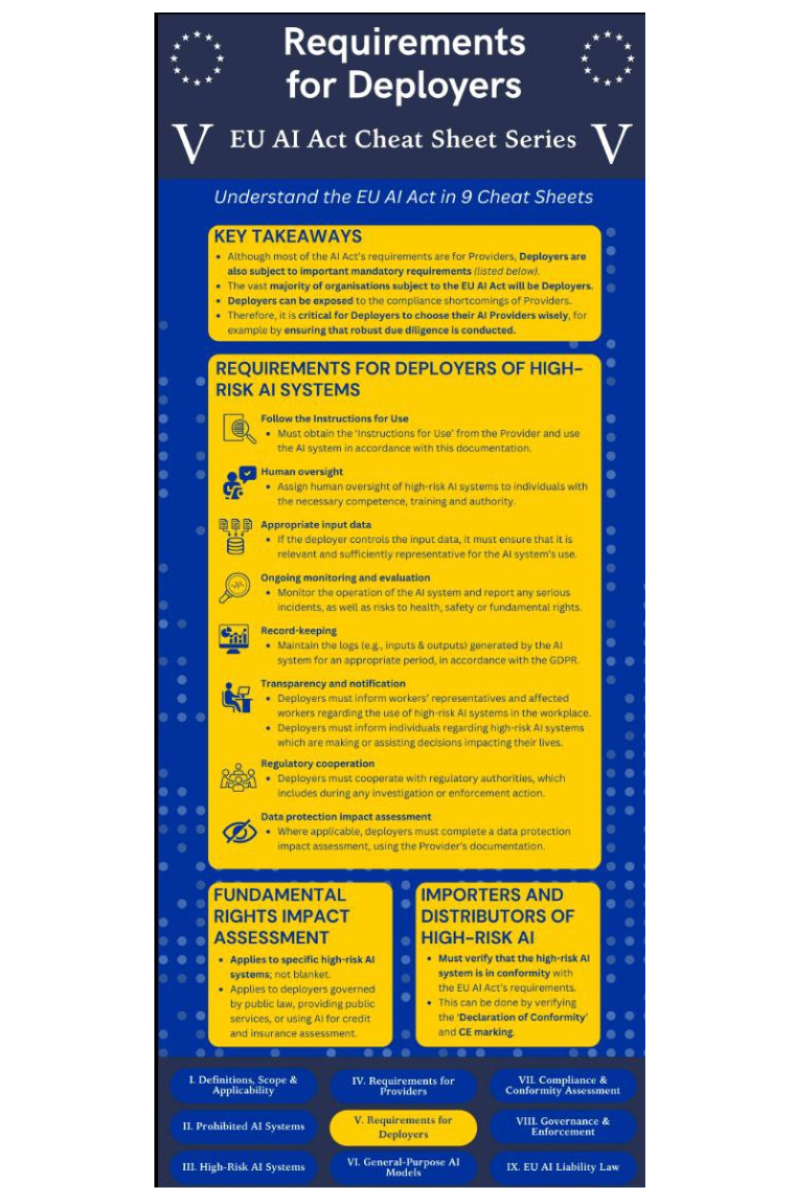

Key Takeaways for Deployers:

- Widespread Impact: The majority of organizations affected by the EU AI Act will be deployers, not providers.

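- Shared Responsibility: Deployers remain responsible for how they use a system even when a problem originates with the provider. A deployer that puts its own name or trademark on a high-risk system, substantially modifies it, or changes a system's intended purpose so that it becomes high-risk takes on the provider's obligations.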

- Due Diligence is Crucial: Careful selection and ongoing monitoring of AI providers is essential for deployers.

Core Requirements for Deployers of High-Risk AI Systems

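- Adherence to Instructions
  - Obtain the provider's "Instructions for Use" and take appropriate technical and organizational measures to use the system in accordance with them.

- Human Oversight
  - Assign oversight of high-risk AI systems to people with the necessary competence, training, and authority, and give them the support they need.

- Data Quality Management
  - To the extent you control the input data, ensure it is relevant and sufficiently representative for the AI system's intended purpose.

- Continuous Monitoring
  - Monitor the system's operation on the basis of the instructions for use. If you have reason to believe it presents a risk to health, safety, or fundamental rights, inform the provider or distributor and the relevant market surveillance authority without undue delay and suspend its use. Report serious incidents immediately, first to the provider and then to the authorities.

- Comprehensive Record-Keeping
  - Keep the logs automatically generated by the system, to the extent they are under your control, for a period appropriate to its intended purpose and of at least six months, unless other Union or national law (including data protection law) provides otherwise.

- Transparency and Communication
  - Before using a high-risk AI system in the workplace, inform workers' representatives and the affected workers that they will be subject to it.
  - Inform individuals when a high-risk AI system listed in Annex III makes, or assists in making, decisions about them.

- Regulatory Compliance
  - Cooperate with the competent authorities in any action they take in relation to the system.

- Data Protection Impact Assessment
  - Where the GDPR requires a data protection impact assessment, carry it out using the information the provider must supply about the system.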
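Log retention is one of the more mechanical obligations. Under Article 26(6) of the final text, deployers keep the logs a high-risk system generates automatically, to the extent those logs are under their control, for at least six months unless other Union or national law provides otherwise. The minimal sketch below computes the earliest date a log record could be deleted under that floor. The `LogRecord` type and the function names are hypothetical, and the check covers only the AI Act minimum, not any other retention rule (for example under the GDPR or sector-specific law) that may also apply.

```python
import calendar
from dataclasses import dataclass
from datetime import date

# AI Act minimum (Article 26(6)); other law may require a different period.
MIN_RETENTION_MONTHS = 6


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


@dataclass
class LogRecord:
    system_id: str  # hypothetical identifier for the AI system
    created: date   # date the log entry was generated


def earliest_deletion_date(record: LogRecord, min_months: int = MIN_RETENTION_MONTHS) -> date:
    """First date on which the AI Act minimum retention has elapsed."""
    return add_months(record.created, min_months)


def retention_minimum_met(record: LogRecord, today: date,
                          min_months: int = MIN_RETENTION_MONTHS) -> bool:
    """True once the AI Act floor has passed; other rules may still apply."""
    return today >= earliest_deletion_date(record, min_months)


record = LogRecord(system_id="cv-screening-01", created=date(2025, 8, 31))
print(earliest_deletion_date(record))                    # 2026-02-28
print(retention_minimum_met(record, date(2026, 1, 15)))  # False
```

Clamping to the last day of the month (31 August plus six months gives 28 February) errs on the side of a slightly earlier date; a team that prefers to err toward longer retention could round up to the first day of the following month instead.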

Additional Considerations

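- Fundamental Rights Impact Assessment: Required before first use for deployers that are public bodies or private entities providing public services, and for deployers of systems used to evaluate creditworthiness or establish credit scores, or to assess risk and set prices in life and health insurance.

- Importers and Distributors: Although they are not deployers, importers and distributors must verify that high-risk AI systems conform with the EU AI Act before making them available, checking the required documentation and CE marking.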

The Broader Context of the EU AI Act

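The final text, Regulation (EU) 2024/1689, is organized into 13 chapters and 13 annexes, covering everything from definitions and scope to governance, enforcement, and penalties. Deployers should familiarize themselves with the overall structure, paying particular attention to Chapter III on high-risk AI systems, which sets out the classification rules (Article 6 and Annex III), the deployer obligations (Article 26), and the fundamental rights impact assessment (Article 27).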

Steps for Ensuring Compliance

- Conduct a thorough inventory of all AI systems in use within your organization.

- Identify high-risk AI systems based on the EU AI Act's classification rules (Article 6 and Annex III).

- Implement robust governance structures for overseeing AI deployment.

- Establish clear processes for monitoring, reporting, and addressing potential issues.

- Invest in training for staff involved in AI system deployment and oversight.

- Maintain open communication channels with AI providers to stay updated on system changes and potential risks.

- Regularly review and update your AI governance policies to reflect evolving regulations and best practices.

The EU AI Act represents a significant shift in the AI regulatory landscape, placing substantial responsibilities on deployers of high-risk AI systems. By understanding and proactively addressing these requirements, organizations can not only ensure compliance but also harness the benefits of AI technologies responsibly and ethically.

As the AI landscape continues to evolve, staying informed and adaptable will be key to successfully navigating the regulatory environment. Deployers who embrace these responsibilities will be well-positioned to lead in the ethical and effective use of AI technologies in the European market and beyond.


Detailed Breakdown of High-Risk AI Systems

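To help deployers better understand their obligations, here is what constitutes a high-risk AI system under the EU AI Act. Annex III lists the following areas:

- Biometrics, such as remote biometric identification and emotion recognition, where not prohibited

- Critical infrastructure, such as safety components in road traffic or in the supply of water, gas, heating, or electricity

- Education and vocational training

- Employment, worker management, and access to self-employment

- Access to essential private and public services and benefits, such as credit scoring

- Law enforcement

- Migration, asylum, and border control management

- Administration of justice and democratic processes

In addition, AI systems that are safety components of products (or are themselves products) covered by the EU product legislation listed in Annex I, and that require third-party conformity assessment, are high-risk.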

Deployers should carefully assess whether their AI applications fall into these categories.
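A first-pass screen can flag which inventoried use cases touch these high-risk areas so that they go to legal review. The sketch below is deliberately conservative: it only tags candidates, because whether a system is actually high-risk depends on the detailed wording of Annex III and the exceptions in Article 6(3), which a tag match cannot decide. The area tags and the `screen_use_case` function are hypothetical.

```python
# Hypothetical tags for the Annex III areas plus Annex I safety components.
HIGH_RISK_AREAS = {
    "biometrics",
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border",
    "justice_democracy",
    "product_safety_component",
}


def screen_use_case(name: str, area_tags: set[str]) -> dict:
    """Flag a use case for legal review if any tag matches a high-risk area."""
    matches = sorted(area_tags & HIGH_RISK_AREAS)
    return {
        "use_case": name,
        "matched_areas": matches,
        # A match means "review needed", not a legal classification.
        "needs_legal_review": bool(matches),
    }


print(screen_use_case("CV ranking tool", {"employment", "hr_analytics"}))
# {'use_case': 'CV ranking tool', 'matched_areas': ['employment'], 'needs_legal_review': True}
```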

Specific Examples of Deployer Responsibilities

Let's illustrate some requirements with concrete examples:

- Human Oversight:
  - Example: In an AI-driven recruitment system, ensure a human HR professional reviews the AI's recommendations and has the authority to override them.

- Input Data Management:
  - Example: For a credit scoring AI, regularly update and verify the accuracy of the customer financial data the system uses.

- Incident Reporting:
  - Example: If a diagnostic AI system's error contributes to serious harm to a patient's health, treat it as a serious incident: immediately inform the provider first, then the relevant market surveillance authority.

Risk Management System

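The formal risk management system in Article 9 is a provider obligation. Deployers are not bound by it, but adopting a similar internal process supports the monitoring duties described above. Such a process can include:

- Identification and analysis of known and reasonably foreseeable risks to health, safety, or fundamental rights

- Estimation and evaluation of risks that may emerge when the system is used as intended or under reasonably foreseeable misuse

- Evaluation of other risks that may arise, based on analysis of monitoring data

- Adoption of appropriate, targeted risk management measures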

Technical Documentation Requirements

Technical documentation (Article 11 and Annex IV) is drawn up by providers. Deployers should obtain and keep the parts relevant to their use, including:

- A general description of the AI system

- Detailed information on the system's elements

- The version(s) of relevant software or firmware

- A description of relevant changes made to the system through its lifecycle

Penalties for Non-Compliance

It's crucial to understand the potential consequences of non-compliance:

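- Fines of up to €35 million or 7% of worldwide annual turnover (whichever is higher) for engaging in prohibited AI practices

- Fines of up to €15 million or 3% of worldwide annual turnover (whichever is higher) for non-compliance with other obligations, including the deployer obligations in Article 26

- Fines of up to €7.5 million or 1% of worldwide annual turnover (whichever is higher) for supplying incorrect, incomplete, or misleading information to authorities

- For SMEs and start-ups, the lower of the two amounts applies in each tier

- Market surveillance authorities can also require that a non-compliant system be withdrawn, recalled, or restricted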
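The "whichever is higher" rule is simple arithmetic, but it means the turnover-based figure dominates for large organizations. The sketch below computes the upper bound of one fine tier; the function name is hypothetical, and the example uses the Article 99 tier covering deployer obligations in the final text (€15 million or 3% of worldwide annual turnover). These are caps only; actual fines are set case by case.

```python
def max_fine_eur(turnover_eur: int, fixed_cap_eur: int, percent: int, is_sme: bool = False) -> int:
    """Upper bound of a fine tier: the higher of the fixed cap and the
    turnover-based cap, or the lower of the two for SMEs and start-ups."""
    turnover_cap = turnover_eur * percent // 100  # integer euros, whole percentages
    if is_sme:
        return min(fixed_cap_eur, turnover_cap)
    return max(fixed_cap_eur, turnover_cap)


# Tier for breaches of operator obligations such as Article 26: EUR 15M or 3%.
large = max_fine_eur(2_000_000_000, 15_000_000, 3)            # turnover cap wins
small = max_fine_eur(20_000_000, 15_000_000, 3, is_sme=True)  # SME: lower amount
print(large, small)  # 60000000 600000
```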

Interaction with Other EU Regulations

Deployers should be aware of how the AI Act interacts with other EU regulations:

- General Data Protection Regulation (GDPR)

- Product Liability Directive (revised in 2024 to cover software)

- Machinery Directive (being replaced by the Machinery Regulation (EU) 2023/1230)

- Medical Devices Regulation

Understanding these interactions is crucial for comprehensive compliance.

Timeline for Implementation

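The AI Act was published in the Official Journal as Regulation (EU) 2024/1689, and its obligations apply in stages:

- Entry into force: 1 August 2024

- Prohibited AI practices and AI literacy obligations: 2 February 2025

- Obligations for general-purpose AI models, governance, and penalties: 2 August 2025

- Most remaining provisions, including deployer obligations for high-risk systems listed in Annex III: 2 August 2026

- High-risk systems that are safety components of products covered by Annex I: 2 August 2027

Deployers should start preparing well in advance of the dates that apply to their systems.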

Case Studies:

Case Study: FinTech Innovations - Implementing Oversight for AI-Driven Credit Scoring

FinTech Innovations, a leading European financial services company, uses an AI-driven credit scoring system to assess loan applications. To comply with the EU AI Act, they implemented the following measures:

- Human Oversight: Established a dedicated "AI Ethics Committee" comprising senior loan officers, data scientists, and legal experts. This committee reviews all AI-generated credit scores, with the authority to override decisions.

- Transparency: Developed a clear, jargon-free explanation of how the AI system influences credit decisions, which is provided to all loan applicants.

- Data Quality: Implemented a rigorous data verification process, including regular audits of input data to ensure relevance and representativeness.

- Continuous Monitoring: Deployed an AI monitoring tool that flags unusual patterns or potential biases in credit scoring outcomes for immediate human review.

- Training: Conducted comprehensive training for all staff involved in the loan approval process on the AI system's functionality, limitations, and potential biases.

- Documentation: Created a detailed log of all AI-driven decisions, including the rationale behind any human interventions, ensuring full auditability.

These measures not only ensured compliance with the EU AI Act but also improved the overall fairness and accuracy of FinTech Innovations' credit scoring process.
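The monitoring tool in this case study is described only at a high level. As one hypothetical way such a tool could flag potential bias, the sketch compares approval rates across applicant groups and queues the batch for human review when the lowest group's rate falls below 80% of the highest. That 80% threshold borrows the "four-fifths" heuristic from US employment practice; it is an assumption, not a requirement of the AI Act, and a production monitor would also need significance testing and legally appropriate group definitions.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """decisions: (group label, approved?) pairs -> approval rate per group."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparity_flag(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> tuple[float, bool]:
    """Return (lowest/highest approval-rate ratio, needs human review?)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0, False  # no approvals in any group: rates are equal
    ratio = min(rates.values()) / highest
    return ratio, ratio < threshold


batch = [("A", True)] * 50 + [("A", False)] * 50 + [("B", True)] * 30 + [("B", False)] * 70
ratio, review = disparity_flag(batch)
print(round(ratio, 2), review)  # 0.6 True
```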

Case Study: HealthAI Solutions - Ensuring Transparency in AI Diagnostic Tools

HealthAI Solutions, a healthcare technology provider, offers AI-powered diagnostic tools to hospitals across Europe. To align with the EU AI Act's transparency requirements, they:

- Explainable AI: Developed a new version of their diagnostic AI that provides clear, understandable explanations for its conclusions, allowing healthcare professionals to trace the logic behind each diagnosis.

- Patient Communication: Created patient-friendly reports that explain in simple terms how AI contributed to their diagnosis, empowering patients to understand and discuss their treatment.

- Healthcare Provider Training: Launched a comprehensive training program for healthcare providers using their AI tools, focusing on interpreting AI outputs and understanding the system's limitations.

- Real-time Performance Metrics: Implemented a dashboard that displays the AI system's performance metrics in real-time, including accuracy rates and any detected anomalies.

- Collaborative Diagnosis Protocol: Established a protocol requiring human medical professionals to review and confirm all AI-generated diagnoses before communication to patients.

- Incident Reporting System: Developed a streamlined system for healthcare providers to report any concerns or discrepancies in AI diagnoses, with a dedicated team to investigate and address issues promptly.

These initiatives not only met the EU AI Act's requirements but also significantly enhanced trust in HealthAI Solutions' products among healthcare providers and patients.

Case Study: PrecisionManufacture Corp - Adapting AI Quality Control Systems

PrecisionManufacture Corp, a large manufacturing company, uses AI for quality control in its production lines. To meet the new standards set by the EU AI Act, they:

- Risk Assessment: Conducted a comprehensive risk assessment of their AI quality control system, identifying potential impacts on product safety and worker interaction.

- Adaptive AI Model: Developed a more transparent AI model that can explain its decision-making process for flagging defective products, allowing for easier auditing and verification.

- Human-in-the-Loop Integration: Implemented a system where AI flags potential defects, but final decisions are made by trained quality control specialists, ensuring human oversight.

- Data Management: Established a robust data governance framework to ensure that the AI system is trained on up-to-date, representative data from all production lines.

- Continuous Monitoring and Reporting: Set up an automated system to monitor the AI's performance, generating weekly reports on accuracy, false positives/negatives, and any detected biases.

- Worker Engagement: Launched an initiative to educate workers about the AI system, including how it works, its limitations, and how to report concerns or anomalies.

- Compliance Documentation: Created comprehensive technical documentation of the AI system, including its development process, testing procedures, and ongoing performance metrics.

- Regulatory Cooperation: Established a dedicated team to liaise with regulatory authorities, ensuring open communication channels for inspections or inquiries.

By implementing these measures, PrecisionManufacture Corp not only ensured compliance with the EU AI Act but also improved the overall efficiency and reliability of their quality control processes.
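The weekly accuracy and false positive/negative figures in this case study can be derived by comparing each AI flag with the quality control specialist's final decision, treating the specialist's call as ground truth. That is an assumption (specialists can also err), and it presumes specialists also inspect a sample of unflagged parts, since otherwise missed defects are invisible. The function and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeeklyReport:
    accuracy: float
    false_positive_rate: float  # good parts wrongly flagged as defective
    false_negative_rate: float  # defective parts the AI did not flag


def weekly_report(items: list[tuple[bool, bool]]) -> WeeklyReport:
    """items: (ai_flagged_defect, specialist_confirmed_defect) per inspected part."""
    tp = sum(1 for ai, human in items if ai and human)
    fp = sum(1 for ai, human in items if ai and not human)
    fn = sum(1 for ai, human in items if not ai and human)
    tn = sum(1 for ai, human in items if not ai and not human)

    def safe_div(a: int, b: int) -> float:
        return a / b if b else 0.0  # avoid division by zero on empty weeks

    return WeeklyReport(
        accuracy=safe_div(tp + tn, len(items)),
        false_positive_rate=safe_div(fp, fp + tn),
        false_negative_rate=safe_div(fn, fn + tp),
    )


items = [(True, True)] * 8 + [(True, False)] * 2 + [(False, True)] * 1 + [(False, False)] * 89
report = weekly_report(items)
print(report)
```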

These case studies demonstrate practical approaches to implementing the EU AI Act's requirements across different sectors, highlighting the potential for improved processes and trust-building alongside regulatory compliance.

Disclaimer: This guide provides a general overview of the requirements for deployers of high-risk AI systems under the EU AI Act. For detailed guidance and legal advice, consult a qualified legal professional.