Google Exposes UK Government Censorship Demands

Tech Giant Accuses Labour Government and OFCOM of Threatening Free Speech Through Online Safety Act

Executive Summary

In a significant escalation of the ongoing transatlantic dispute over digital censorship, Google has publicly challenged the UK's Labour government and communications regulator OFCOM over what it characterizes as an overreach in content moderation requirements. The tech giant argues that OFCOM's interpretation of the Online Safety Act introduces a new category of "potentially illegal" content that Parliament never intended to capture, effectively requiring platforms to suppress legal speech.

This dispute arrives at a critical moment in US-UK relations: the White House has suspended implementation of the £31 billion Tech Prosperity Deal, citing concerns over Britain's approach to regulating American tech firms and their AI products.

Background: The Online Safety Act

The Online Safety Act, passed in October 2023, represents the UK's most significant attempt to regulate online content. Enforced by OFCOM, the independent media regulator, the legislation aims to protect users from harmful content including abuse, harassment, fraudulent activity, and hate offenses. Platforms face fines of up to 10% of their annual global revenue or £18 million—whichever is greater—for non-compliance.
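To make the "whichever is greater" rule concrete, the penalty ceiling can be sketched in a few lines of Python. This is an illustration only: the function and constant names are hypothetical, and it treats "annual global revenue" as a single figure in pounds, whereas the legal definition of qualifying revenue is more detailed.

```python
from decimal import Decimal

# Figures taken from the article: a fixed floor of £18 million
# or 10% of annual global revenue, whichever is greater.
FIXED_AMOUNT_GBP = Decimal("18000000")
REVENUE_SHARE = Decimal("0.10")


def max_fine_gbp(annual_global_revenue_gbp: Decimal) -> Decimal:
    """Return the maximum fine under the 'whichever is greater' rule.

    Assumption: revenue is already expressed in GBP as one number.
    """
    return max(FIXED_AMOUNT_GBP, annual_global_revenue_gbp * REVENUE_SHARE)


# A service with £50 million in revenue is still exposed to the £18 million figure,
# because 10% of its revenue (£5 million) is the smaller amount.
small_service = max_fine_gbp(Decimal("50000000"))

# A firm with £1 billion in revenue faces a ceiling of £100 million.
large_firm = max_fine_gbp(Decimal("1000000000"))
```

Under these assumptions the two limbs cross at £180 million in revenue: below that the fixed £18 million figure governs, above it the 10% figure does.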

The act came under particular scrutiny following the summer 2024 Southport killings, when three young girls were fatally stabbed at a Taylor Swift-themed dance class. Misinformation spread rapidly on social media, falsely claiming the attacker was a Muslim asylum seeker. The actual perpetrator was British-born Axel Rudakubana, with no connection to Islam or immigration. This misinformation contributed to far-right riots across multiple English cities, with mosques, asylum seeker accommodations, and immigration centers targeted.

OFCOM has since cited these events as justification for expanding its regulatory approach, particularly around content that spreads quickly during crisis situations.

Google's Position: A Threat to Free Expression

In its formal response to OFCOM's latest consultation, published in mid-December 2025, Google issued what observers describe as a "scathing criticism" of the regulatory approach. The company's key objections center on several fundamental concerns.

The "Potentially Illegal" Content Problem

OFCOM's proposals would require platforms to exclude posts flagged as "potentially illegal" from recommender systems (such as news feeds and algorithmic suggestions) until human moderators have reviewed them. Google contends this approach "appears to introduce a new category of 'potentially' illegal content that was not intended by Parliament to be captured."

The company argues this will "necessarily result in legal content being made less likely to be encountered by users, impacting users' freedom of expression, beyond what the [Online Safety] Act intended." In essence, Google is accusing OFCOM of regulatory overreach—creating obligations through guidance that exceed what the law actually requires.
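The mechanism in dispute can be sketched as a simple gate in front of a recommender. The following is a minimal illustration, not OFCOM's specification or any platform's actual system: the `Post` type, the `flagged` field, and the `ReviewOutcome` values are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class ReviewOutcome(Enum):
    CLEARED = "cleared"   # a human moderator found the post lawful
    ILLEGAL = "illegal"   # a human moderator found the post unlawful


@dataclass
class Post:
    post_id: str
    flagged: bool = False                    # hypothetical "indicator it might be illegal"
    review: Optional[ReviewOutcome] = None   # None means not yet reviewed


def eligible_for_recommendation(post: Post) -> bool:
    """Hold flagged posts out of recommendations unless review has cleared them."""
    if not post.flagged:
        return True
    return post.review is ReviewOutcome.CLEARED


def recommendable(posts: List[Post]) -> List[Post]:
    """Filter a candidate list down to posts the recommender may surface."""
    return [p for p in posts if eligible_for_recommendation(p)]


candidates = [
    Post("a"),                                              # never flagged
    Post("b", flagged=True),                                # flagged, awaiting review
    Post("c", flagged=True, review=ReviewOutcome.CLEARED),  # flagged, then cleared
    Post("d", flagged=True, review=ReviewOutcome.ILLEGAL),  # flagged, found illegal
]
surfaced_ids = [p.post_id for p in recommendable(candidates)]
```

In this sketch post "b" is withheld whether or not it is lawful, and stays withheld for as long as the review queue takes. That window is the gap Google's objection targets.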

Undermining User Rights

Google explicitly warned that OFCOM's proposals risk "undermining users' rights to freedom of expression." The company's position is that the regulatory framework, as currently interpreted, creates perverse incentives for platforms to over-moderate content. With potential fines reaching 10% of global revenue, platforms have strong financial motivation to err on the side of removing or suppressing content rather than risk regulatory action.

Economic Consequences

In earlier submissions to OFCOM, Google criticized the enforcement funding mechanism, stating: "The use of the worldwide revenue approach... risks stifling UK growth, and consequently affecting the quality and variety of services offered to UK users, by potentially driving services with low UK revenue out of the UK, or stopping companies from launching services in the UK."

OFCOM's Defense

OFCOM has pushed back against Google's characterization. A spokesperson stated: "There is nothing in our proposals that would require sites and apps to take down legal content. The Online Safety Act requires platforms to have particular regard to the importance of protecting users' right to freedom of expression."

The regulator justified its approach by pointing to the potential for rapid harm during crisis situations: "If illegal content spreads rapidly online, it can lead to severe and widespread harm, especially during a crisis. Recommender systems can exacerbate this. To prevent this from happening, we have proposed that platforms should not recommend material to users where there are indicators it might be illegal, unless and until it has been reviewed."

OFCOM acknowledged that many technology companies already operate systems to suppress "borderline" content, adding: "We recognise that some content which is legal and may have been engaging to users may also not be recommended to users as a result of this measure."

Transatlantic Implications: The $40 Billion Deal Suspension

This dispute does not exist in isolation. The White House has suspended implementation of the £31 billion ($40 billion) "Tech Prosperity Deal" agreed during President Trump's September 2025 state visit to Britain. American officials have expressed frustration with the Online Safety Act's potential impact on US technology firms.

According to sources familiar with the negotiations quoted by The Telegraph: "Americans went into this deal thinking Britain were going to back off regulating American tech firms but realized it was going to restrict the speech of American chatbots." The reference to AI chatbots relates to Technology Secretary Liz Kendall's December 2025 announcement that the government planned to "impose new restrictions on chatbots" to close perceived loopholes in the law.

Vice President JD Vance has previously accused Britain of pursuing a "dark path" on free expression, while State Department officials have indicated the administration is "monitoring developments in the UK with great interest" regarding free speech concerns.

Broader Context: The Global Censorship Debate

Google's public challenge to OFCOM represents a broader shift in Silicon Valley's approach to government content regulation. The company has recently moved away from fact-checking commitments in the European Union and announced it would allow previously banned YouTube accounts related to COVID-19 and 2020 US election misinformation to apply for reinstatement.

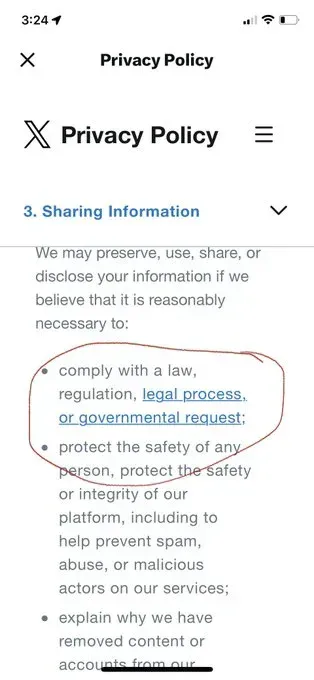

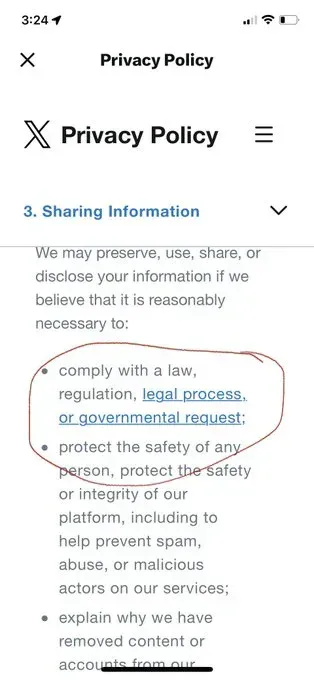

This positioning aligns Google more closely with Elon Musk's X (formerly Twitter), which has been vocal in its opposition to the Online Safety Act. X has claimed that "free speech will suffer" under the British regulatory framework.

The U.S. State Department's 2024 Human Rights Practices report, published in August 2025, explicitly criticized the Online Safety Act, stating the law "authorized UK authorities, including the Office of Communications (Ofcom), to monitor all forms of communication for speech they deemed 'illegal.'" The report characterized enforcement following the Southport attacks as an example of government censorship, noting that "censorship of ordinary Britons was increasingly routine, often targeted at political speech."

Critical Analysis: Where Does Truth Lie?

Several aspects of this dispute warrant careful examination from a cybersecurity and digital rights perspective.

The "Potentially Illegal" Standard

Google's core complaint—that OFCOM has introduced a content category Parliament never authorized—raises legitimate concerns. When regulators can effectively expand statutory definitions through guidance documents, it creates uncertainty for platforms and potentially chills legitimate speech. The Free Speech Union and other advocacy groups had warned of exactly this outcome before the act's implementation.

The Over-Moderation Incentive

With fines reaching 10% of global revenue, platforms face asymmetric risk. The cost of over-moderation (reduced user engagement, potential criticism from free speech advocates) is far lower than the cost of under-moderation (massive regulatory fines, potential criminal liability). This structural incentive makes it highly likely that some legal content will be suppressed.
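The asymmetry can be expressed as a break-even probability. The sketch below is a deliberately simplified model with hypothetical names and made-up input values: it assumes a platform weighs a fixed cost of suppressing a post against an expected penalty for leaving it up, which is not how fines are actually assessed.

```python
def suppression_threshold(cost_of_suppressing: float, penalty_if_illegal: float) -> float:
    """Probability of illegality above which suppressing is the cheaper option.

    Leaving a post up has expected cost p * penalty_if_illegal; suppressing it
    costs cost_of_suppressing. The two are equal at p = cost / penalty.
    """
    if penalty_if_illegal <= 0:
        raise ValueError("penalty_if_illegal must be positive")
    if cost_of_suppressing < 0:
        raise ValueError("cost_of_suppressing must be non-negative")
    return min(1.0, cost_of_suppressing / penalty_if_illegal)


# Illustrative values only: if suppression costs 1,000 units of lost engagement
# and the exposure is 1,000,000 units, the break-even probability is 0.1%.
threshold = suppression_threshold(1_000.0, 1_000_000.0)
```

In this toy model, the larger the penalty relative to the cost of suppression, the lower the threshold falls, so a platform acting purely on cost would suppress posts that are very probably legal.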

The Southport Precedent

OFCOM's reliance on the Southport riots as justification for expanded powers deserves scrutiny. While misinformation clearly contributed to the unrest, the solution of pre-emptively suppressing content pending human review raises its own concerns. Who decides what constitutes "indicators" of potentially illegal content? How quickly can human review occur at scale? What happens to time-sensitive political speech during elections or other critical moments?

The Irony of Google's Position

Google itself has a documented history of complying with government content removal requests worldwide, including requests from authoritarian governments. The company's sudden emergence as a free speech champion in the UK context reflects commercial interests as much as principled commitment. Nevertheless, the validity of an argument does not depend on the purity of the messenger's motives.

Implications for Organizations

For cybersecurity professionals and organizational leaders, this dispute highlights several important considerations:

Content moderation risk: Organizations operating platforms in the UK should anticipate continued regulatory uncertainty. Compliance frameworks must be flexible enough to adapt to evolving OFCOM guidance while documenting good-faith efforts to balance safety and expression.

Cross-border data considerations: The US-UK tech deal suspension signals that digital regulation is becoming a significant trade barrier. Organizations with transatlantic operations should monitor how this dispute affects data sharing, service availability, and regulatory harmonization efforts.

AI governance: The extension of the Online Safety Act to AI chatbots introduces new compliance requirements for organizations deploying conversational AI systems. Risk assessments should consider potential liability for AI-generated content.

Crisis communications: OFCOM's focus on "crisis response protocols" suggests organizations should develop clear policies for how their platforms will handle content during breaking news events, balancing the legitimate need to prevent harm with the risks of over-censorship.

Conclusion

Google's public challenge to OFCOM represents a significant moment in the ongoing global debate over platform regulation and free expression. The company's accusations—that the UK government is using vague regulatory guidance to suppress legal speech—echo concerns raised by civil liberties organizations, opposition politicians, and the US government itself.

Whether one views this as principled defense of digital rights or self-interested resistance to regulation depends largely on one's prior assumptions about platform power and government authority. What is clear is that the balance between online safety and free expression remains contested, and the UK's approach is emerging as a flashpoint in transatlantic relations.

For organizations operating in this space, the message is clear: regulatory uncertainty is the new normal, and compliance strategies must be built for flexibility rather than stability.

Sources

GB News: Google slams Labour over online free speech (December 21, 2025)

Reuters: US pauses implementation of $40 billion technology deal with Britain (December 15, 2025)

Wikipedia: Online Safety Act 2023

OFCOM: Statement: Protecting people from illegal harms online

US State Department: 2024 Country Reports on Human Rights Practices: United Kingdom

CNBC: UK considers tougher Online Safety Act after riots (August 2024)

Amnesty International UK: UK: X created a 'staggering amplification of hate' during the 2024 riots (August 2025)

Disclaimer: This article is provided for informational purposes only and does not constitute legal advice. Organizations should consult qualified legal counsel regarding compliance with the Online Safety Act and related regulations.