Global Child Safety Legislation Wave: July-August 2025 Compliance Guide

Executive Summary

The summer of 2025 marked a watershed moment for online child safety legislation, with major regulatory frameworks taking effect in the UK and EU and gaining significant momentum in the United States. This compliance guide examines the wave of legislation that came into force during July and August 2025, analyzing the immediate impacts on platforms, businesses, and users worldwide.

Key Developments Summary

UK Online Safety Act 2023 - Phase 2 Implementation

Effective Date: July 25, 2025

Status: Fully Enforced

EU Digital Services Act - Child Protection Guidelines

Effective Date: July 14, 2025

Status: Guidelines Published

US Legislation Pipeline

KOSA/COPPA 2.0: Reintroduced May 2025, awaiting House consideration

State-Level Actions: Louisiana lawsuit filed August 14, 2025

UK Online Safety Act: The Catalyst

What Changed on July 25, 2025

The UK's Online Safety Act 2023 entered its most significant enforcement phase, requiring platforms to implement comprehensive child protection measures. The first Protection of Children Codes of Practice for user-to-user and search services came into force, marking the completion of 'Phase 2' of Ofcom's implementation.

Immediate Compliance Requirements

Age Assurance Mandates:

- Platforms are now required to use highly effective age assurance to prevent children from accessing pornography or content that encourages self-harm, suicide, or eating disorders

- Providers had to complete their first children's risk assessments by July 24, 2025

Content Categories Under Restriction:

- Primary Priority Content: Pornographic content

- Priority Content: Bullying content

- Non-Designated Content: Content that promotes depression, hopelessness and despair
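One way to carry these categories into a moderation pipeline is a small lookup from content tier to a visibility rule. The sketch below is illustrative only: the `ContentTier` enum, the `visible_to` helper, and the conservative choice to hide all three tiers from anyone not confirmed as an adult are assumptions made for this example, not logic specified by Ofcom or the Act.

```python
from enum import Enum


class ContentTier(Enum):
    PRIMARY_PRIORITY = "primary_priority"  # e.g. pornographic content
    PRIORITY = "priority"                  # e.g. bullying content
    NON_DESIGNATED = "non_designated"      # e.g. content promoting despair
    GENERAL = "general"                    # everything else


# Hypothetical policy: restricted tiers are shown only to users whose
# adult status has been confirmed by an age assurance check.
RESTRICTED_TIERS = {
    ContentTier.PRIMARY_PRIORITY,
    ContentTier.PRIORITY,
    ContentTier.NON_DESIGNATED,
}


def visible_to(tier: ContentTier, adult_confirmed: bool) -> bool:
    """Return True if content in this tier may be shown to the user."""
    if tier in RESTRICTED_TIERS:
        return adult_confirmed
    return True
```

A real service would likely treat the tiers differently from one another (for example, by age band); collapsing them into one rule simply keeps the sketch short.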

Platform Response and Implementation

Immediate Industry Reaction: Some websites and apps introduced age verification systems for users in response to the July 25, 2025 deadline set by Ofcom. These include pornographic websites, but also other websites and services such as Bluesky, Discord, Tinder, Bumble, Feeld, Grindr, Hinge, Reddit, X, and Spotify.

Verification Methods Deployed:

- Government-issued ID uploads

- Face scans processed by third-party digital identity company Yoti to access content labelled 18+

- Email address analysis technology

- Third-party vendor verification services

Enforcement Actions

Ofcom has emphasised that it is "ready to enforce against any company which does not comply with age-check requirements by the deadline" and has already announced that it is formally investigating four providers of pornography sites who may not have implemented highly effective age assurance.

Unintended Consequences

Content Censorship Concerns: The day after the UK's Online Safety Act came into force, posts about protests outside hotels housing asylum seekers were reportedly hidden from younger users on platforms including X, raising concerns about over-broad content filtering.

VPN Usage Surge: Users increasingly turned to VPNs to bypass geolocation restrictions, prompting discussions about potential VPN usage limitations.

EU Digital Services Act: Child Protection Framework

July 14, 2025 Guidelines

On July 14, 2025, the European Commission published its final guidelines on the protection of minors under the Digital Services Act, establishing a comprehensive framework for child safety across EU member states.

Key Provisions

Risk Assessment Requirements:

- Platforms must conduct systematic risk assessments for child safety

- The Commission has made clear that it intends to use the Guidelines as a "significant and meaningful" benchmark when assessing in-scope providers' compliance with Article 28(1) DSA

Stakeholder Consultation Process: The Commission published the results of three consultations with various stakeholders, including a public consultation that ran from May 13 to June 15, 2025, and a call for evidence that ran from July 31 to September 30, 2024.

Implementation Timeline

The guidelines form part of a broader regulatory trend, with the Commission emphasizing coordination with other jurisdictions to strengthen online protection measures for children globally.

United States: Federal and State Action

Federal Legislation Status

KOSA (Kids Online Safety Act):

- On May 14, 2025, Senator Blumenthal reintroduced KOSA in the Senate for the 119th Congress (2025-2026), with some minor changes from the version that passed the chamber in 2024

- Previous Senate passage: July 30, 2024, on a 91–3 vote

COPPA 2.0 (Children and Teens' Online Privacy Protection Act):

- COPPA 2.0 was introduced to expand the age range covered by COPPA to minors under 17

- The Children and Teens' Online Privacy Protection Act was recently reintroduced in the Senate

Key Legislative Provisions

KOSA Requirements:

- Establish a "duty of care" for covered platforms, requiring them to "exercise reasonable care" in preventing/mitigating various harms, including the sexual exploitation and abuse of children

- Require covered platforms to put many features in place, enabled by default, to safeguard minors, including restricted communications and restricted public access to personal data
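The "enabled by default" requirement can be pictured as account settings that start in their most protective state for minors. This is a minimal sketch under stated assumptions: the `AccountSettings` fields and the `default_settings` helper are hypothetical names chosen for illustration, and the bill text does not prescribe any particular data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccountSettings:
    # Hypothetical settings standing in for "restricted communications"
    # and "restricted public access to personal data".
    messages_from_strangers: bool
    profile_publicly_visible: bool


def default_settings(is_minor: bool) -> AccountSettings:
    """Return starting settings; minors get the protective defaults."""
    if is_minor:
        return AccountSettings(
            messages_from_strangers=False,
            profile_publicly_visible=False,
        )
    return AccountSettings(
        messages_from_strangers=True,
        profile_publicly_visible=True,
    )
```

The point of the design is that a minor's account never requires an opt-in step to become protected; any loosening would be a deliberate change from the default.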

COPPA 2.0 Expansion:

- Bans online companies from collecting personal information from users under 17 years old without their consent. It bans targeted advertising to children and teens and creates an eraser button for parents and kids to eliminate personal information online

State-Level Developments: Louisiana Lawsuit

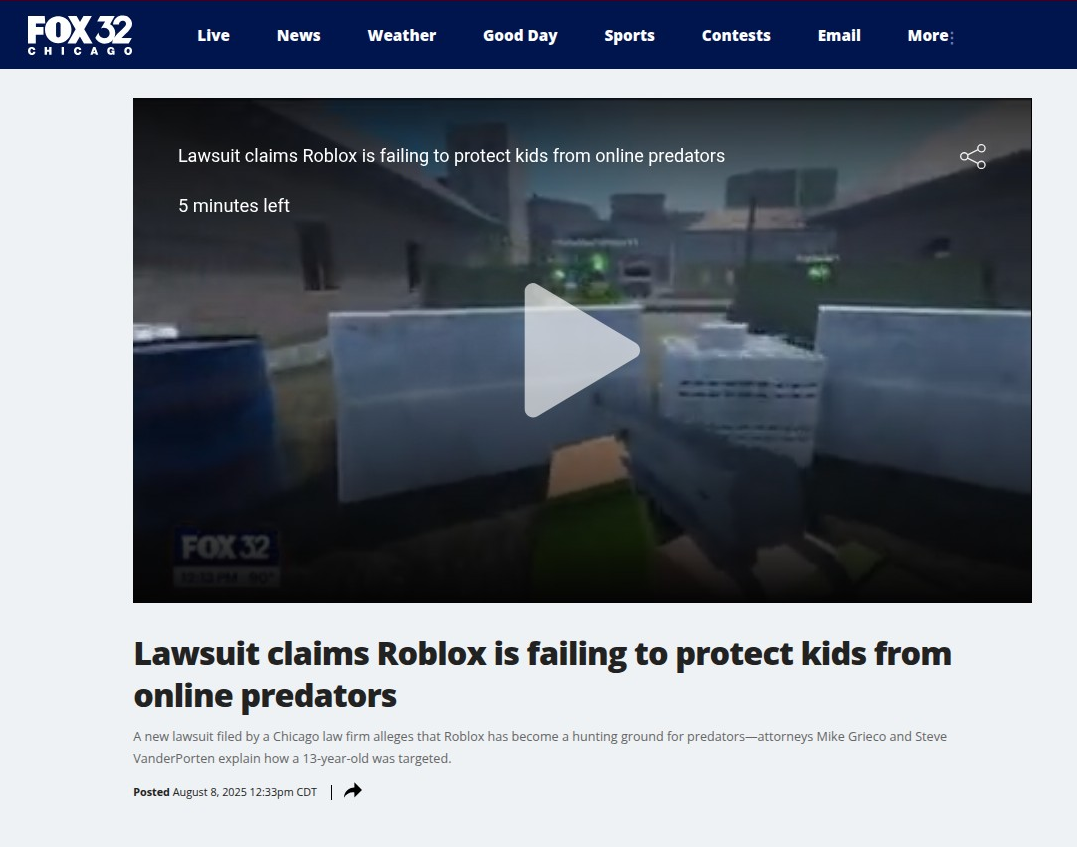

August 14, 2025 - Major Legal Action: Louisiana Attorney General Liz Murrill filed a child protection lawsuit against Roblox, accusing the gaming platform of enabling child exploitation and failing to protect young users.

Lawsuit Allegations: Roblox has and continues to facilitate distribution of child sexual abuse material and the sexual exploitation of Louisiana's children, fails to implement basic safety controls to protect child users from predators, and knowingly and intentionally fails to provide notice to parents and child users of its extreme dangers.

Specific Claims:

- Games with names like "Escape to Epstein Island, Diddy Party, and Public Bathroom Simulator Vibe" are often filled with sexually explicit material and simulated sexual activity

- A suspect was actively using the online platform Roblox, posing as a 14-year-old girl while using voice-altering technology

Political Coalition Building: U.S. Congressman Ro Khanna contacted both Schlep and fellow Roblox creator KreekCraft, launching a petition titled "Stand with Us to Protect Kids and Save Roblox" in response to the Roblox controversy.

Compliance Impact Analysis

Immediate Business Implications

Age Verification Technology Market: The legislation has created an immediate demand for robust age verification solutions, with companies like Yoti, Persona, and Kids Web Services (KWS) seeing increased adoption.

Platform Architecture Changes:

- Algorithm modifications to prevent harmful content recommendation to minors

- Default privacy setting adjustments for underage users

- Enhanced reporting mechanism implementations

Global Harmonization Trends

Cross-Border Enforcement: Ofcom has sent letters seeking to enforce the UK legislation against U.S.-based companies such as the right-wing platform Gab, indicating extraterritorial application of national laws.

Regulatory Convergence: The Guidelines form part of a broader trend in the EU and UK as regulators seek to strengthen online protection measures for children.

Critical Compliance Considerations

For Platform Operators

Risk Assessment Requirements:

- Immediate Action: Conduct comprehensive child safety risk assessments

- Ongoing Monitoring: Implement regular review cycles

- Documentation: Maintain detailed compliance records

Technical Implementation:

- Age Assurance Systems: Deploy effective age verification technology

- Content Filtering: Implement robust content moderation for child users

- Algorithm Adjustments: Modify recommendation systems to protect minors

For Businesses Using Platforms

Marketing Compliance:

- Review advertising targeting practices for users under 17

- Implement additional consent mechanisms for minor user data

- Audit current data collection practices

Data Governance:

- Implement "eraser button" functionality for parent and child data deletion requests

- Enhanced parental control mechanisms

- Transparency reporting requirements

Enforcement Landscape

Regulatory Authority Actions

UK Ofcom:

- Actively checking compliance immediately upon the Protection of Children Codes of Practice coming into force

- Four formal investigations launched into pornography site compliance

EU Commission:

- Using DSA guidelines as primary compliance benchmark

- Coordinating with member state regulators for enforcement

US State Attorneys General:

- Louisiana lawsuit establishing precedent for state-level action

- Murrill believes Louisiana is the first state to sue Roblox

Penalties and Consequences

UK Sanctions:

- Fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater

- Platform blocking capabilities for non-compliant services
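Because the UK fine ceiling is the greater of a fixed amount and a percentage of turnover, the effective cap depends on company size. The following is a rough sketch of that arithmetic; the function name `max_uk_fine` and the sample turnover figures are made up for illustration, and how turnover is measured in practice is a legal question this example does not address.

```python
from decimal import Decimal

FIXED_CAP_GBP = Decimal("18000000")  # £18 million
TURNOVER_RATE = Decimal("0.10")      # 10% of annual turnover


def max_uk_fine(annual_turnover_gbp: Decimal) -> Decimal:
    """Return the ceiling on a fine: the greater of the two limits."""
    return max(FIXED_CAP_GBP, annual_turnover_gbp * TURNOVER_RATE)


# Hypothetical companies: the fixed cap governs below £180 million
# turnover, and the percentage cap governs above it.
small = max_uk_fine(Decimal("50000000"))    # 10% is £5m, so the £18m cap applies
large = max_uk_fine(Decimal("500000000"))   # 10% is £50m, which exceeds £18m
```

The crossover sits at £180 million in turnover, the point where 10% equals the fixed £18 million figure.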

EU Enforcement:

- Significant penalties under DSA framework

- Potential service suspension for systemic non-compliance

US Legal Exposure:

- Courts may fine violators of COPPA up to $50,120 in civil penalties for each violation

- State-level lawsuits seeking damages and operational restrictions
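Per-violation penalties scale linearly, so a rough upper bound on COPPA exposure is simple multiplication. The sketch below uses the per-violation figure quoted above; the function name and the violation count are hypothetical, and what counts as a single violation is decided by regulators and courts rather than by a formula.

```python
COPPA_MAX_PER_VIOLATION_USD = 50_120  # figure quoted in this guide


def max_coppa_exposure(violation_count: int) -> int:
    """Return an upper bound on civil penalties for a number of violations."""
    if violation_count < 0:
        raise ValueError("violation_count must be non-negative")
    return violation_count * COPPA_MAX_PER_VIOLATION_USD


# Hypothetical scenario: 1,000 violations at the maximum rate.
exposure = max_coppa_exposure(1_000)  # 50,120,000 USD
```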

Industry Response and Adaptation

Major Platform Changes

Social Media Adaptations:

- Enhanced default privacy settings for minor accounts

- Restricted direct messaging capabilities

- Algorithm modifications to limit harmful content exposure

Content Platform Adjustments:

- Age-gated access to mature content

- Parental dashboard implementations

- Enhanced reporting mechanisms

Technology Sector Innovation

Age Verification Solutions: The regulatory requirements have accelerated innovation in privacy-preserving age verification technologies, including:

- Biometric age estimation

- Zero-knowledge proof systems

- Decentralized identity verification

Looking Forward: Q4 2025 and Beyond

Anticipated Developments

UK Phase 3 Implementation: The remainder of 2025 will be busy for Ofcom as it seeks to achieve various milestones within 'Phase 3' of its OSA implementation, which focuses on 'categorised' services.

US Legislative Progress:

- KOSA/COPPA 2.0 House consideration expected

- Additional state-level lawsuits anticipated

- Potential federal enforcement agency guidance

EU Expansion:

- Member state implementation of DSA child protection requirements

- Enhanced coordination with UK and US frameworks

Strategic Recommendations

For Compliance Teams:

- Immediate Audit: Assess current child safety measures against new requirements

- Technology Investment: Implement robust age verification and content filtering

- Process Enhancement: Establish ongoing compliance monitoring systems

- Legal Preparation: Prepare for potential enforcement actions and litigation

For Business Leaders:

- Budget Planning: Allocate resources for compliance technology and processes

- Risk Assessment: Evaluate exposure across multiple jurisdictions

- Stakeholder Communication: Develop transparent communication about child safety measures

- Innovation Opportunity: Consider child safety as a competitive differentiator

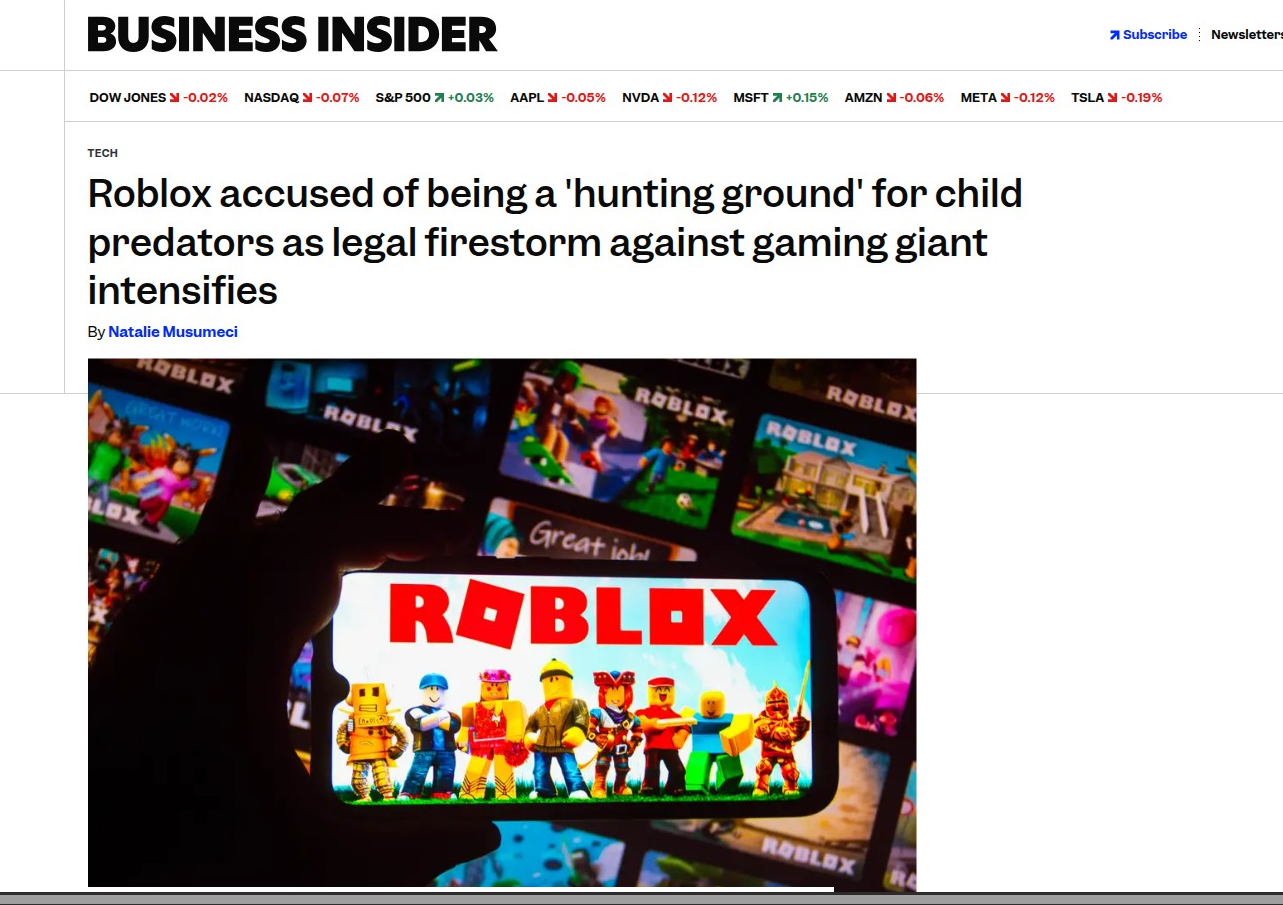

Case Study: Roblox - A Pattern of Systemic Safety Failures

Background: Long-Standing Safety Concerns

The Roblox controversy involving Schlep represents only the most recent chapter in a documented pattern of child safety failures dating back to 2018. This case study illustrates the escalating legal and regulatory risks facing platforms that fail to implement adequate child protection measures.

Timeline of Major Incidents

2020 - The Clinton McElroy Case: Clinton McElroy was arrested for trading Robux (Roblox's virtual currency) for explicit images from an 8-year-old child, according to police reports. This early case highlighted vulnerabilities in Roblox's virtual economy and user verification systems.

2022 - Arnold Castillo (DoctorRofatnik): A 22-year-old Roblox developer behind Sonic Eclipse Online groomed a 15-year-old girl from Indiana via the platform, paying her for art and sending gifts. He arranged for an Uber to transport her to New Jersey, where he sexually assaulted her over 8 days. Arrested in May 2022, he pleaded guilty and was sentenced to 15 years in prison. Notably, Roblox had banned his account in 2020 after earlier predatory messages surfaced, yet failed to prevent the subsequent escalation.

2022 - Shane Penczak Conviction: Shane Penczak was sentenced to 13 years for exploiting a 13-year-old boy via Robux transactions and platform communications.

May 2025 - California Kidnapping Case: A 13-year-old girl was groomed, kidnapped, and raped by a predator she met on Roblox. The family filed a lawsuit against Roblox for negligence, claiming the platform failed to protect their daughter.

2025 - Multiple Active Cases:

- One of at least eight ongoing lawsuits alleging platform failures involves a 9-year-old boy who was extorted for explicit content

- A 14-year-old girl was groomed through Roblox, leading to an attempted assault

- Louisiana Attorney General's case cites a suspect using voice-altering technology to pose as a 14-year-old girl while actively using Roblox

Statistical Evidence of Growing Problem

NCMEC Reports Surge: Reports to the National Center for Missing and Exploited Children involving Roblox surged dramatically from 2,973 in 2022 to 13,316 in 2023 - a 348% increase in a single year, indicating poor moderation effectiveness despite increased awareness.
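The percentage figure follows from the two report counts. As a quick check of the arithmetic (the helper name `percent_increase` is just for illustration):

```python
def percent_increase(old: int, new: int) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


increase = percent_increase(2973, 13316)
print(f"{increase:.0f}%")  # prints "348%"
```

Put another way, the 2023 count is roughly 4.5 times the 2022 count.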

Pattern Recognition: The documented cases show consistent patterns:

- Predators using Roblox's chat and private messaging systems to initiate contact

- Virtual currency (Robux) transactions used as grooming tools

- Platform's delayed response to early warning signs

- Failure to prevent escalation from online grooming to physical harm

Legal Landscape Evolution

Federal Lawsuit Patterns: Multiple law firms are now bringing coordinated federal lawsuits against Roblox, with Chicago law firm attorneys Mike Grieco and Steve VanderPorten representing multiple families. These cases allege Roblox has become a "digital hunting ground" for child abusers.

State Attorney General Actions: Louisiana AG Liz Murrill's August 14, 2025 lawsuit is believed to be the first state-level action against Roblox, potentially setting precedent for other states to follow. The lawsuit specifically targets:

- Failure to implement basic safety controls

- Knowingly facilitating distribution of child sexual abuse material

- Failure to provide adequate warnings to parents and children

Business Impact Assessment:

- Multiple ongoing federal lawsuits across at least nine states

- State attorney general enforcement action in Louisiana

- Congressional attention from Representative Ro Khanna and potentially Senator Ted Cruz

- Potential federal enforcement under KOSA/COPPA 2.0 if passed

Platform Response Analysis

Reactive vs. Proactive Measures: Roblox's response pattern shows consistent reactive rather than proactive safety measures:

- Account bans often occur only after law enforcement involvement

- Safety protocol enhancements typically follow public exposure of incidents

- Company statements emphasize cooperation with law enforcement rather than prevention

The Schlep Controversy in Context: Against this backdrop, Roblox's aggressive legal action against Schlep appears particularly problematic from a public relations and legal liability perspective. The company's decision to ban a user whose work reportedly contributed to six arrests, while the underlying predator problems persist, highlights potentially misaligned priorities.

Compliance Implications for Other Platforms

Risk Assessment Lessons:

- Early Warning Systems: Platforms must implement proactive detection rather than reactive reporting

- Virtual Economy Controls: In-game currencies and transactions require enhanced monitoring for grooming behaviors

- Age Verification: Robust age verification becomes critical for platforms with significant minor user populations

- Law Enforcement Cooperation: Platforms should work with rather than against legitimate safety advocates

Legal Precedent Concerns: The Roblox situation demonstrates how accumulated safety failures can result in:

- Multi-jurisdictional legal exposure

- State attorney general enforcement actions

- Federal congressional attention

- Coordinated plaintiff litigation strategies

- Significant reputational damage

Regulatory Response Acceleration

Legislative Momentum: The Roblox cases have directly influenced the urgency around:

- KOSA/COPPA 2.0 passage in Congress

- State-level protective legislation

- International regulatory coordination (UK OSA, EU DSA)

Enforcement Priority Shifts: Regulators are increasingly viewing child safety as a top enforcement priority, with Roblox serving as a cautionary example of the consequences of inadequate self-regulation.

Conclusion

The July-August 2025 legislative wave represents one of the most significant expansions of online child safety regulation to date. The convergence of UK enforcement, EU guidelines, and US federal momentum, combined with aggressive state-level action, creates a new compliance paradigm requiring immediate attention and ongoing adaptation.

Organizations operating in the digital space must treat child safety compliance not as a regulatory burden, but as a fundamental operational requirement in the modern internet ecosystem. The cases of Roblox and other platforms demonstrate that failure to proactively address these requirements can result in significant legal, financial, and reputational consequences.

The regulatory landscape will continue evolving rapidly through 2025 and beyond, requiring organizations to maintain agile compliance frameworks capable of adapting to new requirements across multiple jurisdictions. Success in this environment demands not just meeting minimum legal requirements, but establishing child safety as a core business principle and competitive advantage.

This compliance guide will be updated as new developments emerge. For the most current information, organizations should consult with qualified legal counsel and monitor regulatory authority announcements in relevant jurisdictions.