Digital Compliance Alert: UK Online Safety Act and EU Digital Services Act Cross-Border Impact Analysis

Executive Summary: Two major digital regulatory frameworks have reached critical implementation phases that demand immediate compliance attention from global platforms. The UK's Online Safety Act entered its age verification enforcement phase on July 25, 2025, while escalating tensions between US officials and EU regulators over the Digital Services Act highlight growing concerns about extraterritorial censorship effects.

UK Online Safety Act: Age Verification Requirements Now in Effect

What Changed on July 25, 2025

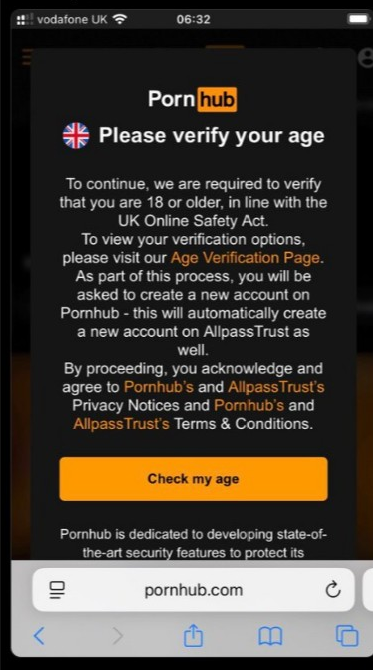

As of July 25, 2025, all sites and apps that allow pornography must have strong age checks in place to ensure children cannot access it or other harmful content. This is arguably the most significant change to how adults access online content in the UK since the internet's mainstream adoption.

Key Requirements:

- Self-declaration is no longer sufficient: clicking "I am over 18" does not prove age, and sites such as Pornhub must now verify age under the Online Safety Act

- Platforms face fines of up to £18 million (€20 million) or 10 per cent of their global revenue, whichever is greater

- Services that publish their own pornographic content (defined as 'Part 5 Services') including certain Generative AI tools, must begin taking steps immediately to introduce robust age checks
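To make the penalty ceiling above concrete, here is a minimal sketch of the arithmetic in Python. It assumes the greater of the two amounts applies and works in whole pounds; the function name and the example revenue figures are hypothetical and chosen only for illustration.

```python
# Penalty ceiling figures as stated in the requirements above.
OSA_FIXED_CAP_GBP = 18_000_000      # £18 million
OSA_REVENUE_SHARE_PERCENT = 10      # 10 per cent of global revenue


def osa_max_penalty_gbp(global_revenue_gbp: int) -> int:
    """Return the penalty ceiling in whole pounds.

    Assumption: the ceiling is the greater of the fixed cap and the
    revenue-based amount.
    """
    if global_revenue_gbp < 0:
        raise ValueError("revenue must be non-negative")
    revenue_based = global_revenue_gbp * OSA_REVENUE_SHARE_PERCENT // 100
    return max(OSA_FIXED_CAP_GBP, revenue_based)


if __name__ == "__main__":
    # Hypothetical revenue figures, not drawn from any real platform.
    for revenue in (50_000_000, 2_000_000_000):
        print(f"revenue £{revenue:,} -> ceiling £{osa_max_penalty_gbp(revenue):,}")
```

For smaller platforms the fixed cap dominates; the revenue-based amount only becomes the binding figure once global revenue passes £180 million.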

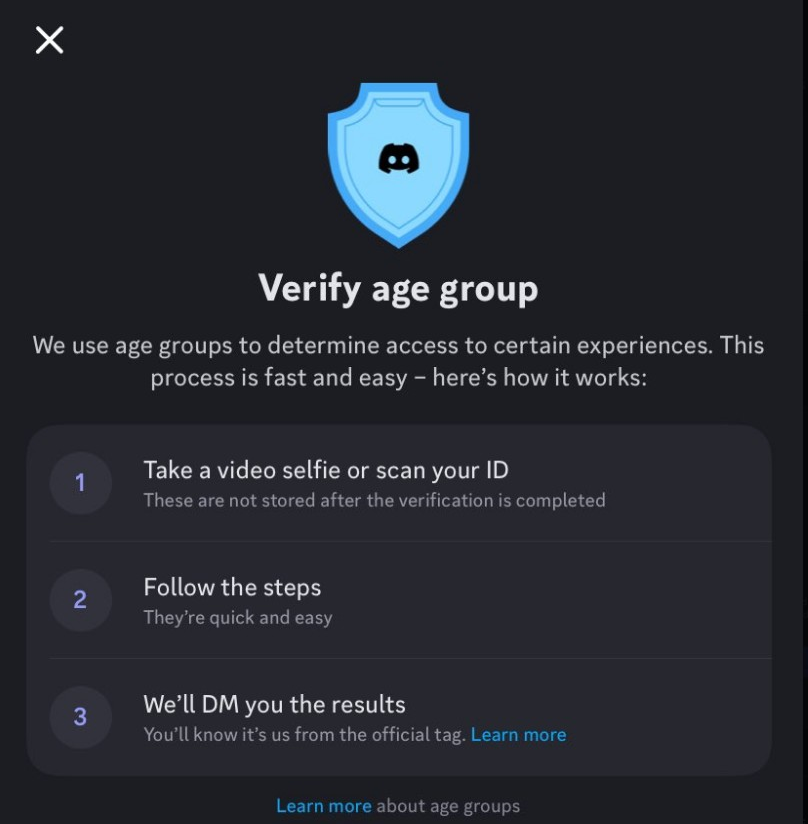

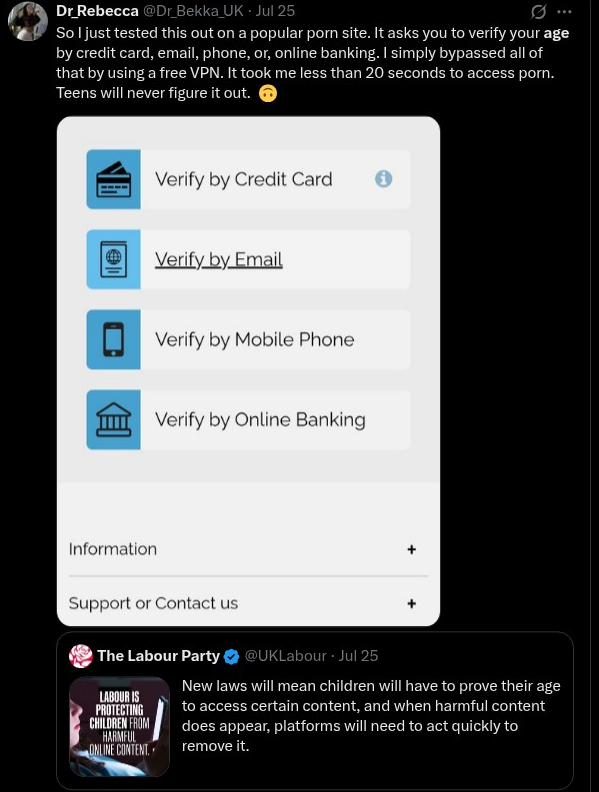

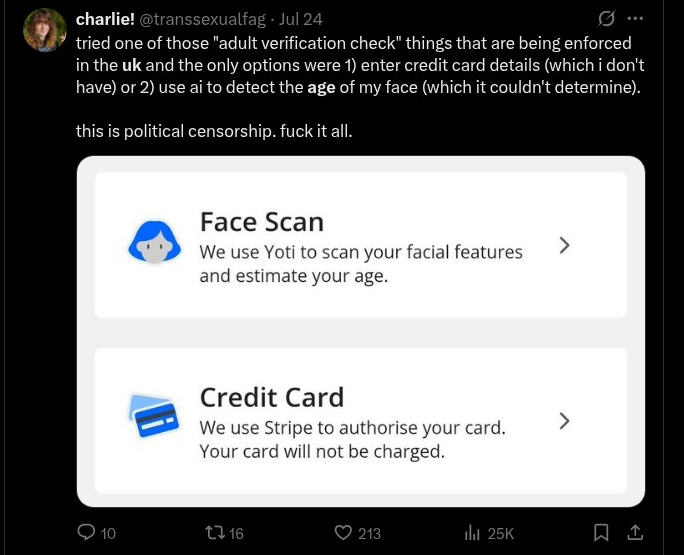

Approved Age Verification Methods

The UK communications regulator Ofcom has approved several verification methods:

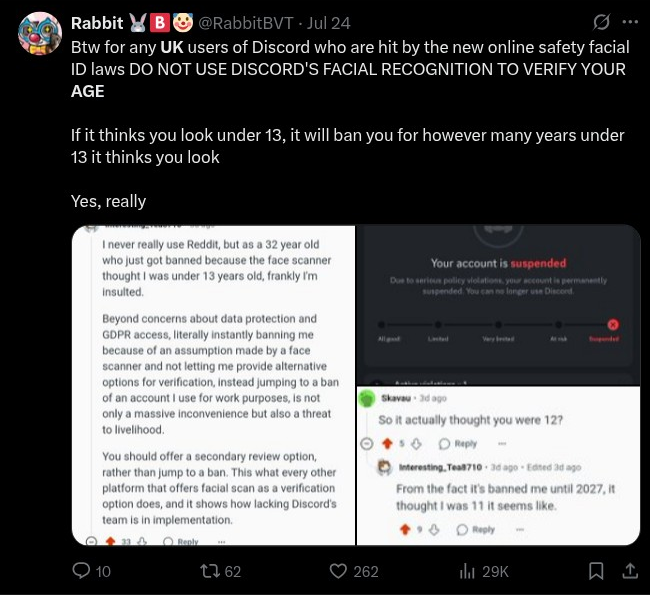

- Facial age estimation - Using photo or video analysis

- Financial verification - Credit card, banking, or open banking checks

- Government ID matching - Photo ID compared against selfies

- Mobile network operator checks - Using SIM registration and account data to confirm that the mobile number is assigned to an adult

- Digital identity wallets - Containing proof of age
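A compliance team might encode the list above as a configuration check. The following Python sketch is one hedged way to do that: the enum, its member names, and the `unaccepted_methods` helper are hypothetical, and the accepted set mirrors only the methods named in this article, not the full text of Ofcom's guidance.

```python
from enum import Enum
from typing import Iterable, List


class AgeCheckMethod(Enum):
    """Hypothetical labels for the methods discussed in this article."""
    FACIAL_AGE_ESTIMATION = "facial_age_estimation"
    FINANCIAL_VERIFICATION = "financial_verification"
    GOVERNMENT_ID_MATCHING = "government_id_matching"
    MOBILE_OPERATOR_CHECK = "mobile_operator_check"
    DIGITAL_IDENTITY_WALLET = "digital_identity_wallet"
    SELF_DECLARATION = "self_declaration"  # the old "I am over 18" click


# Every method listed above is accepted; self-declaration is not.
ACCEPTED_METHODS = frozenset(AgeCheckMethod) - {AgeCheckMethod.SELF_DECLARATION}


def unaccepted_methods(configured: Iterable[AgeCheckMethod]) -> List[str]:
    """Return the configured methods that fall outside the accepted set."""
    return sorted(m.value for m in configured if m not in ACCEPTED_METHODS)


if __name__ == "__main__":
    site_config = [
        AgeCheckMethod.SELF_DECLARATION,
        AgeCheckMethod.FACIAL_AGE_ESTIMATION,
    ]
    print(unaccepted_methods(site_config))
```

A check like this only flags configuration gaps. Whether a given vendor's implementation is robust enough remains a legal and technical judgment.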

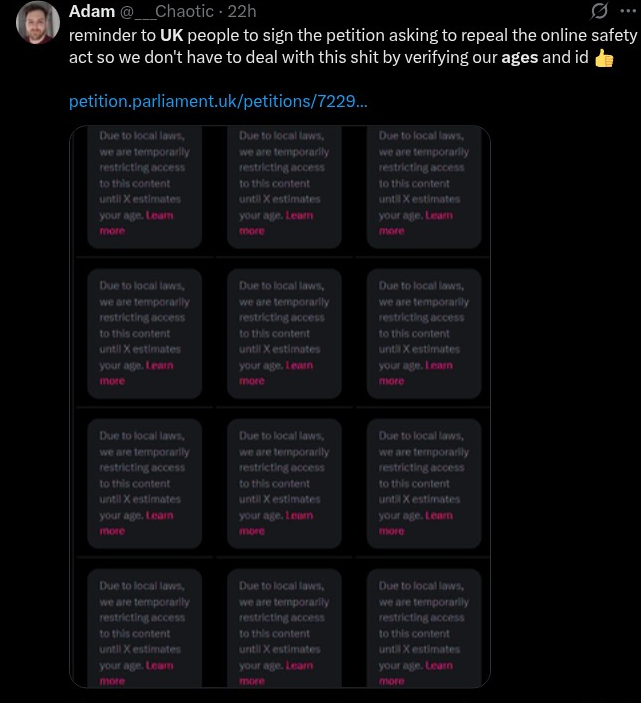

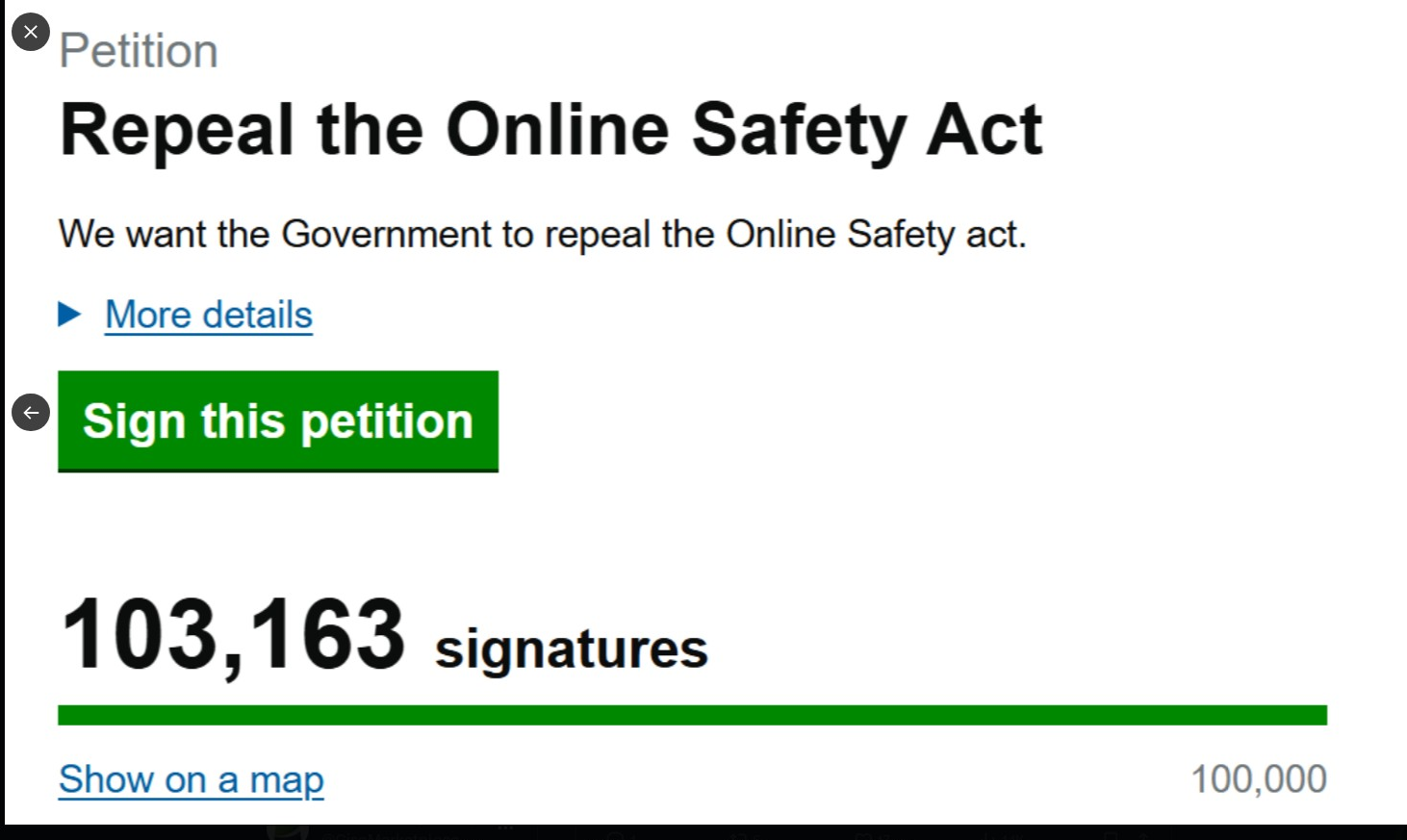

reminder to UK people to sign the petition asking to repeal the online safety act so we don't have to deal with this shit by verifying our ages and id 👍https://t.co/imR0tWRBA4 pic.twitter.com/ORQEvyDKhM

— Adam (@___Chaotic) July 25, 2025

Platform Responses and Compliance Strategies

Major Platform Actions:

- Pornhub: The site prompts visitors 'to verify that you are 18 or older, in line with the UK Online Safety Act', using third-party verification through AllpassTrust

- Reddit: Already implemented verification through Persona requiring government ID

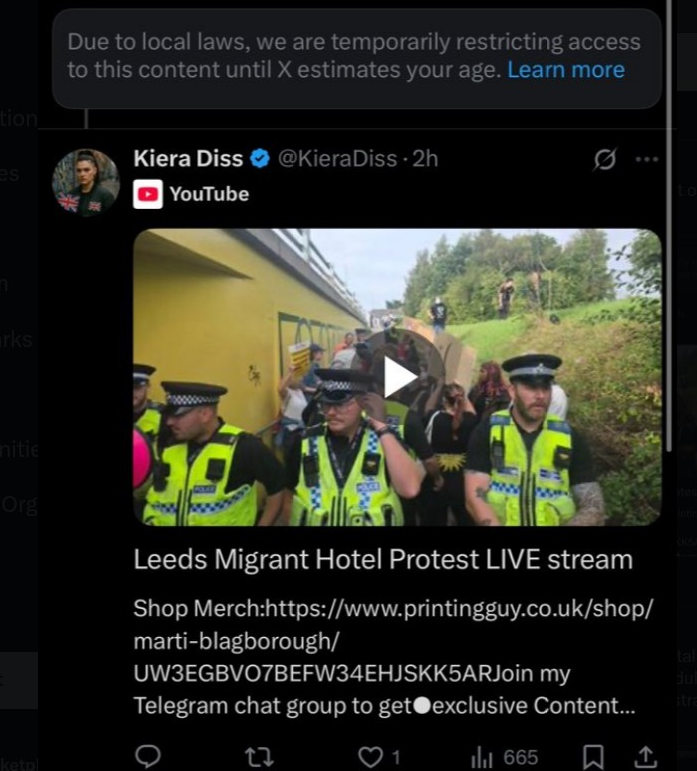

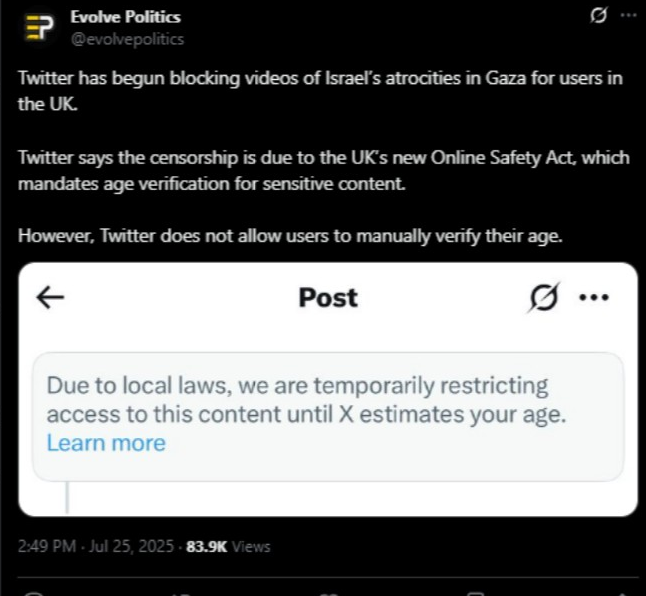

- X (Twitter): Planning facial age estimation, ID verification, and email-based age estimation

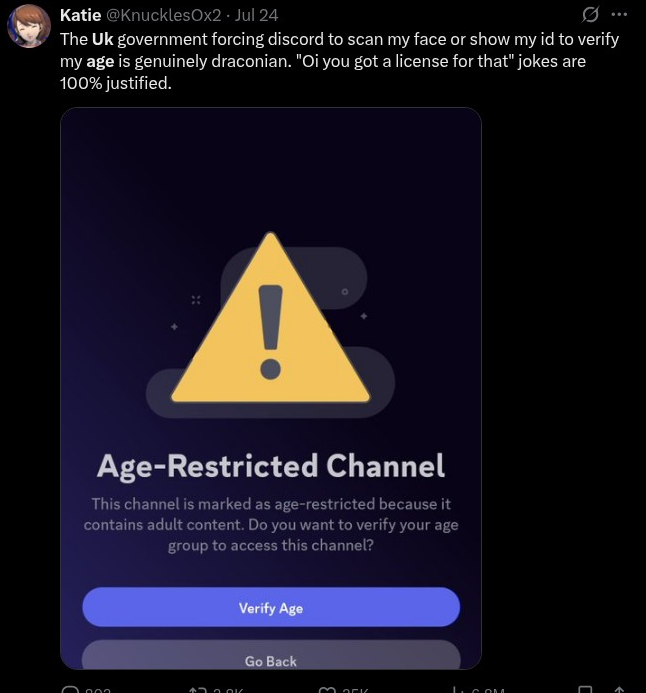

- Discord, Grindr, Hinge: All implementing age verification systems

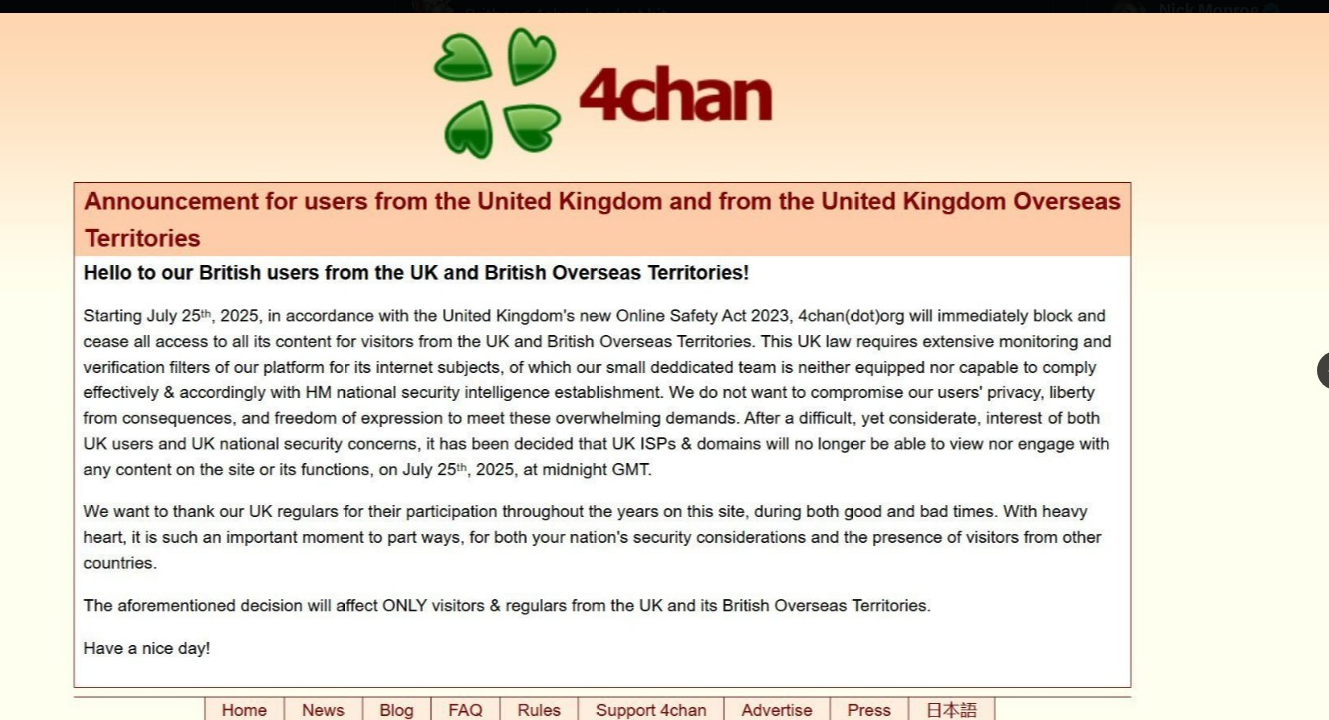

Platform Withdrawals: Some platforms chose market exit over compliance:

- Bellesa: "It is unfortunately no longer possible for us to remain compliant to United Kingdom law while providing access to our content here, and have made the difficult decision to disable Bellesa.co in United Kingdom"

- BitChute: Discontinuing services for UK residents

Broader Scope Beyond Adult Content

The Act's reach extends far beyond pornographic content. Any site that allows users to share content or interact with each other is in scope of the Online Safety Act, including:

- Social media platforms (Facebook, Instagram, TikTok)

- Video sharing platforms (YouTube)

- Dating applications

- Online forums and messaging services

- File sharing and cloud storage services

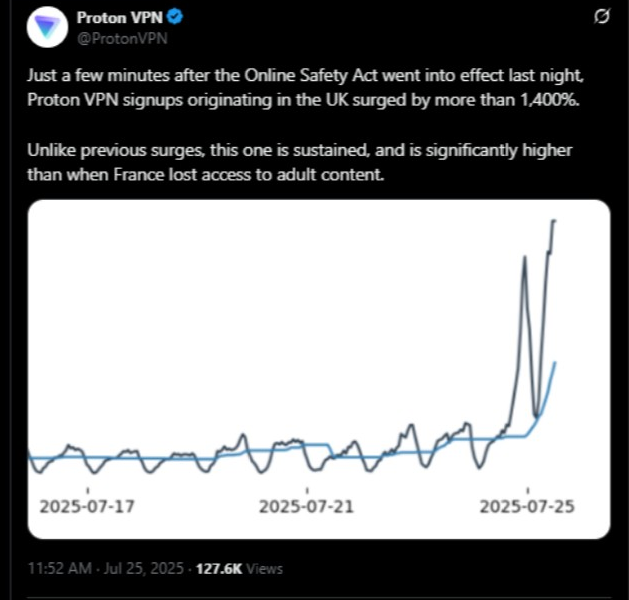

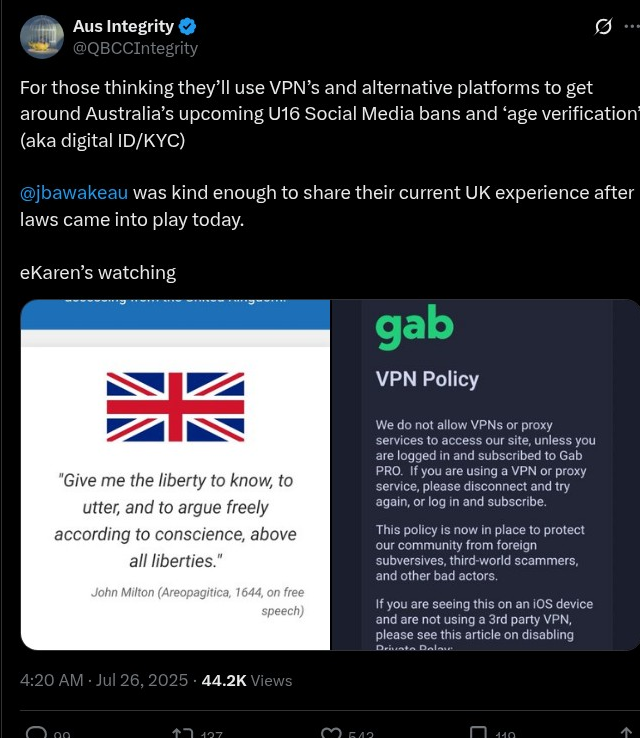

VPN Usage and Circumvention Concerns

Using a VPN is legal in the UK, although a platform's terms of service may prohibit using one to bypass age verification. The Online Safety Act, whose age verification duties took effect in July 2025, does not prohibit individual users from using VPNs to bypass age checks. However, Ofcom has said platforms must not host, share, or permit content encouraging the use of VPNs to bypass age checks.

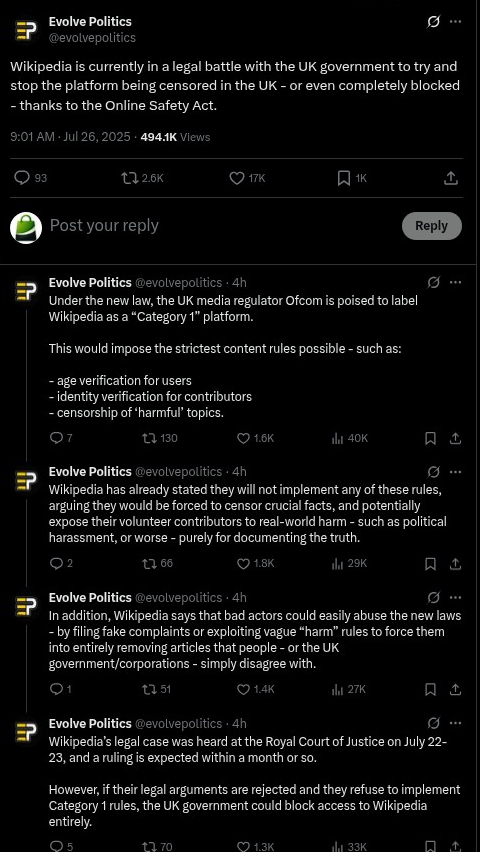

Wikipedia's Legal Challenge

The Wikimedia Foundation is seeking a judicial review of the Online Safety Act (OSA), claiming that its "Categorisation Regulations" potentially place Wikipedia, its staff, and its users under the most serious Category 1 classification. This challenge highlights concerns that the Act's broad scope could impact educational and informational platforms beyond its intended targets.

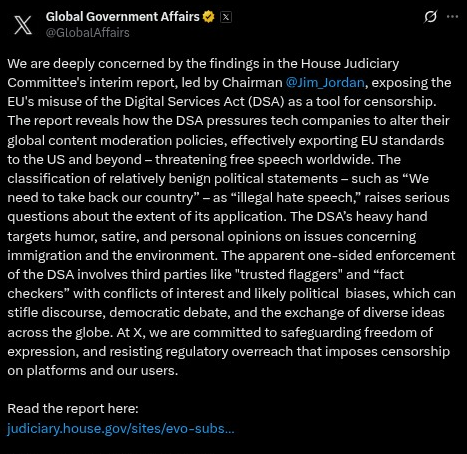

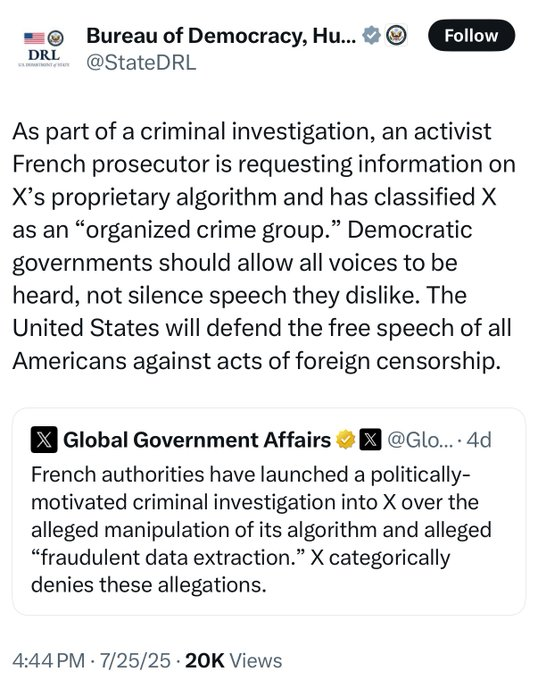

EU Digital Services Act: US Political Tensions Escalate

Congressional Investigation Findings

The US House Judiciary Committee released a comprehensive report on July 25, 2025, titled "The Foreign Censorship Threat: How the European Union's Digital Services Act Compels Global Censorship and Infringes on American Free Speech".

THE EU CENSORSHIP FILES

“We need to take back our country” = ILLEGAL “hate” speech in Europe.

New secret docs show how EU regulators are pushing Big Tech behind closed doors to censor conservatives.

Thread: pic.twitter.com/Og8VGlAwyM

— Rep. Jim Jordan (@Jim_Jordan) July 25, 2025

Key Congressional Findings:

- Global Policy Impact: The DSA is forcing companies to change their global content moderation policies. Nonpublic materials obtained by the Committee from a May 2025 Commission workshop make clear that Commission regulators expect platforms to change their worldwide terms and conditions to comply with DSA obligations

- Political Speech Targeting: The DSA is being used to censor political speech, including humor and satire. Documents produced to the Committee under subpoena show that European censors target core political speech that is neither harmful nor illegal

- Definitional Concerns: The Commission's workshop labeled a hypothetical social media post stating "we need to take back our country"—a common, anodyne political statement—as "illegal hate speech" that platforms are required to censor

Brussels Effect on Global Content Moderation

Major social media platforms generally have one set of terms and conditions that apply worldwide. The Committee argues that the DSA therefore effectively requires platforms to change content moderation policies that apply in the United States, and to apply EU-mandated standards to content posted by American citizens.

The "Brussels Effect" phenomenon means EU regulations often become de facto global standards. The General Data Protection Regulation (GDPR), implemented in the EU in 2018, reshaped data privacy on a global scale. Many companies chose to apply its stringent rules worldwide, rather than create region-specific policies.

DSA Enforcement Mechanisms

Financial Penalties: Platforms that fall foul of the DSA face ruinous fines of up to six per cent of their global annual turnover.

Compliance Pressure: With 'systemic risks' so poorly defined in law, platforms are left to second-guess what might constitute a compliance breach. No company wants to be the first regulatory test case.

Current EU Enforcement Actions

In December 2023, the Commission opened formal infringement proceedings against X, a designated very large online platform (VLOP), on several grounds, including suspected breaches of its obligations on data access for researchers. In July 2024, the Commission issued preliminary findings that X is in breach of the DSA on dark patterns, advertising transparency, and data access for researchers.

Additional ongoing proceedings target:

- TikTok (multiple provisions under investigation)

- AliExpress and Meta (proceedings opened March/May 2024)

- Temu (proceedings started October 2024)

Cross-Border Compliance Implications

Overlapping Regulatory Pressure

Organizations operating in both jurisdictions face compound compliance burdens:

- Content Moderation: EU DSA requirements for "systemic risk" mitigation may conflict with UK free speech expectations

- Age Verification: UK OSA requirements add operational complexity beyond EU baseline protections

- Data Handling: Both frameworks impose transparency and data access requirements with different technical specifications

Risk Assessment Framework

High-Risk Indicators:

- Platforms with at least 45 million average monthly active EU users (DSA VLOP designation)

- UK user-to-user services likely to be accessed by children (OSA children's safety duties), with the largest services also at risk of Category 1 designation

- Services hosting adult content or user-generated content

Compliance Priority Matrix:

- Immediate Action Required: Adult content platforms in UK jurisdiction

- High Priority: Social media platforms with significant EU/UK user bases

- Medium Priority: Educational platforms and specialized services

- Monitoring Required: Infrastructure and B2B services
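The indicators and priority matrix above can be read as a simple first-match rule set. The Python sketch below shows one way to express that, purely as an illustration: the `ServiceProfile` fields, the function names, and the example services are all hypothetical, and the VLOP check assumes the threshold is met at or above 45 million users, following the DSA's "equal to or higher than" wording.

```python
from dataclasses import dataclass

# DSA VLOP threshold cited above; treated here as "at or above" (assumption).
VLOP_MONTHLY_EU_USERS = 45_000_000


@dataclass(frozen=True)
class ServiceProfile:
    """Hypothetical self-assessment record; every field is an assumption."""
    name: str
    serves_uk: bool = False
    hosts_adult_content: bool = False
    is_social_media: bool = False
    significant_eu_uk_user_base: bool = False
    is_educational_or_specialized: bool = False
    monthly_eu_users: int = 0


def meets_vlop_threshold(profile: ServiceProfile) -> bool:
    """True when the EU user count reaches the VLOP designation threshold."""
    return profile.monthly_eu_users >= VLOP_MONTHLY_EU_USERS


def compliance_priority(profile: ServiceProfile) -> str:
    """Map a profile to a tier of the matrix; the most urgent match wins."""
    if profile.hosts_adult_content and profile.serves_uk:
        return "Immediate Action Required"
    if profile.is_social_media and profile.significant_eu_uk_user_base:
        return "High Priority"
    if profile.is_educational_or_specialized:
        return "Medium Priority"
    # Infrastructure, B2B, and anything unmatched falls to the lowest tier.
    return "Monitoring Required"


if __name__ == "__main__":
    examples = [
        ServiceProfile("adult-site", serves_uk=True, hosts_adult_content=True),
        ServiceProfile("social-app", is_social_media=True,
                       significant_eu_uk_user_base=True,
                       monthly_eu_users=60_000_000),
        ServiceProfile("b2b-cdn"),
    ]
    for p in examples:
        print(p.name, "|", compliance_priority(p), "| VLOP:", meets_vlop_threshold(p))
```

Ordering the rules from most to least urgent means a service matching several tiers is always assigned the highest one. What counts as a "significant" user base is left to the caller, because the matrix does not define it.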

Recommended Compliance Actions

For UK Online Safety Act:

- Conduct Children's Access Assessment - The deadline was April 16, 2025, but compliance is an ongoing obligation

- Implement Age Verification - If the service hosts adult content or is likely to be accessed by children

- Review Content Policies - Ensure coverage of illegal content, self-harm, and harmful material

- Establish Transparency Reporting - Prepare for annual reporting requirements

For EU Digital Services Act:

- Assess VLOP/VLOSE Status - Determine whether the platform has at least 45 million average monthly active EU users

- Conduct Risk Assessment - Identify systemic risks to civic discourse, electoral processes, public health

- Implement Mitigation Measures - Deploy technical and procedural safeguards

- Prepare Transparency Reports - The deadline for annual reports was February 16, 2025; plan for the next reporting cycle

Cross-Border Strategy:

- Legal Review - Assess compatibility of UK and EU compliance measures

- Technical Implementation - Design systems accommodating both regulatory frameworks

- Policy Harmonization - Develop unified approach where possible, jurisdiction-specific measures where necessary

- Government Relations - Monitor evolving US-EU-UK regulatory tensions and diplomatic developments

Conclusion

The intersection of UK Online Safety Act enforcement and EU Digital Services Act implementation creates a complex regulatory landscape requiring sophisticated compliance strategies. Organizations must navigate age verification requirements, content moderation obligations, and extraterritorial regulatory effects while managing operational complexity and political tensions between major jurisdictions.

The coming months will likely see continued refinement of these frameworks through enforcement actions, court challenges, and diplomatic negotiations. Compliance teams should maintain agile approaches capable of adapting to rapid regulatory evolution while ensuring fundamental protections for users and platform operations.

Next Steps:

- Monitor Ofcom's Category 1 service designations (expected Summer 2025)

- Track US Congressional investigation developments

- Prepare for increased enforcement activity across both jurisdictions

- Assess platform-specific risk exposure and mitigation strategies

This analysis is based on publicly available information as of July 26, 2025. Regulatory requirements continue to evolve rapidly, and organizations should consult legal counsel for jurisdiction-specific compliance guidance.