Briefing on Global Digital Regulation and Surveillance Trends

Executive Summary

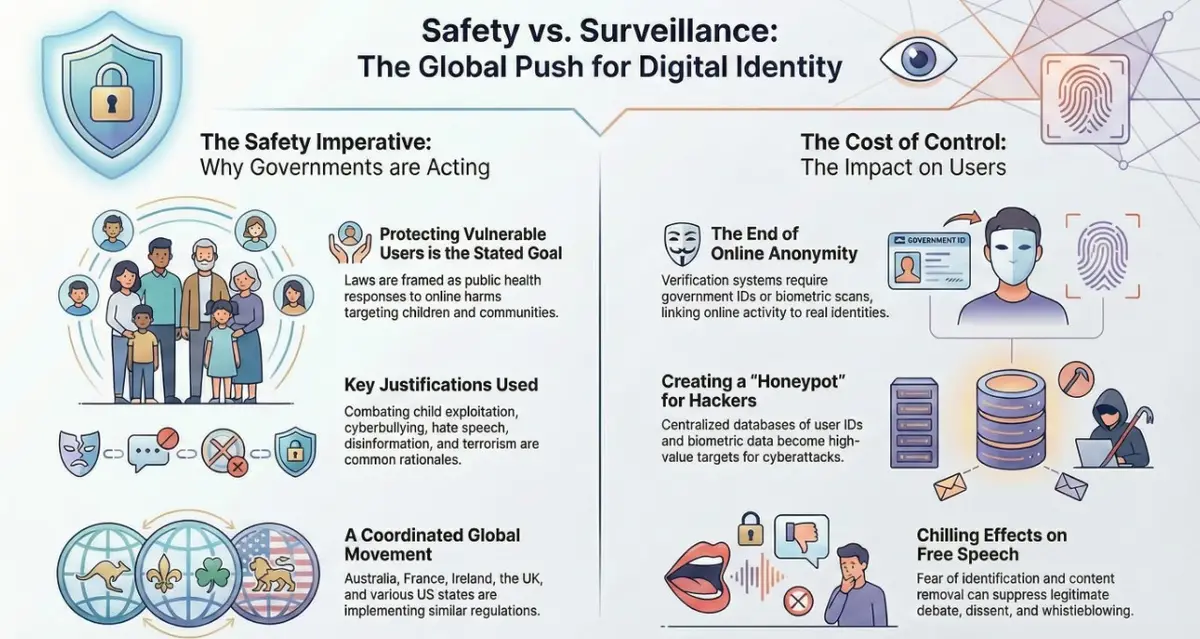

A global wave of digital regulation, ostensibly aimed at protecting children and combating hate speech and disinformation, is fundamentally reshaping the internet's architecture and principles. Three trends predominate: mandatory age and identity verification systems, the systematic erosion of online anonymity, and increased platform liability for user-generated content. Australia and the European Union are at the forefront of this movement. Their comprehensive regulatory frameworks, such as Australia's multi-layered age verification regime and the EU's Digital Services Act, are setting powerful precedents for other nations, including the United States.

These regulations are fostering interlocking surveillance infrastructures built on biometric data and centralized identity databases, raising significant concerns for privacy, cybersecurity, and free expression. Their expansion is producing direct conflicts with the United States, where Congress is challenging the extraterritorial reach of Australian and EU laws as a profound threat to American free speech principles. Online platforms are consequently caught between conflicting and often irreconcilable legal regimes, facing massive potential fines and complex technical hurdles, while a series of high-stakes legal battles has begun to test the constitutionality of these measures.

I. The Global Push for Age and Identity Verification

A coordinated international movement is underway to mandate age and identity verification as a prerequisite for accessing online services, particularly social media. Framed as a necessary measure to protect children, these laws require platforms to build and deploy comprehensive systems that check the age of all users, not just minors.

A. Australia's Multi-Layered Regime: The "World-First" Experiment

Australia has launched the world's most comprehensive digital age verification infrastructure through a phased, multi-layered approach:

- Layer 1: Social Media Minimum Age (SMMA) (Effective Dec 10, 2025): The Online Safety Amendment (Social Media Minimum Age) Act 2024 prohibits Australians under 16 from holding accounts on ten designated platforms (Facebook, Instagram, TikTok, X, Reddit, YouTube, Snapchat, Threads, Twitch, and Kick). Platforms that fail to take reasonable steps to prevent under-16s from holding accounts face fines of up to A$49.5 million.

- Layer 2: Search Engine Age Verification (Effective Dec 27, 2025): The Internet Search Engine Services Online Safety Code requires search engines to implement age assurance for all logged-in users and apply maximum "safe search" filters by default for unverified or logged-out users. Full compliance is required by June 27, 2026.

- Layer 3: Additional Codes (Effective March 9, 2026): Six more online safety codes will extend these requirements to app distribution platforms, device providers, AI chatbots, and messaging services.

Platforms can use various verification methods, including photo ID scanning, AI-powered facial age estimation, credit card verification, and digital ID systems. However, early results show widespread circumvention by teens using VPNs, false birthdates, and migration to less-regulated apps, creating a "Whack-a-Mole" enforcement challenge.

B. The European Movement

Multiple European nations are pursuing similar restrictions, often explicitly following Australia’s model.

- France: A bill has been proposed to ban social media access for children under 15, escalating from a less-enforceable 2023 parental consent law.

- Denmark: A cross-party agreement was reached to ban social media for children under 15, with implementation expected in mid-2026.

- Other Nations: Italy, Spain, and Germany are all drafting or studying similar age-based restrictions, often planning to leverage national digital wallet systems for verification.

- EU-Wide Coordination: While resisting a blanket EU-wide ban, the European Commission is piloting an age verification app. In November 2025, the European Parliament adopted a non-binding resolution calling for a two-tiered framework that sets age 13 as an absolute minimum and age 16 for unrestricted social media access, to be backed by the EU Digital Identity Wallet.

C. The United States' State-Level Patchwork

In the U.S., several states are enacting their own age verification laws, creating a complex and conflicting compliance landscape.

- Virginia (SB 854): Effective January 1, 2026, this law mandates "commercially reasonable" age verification for all social media users and imposes a strict one-hour daily usage limit on users under 16 (which parents can override).

- Other States: A Texas age verification law was blocked by a federal judge in December 2025 as "likely unconstitutional," while Louisiana and Utah have also pursued stringent verification requirements.

These laws face significant legal challenges. Tech industry group NetChoice has filed a federal lawsuit against Virginia's law, arguing it violates the First Amendment by conditioning access to protected speech on government-approved identification.

D. Global Adoption Status

The trend toward age verification is a global phenomenon, with numerous countries actively developing or implementing similar regulatory frameworks.

| Country/Region | Status | Key Details |
|---|---|---|
| Australia | Active | Social media ban for under-16s and search engine verification active since Dec 2025. |
| United Kingdom | Active | Age verification enforcement began July 2025 under the Online Safety Act. |
| European Union | In Progress | Parliament voted for a 13/16 age framework via EU Digital Wallet; Commission piloting app. |
| France | Pending | Legislation to ban social media for under-15s proposed in Jan 2026. |
| Brazil | Pending | Digital ECA requires age verification "at each access attempt"; effective March 2026. |
| Malaysia | Announced | Ban on users under 16 via eKYC systems to begin Jan 1, 2026. |
| New Zealand | Pending | Bill introduced in May 2025 proposes a 16-year age limit. |
| Singapore | Announced | Plans to age-restrict social media, actively engaging with Australian counterparts. |
| Virginia, USA | Enacted | Law mandating age verification and time limits for minors effective Jan 1, 2026; under legal challenge. |
| Texas, USA | Enjoined | Age verification law blocked by a federal judge in Dec 2025. |

II. The Erosion of Anonymity and Expansion of Surveillance

The regulations being enacted extend far beyond child safety, creating the technical and legal foundation for a de-anonymized internet and expanding state surveillance capabilities.

A. From Child Safety to Universal Identification

The push for age verification for minors has become a Trojan horse for universal identity verification systems.

- Ireland's Proposal: During its EU Council presidency starting in July 2026, Ireland plans to champion mandatory identity verification for all social media users across the EU to end online anonymity, citing the need to combat bots and disinformation.

- Victoria, Australia's Proposal: Following a terror attack, the state of Victoria has proposed legislation that would compel social media platforms to identify any user accused of "vilification" (hate speech). If a platform cannot identify the user, it becomes legally liable for damages. This creates a powerful incentive for platforms to collect verified identity data from all users.

B. New State Surveillance Powers

Crisis events are being leveraged to pass sweeping legislation that expands police surveillance powers over public and digital spaces.

- New South Wales (NSW), Australia: In response to the December 2025 Bondi Beach terror attack, NSW passed an emergency anti-terror law that allows police to order the removal of face coverings at protests. This provision directly facilitates biometric data capture by the state's expansive facial recognition infrastructure.

- Victoria, Australia: The state's proposed anti-vilification law would remove prosecutorial oversight, allowing police to independently pursue criminal charges for speech-based offenses that carry penalties of up to five years in prison. The plan also includes a National Hate Crimes and Incidents Database.

C. The Cybersecurity and Privacy Catastrophe

These mandatory identification regimes are criticized by cybersecurity experts for creating unprecedented risks.

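- Creation of "Honeypots": Centralized databases containing verified government IDs, biometric data, and detailed behavioral profiles linked to real-world identities become high-value targets for criminals and state-sponsored hackers. The historical record is rife with massive data breaches of far less sensitive information, and age checks have already been breached: in October 2025, Discord disclosed that a compromise of a third-party customer-support provider had exposed images of government IDs that users submitted during age verification appeals.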

- Consequences of Breaches: A compromise of these databases could lead to identity theft at scale, sophisticated phishing campaigns, financial fraud, permanent doxxing, and political persecution.

- Threat to Protected Activities: The elimination of anonymity jeopardizes the safety of whistleblowers, security researchers reporting vulnerabilities, domestic abuse survivors, and political dissidents.

III. New Regulatory Frameworks and Enforcement Mechanisms

Governments are creating novel legal tools to enforce content moderation and identification requirements, fundamentally altering the relationship between states, platforms, and users.

A. The EU's Digital Services Act (DSA)

The DSA establishes powerful enforcement mechanisms that are already being used to shape online speech.

- The "Trusted Flagger" System: This system grants designated organizations priority status to flag "potentially illegal" content for expedited removal. The ambiguous standard of "potentially" illegal shifts the burden to platforms, creating immense pressure for preemptive content removal to avoid fines of up to 6% of global revenue.

- The "Brussels Effect": As platforms deploy systems to comply with the DSA, these European rules often become de facto global standards, exporting EU content norms worldwide.

B. Regulatory Censorship in Practice

The power of these new frameworks is being demonstrated through government actions that critics label "regulatory censorship."

- Poland's DSA Request: On December 29, 2025, Poland's deputy digital minister requested that the European Commission take "interim measures" to limit the spread of AI-generated TikTok videos promoting "Polexit." The request targeted a specific political viewpoint, not just coordinated inauthentic behavior. TikTok removed the account after the request became public but before any formal investigation, demonstrating preemptive compliance under regulatory pressure.

- US COVID-19 Censorship Comparison: This dynamic mirrors the US government's pressure campaign on social media companies during the COVID-19 pandemic to suppress "malinformation," which included true but politically inconvenient facts, satire, and scientific debate. Multiple federal courts found the administration's actions violated the First Amendment, although the Supreme Court later reversed on standing grounds in Murthy v. Missouri (2024) without deciding the merits.

C. Platform Liability and Corporate Behavior

Litigation and investigations reveal that platform business models can prioritize profit over user safety, while new laws seek to shift liability.

- U.S. Virgin Islands Lawsuit Against Meta: A groundbreaking lawsuit filed on December 30, 2025, alleges that Meta knowingly profits from fraudulent advertising. A November 2025 Reuters investigation, cited in the complaint, revealed that Meta internally projected approximately $16 billion (10% of its 2024 revenue) would come from fraudulent ads. Internal documents showed that Meta banned advertisers only when its systems were at least 95% certain they were committing fraud, and that it treated regulatory fines as a manageable business expense.

- Victoria's Liability Model: The state's proposal makes platforms liable for civil damages in vilification cases if they cannot identify the accused user, directly tying a platform's financial risk to its ability to de-anonymize its users.

IV. Transatlantic Conflict and Constitutional Challenges

The global push for digital control has created significant friction between different legal and philosophical traditions, leading to diplomatic clashes and constitutional court battles.

A. The US Congress vs. Australia's eSafety Commissioner

The U.S. House Judiciary Committee has threatened to compel testimony from Australia's eSafety Commissioner, Julie Inman Grant, a U.S. citizen, over allegations of implementing a "global censorship regime." The committee's core concerns include:

- Extraterritorial Takedown Demands: eSafety's attempts to mandate the global removal of content, rather than geo-blocking it for Australian users.

- VPN Circumvention: eSafety's formal requests that platforms detail how they will mitigate user circumvention of Australian laws via VPNs, which the committee argues is a backdoor to demanding global enforcement.

- Coordinated Pressure: Internal correspondence shows eSafety directing American companies to publish pre-written compliance statements and demanding meetings to discuss non-compliance.

This confrontation highlights a fundamental clash between Australia's prioritization of online safety and the American constitutional principle of protecting free speech from government interference.

B. Legal and Constitutional Battles

The new wave of regulations is being challenged in courts around the world.

- Australia: Reddit Inc. and the rights group Digital Freedom Project have filed challenges in Australia's High Court against the under-16 social media ban, arguing it violates the implied freedom of political communication. A preliminary hearing is scheduled for February 2026.

- United States: The NetChoice lawsuit against Virginia's SB 854 is one of several First Amendment challenges to state-level age verification laws.

These cases will serve as critical tests of whether governments can legally mandate identity verification as a condition for accessing constitutionally protected speech online.

V. Conclusion: Key Themes and Future Outlook

The global regulatory landscape is at a pivotal crossroads. The drive to protect children and combat online harms is being used to justify the creation of a permission-based internet, fundamentally altering its original architecture of open access. This shift is characterized by the normalization of surveillance infrastructure, where mandatory identity verification becomes a standard feature of online life.

The justifications for these laws often appear disconnected from their practical effects. For example, the perpetrators of the Bondi Beach attack that spurred new surveillance laws in NSW and Victoria were already known to authorities; the failure was one of intelligence, not a lack of surveillance capability. The new laws build broad surveillance systems that affect all citizens while doing little to address the specific security gaps they claim to solve.

This trend is forcing a global reckoning between competing values: national sovereignty versus the borderless internet, child safety versus privacy and free expression. The outcomes of the ongoing legal, diplomatic, and legislative battles will determine whether the internet fractures into a series of national intranets with digital checkpoints at their borders, or whether principles of anonymity, privacy, and open discourse can be preserved.