Australia's World-First Social Media Ban: What's Really Happening on December 10, 2025

Australia is about to implement the world's first nationwide social media ban for users under 16, and the clock is ticking. With Meta already beginning to remove teenage accounts from Instagram and Facebook starting December 4, and the full law taking effect on December 10, 2025, this controversial legislation is reshaping how young Australians interact with the digital world.

The Law: What's Actually Being Banned

The Online Safety Amendment (Social Media Minimum Age) Act 2024, which passed through Parliament in November 2024, establishes a mandatory minimum age of 16 for social media platform accounts. Despite being commonly called a "ban," Australian officials prefer to characterize it as an "age restriction" or "delay" in accessing social media accounts.

The distinction is important: the law doesn't penalize young people or their families for accessing age-restricted platforms. Instead, it places the entire burden on social media companies, which face fines of up to AU$49.5 million (approximately US$32 million) for systemic failures to prevent underage account creation and maintenance.

Which Platforms Are Affected?

As of November 21, 2025, Australia's eSafety Commissioner has identified the following platforms as age-restricted:

Banned for Under-16s:

- Facebook

- Instagram

- Threads

- X (formerly Twitter)

- TikTok

- Snapchat

- YouTube (regular version)

- Kick

- Twitch

Exempted Platforms:

- Messenger and Messenger Kids

- Discord

- YouTube Kids

- Google Classroom

- Roblox

- Steam and Steam Chat

- GitHub

- LEGO Play

- Kids Helpline

The exemptions focus on platforms whose primary purpose is education, health services, professional networking, or gaming rather than social interaction and content sharing.

The Timeline: How It's Rolling Out

The implementation has been swift and, critics say, rushed:

- November 28, 2024: The bill passed Parliament in just nine days, with the public given only one business day to make submissions

- December 10, 2024: The Act received Royal Assent, becoming law

- May 2025: eSafety Commissioner called for community consultation on implementation guidelines

- December 4, 2025: Meta begins removing under-16 users from Facebook and Instagram

- December 10, 2025: Full enforcement begins; platforms must have "reasonable steps" in place

- December 10, 2026: One-year grace period ends; platforms face full penalties for non-compliance

How Will It Work? The Age Verification Question

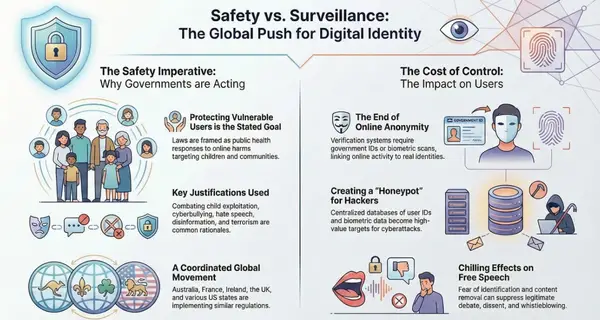

The mechanics of enforcement remain one of the most contentious aspects of the legislation. The law bars platforms from making government-issued identification, including digital ID, the only way to prove age; a reasonable alternative must always be offered. Beyond that, companies must develop their own "reasonable steps" to prevent underage access.

According to regulatory guidance released by the eSafety Commissioner, platforms are expected to use a "multilayered waterfall approach" that may include:

- Age estimation technology: Facial scanning and biometric analysis to estimate user age

- Age inference: Using existing data patterns to determine likely age ranges

- Behavioral analysis: Monitoring account activity for signs of underage use

- Account deactivation: Removing accounts identified as belonging to users under 16

- Re-registration prevention: Blocking attempts to create new accounts after removal
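The layered approach in the list above can be sketched roughly as follows. This is a minimal illustration only, not eSafety's specification or any platform's actual system: the signal names, the two-year buffer around the threshold, and the function names are all hypothetical assumptions chosen to show how a "waterfall" might fall through from one check to the next.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

MINIMUM_AGE = 16
# Hypothetical buffer: ages this close to the threshold are treated as
# inconclusive and passed to the next layer rather than decided outright.
BUFFER_YEARS = 2.0


class Decision(Enum):
    ALLOW = "allow"
    RESTRICT = "restrict"
    ESCALATE = "escalate"  # no layer was conclusive; a further check is needed


@dataclass
class Signals:
    """Hypothetical per-account signals; None means the signal is unavailable."""
    inferred_age: Optional[float] = None   # age inference from existing account data
    estimated_age: Optional[float] = None  # e.g. facial age estimation


def classify(age: Optional[float]) -> Optional[Decision]:
    """Return a decision only when the age is clearly above or below the threshold."""
    if age is None:
        return None
    if age >= MINIMUM_AGE + BUFFER_YEARS:
        return Decision.ALLOW
    if age < MINIMUM_AGE - BUFFER_YEARS:
        return Decision.RESTRICT
    return None  # inside the buffer zone: inconclusive


def waterfall(signals: Signals) -> Decision:
    """Try the least intrusive signal first and fall through while inconclusive."""
    for age in (signals.inferred_age, signals.estimated_age):
        decision = classify(age)
        if decision is not None:
            return decision
    return Decision.ESCALATE
```

In this sketch an account with an inferred age of 16.5 falls inside the buffer, so the decision is deferred to the estimation layer instead of resting on a single noisy signal. If every layer is inconclusive, the account is escalated rather than silently allowed or removed.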

Importantly, the government has stated it is "not asking platforms to verify the age of all users," recognizing that blanket verification may be unreasonable. Some young people may retain access if facial scanning estimates they're over 16, highlighting the potential for significant error margins in enforcement. The privacy and accuracy concerns with biometric age verification have been extensively documented, with error rates of up to 30% in some trials.

What Happens to Existing Accounts?

Meta has already begun notifying affected users to download their contacts, photos, and memories before their accounts are deleted. The company started this process on November 20, 2025, ahead of the December 4 removal date.

According to regulatory guidance, platforms are expected to:

- Provide users with simple methods to download their account information before removal

- Offer formats that allow data transfer to other services

- Consider allowing users to reactivate accounts when they turn 16

- Maintain accessible review mechanisms for users over 16 who are mistakenly restricted
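As a small illustration of the reactivation point in the list above, the date on which a deactivated account could become eligible again is simple to compute from a date of birth. The function names below are hypothetical, and the handling of 29 February birthdays is an assumption, not something the guidance specifies.

```python
from datetime import date

MINIMUM_AGE = 16


def sixteenth_birthday(birth_date: date) -> date:
    """Date on which a person born on birth_date turns 16."""
    try:
        return birth_date.replace(year=birth_date.year + MINIMUM_AGE)
    except ValueError:
        # Born on 29 February and the target year is not a leap year.
        # Assumption: treat 1 March as the birthday in that year.
        return date(birth_date.year + MINIMUM_AGE, 3, 1)


def can_reactivate(birth_date: date, today: date) -> bool:
    """True once the user has reached the minimum age on the given day."""
    return today >= sixteenth_birthday(birth_date)
```

For example, a user born on January 15, 2010 would not be eligible on December 10, 2025, but would be from January 15, 2026.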

However, it remains unclear whether platforms will preserve user content or allow account reactivation once users reach the minimum age.

The Government's Case: Why This Ban?

Prime Minister Anthony Albanese has been vocal about his rationale for the legislation, describing social media as a "scourge" on young people's mental health. The government's key arguments include:

Mental Health Protection

Officials cite research linking excessive social media use to increased rates of anxiety, depression, body image issues, and sleep disruption among adolescents. The Australian Institute of Health and Welfare reports that depression or anxiety among 15-34-year-olds surged from approximately 9% in 2009 to 22% in 2022.

Brain Development Concerns

Adolescence represents a critical period for brain development, making young people particularly susceptible to the negative effects of online environments. Studies suggest that excessive social media use can interfere with healthy cognitive development.

Cyberbullying and Online Predators

The legislation aims to protect children from harassment, harmful content, and potential exploitation by reducing their exposure to unmoderated social interactions.

Data Privacy

By restricting access for younger users, the government hopes to mitigate privacy violations and exploitation of personal data by technology companies that children may not fully understand. Meta has previously faced significant GDPR fines for Instagram's handling of children's data, including a €405 million penalty for revealing phone numbers and email addresses of children aged 13-17.

Public Support

A YouGov poll found that 77% of Australians supported the legislation, and the policy enjoys bipartisan support from both major parties, with the opposition Coalition promising to implement the ban within 100 days if elected.

The Backlash: A Rush to Regulate?

Despite public support, the legislation has faced intense criticism from multiple quarters, including child welfare advocates, mental health professionals, privacy experts, technology companies, and young people themselves.

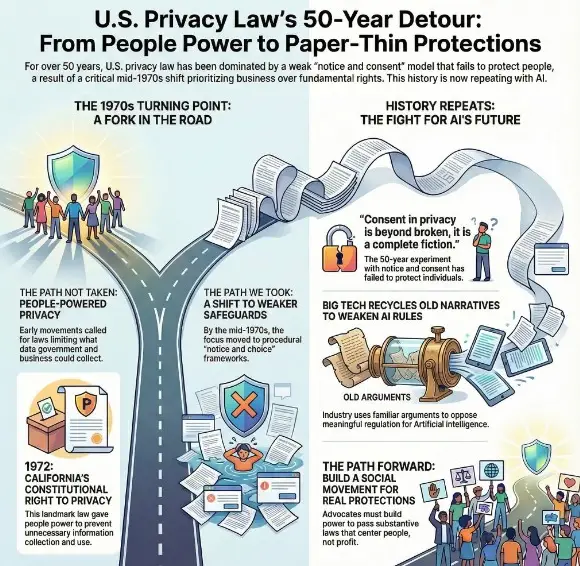

Privacy Concerns for All Australians

Perhaps the most significant concern centers on privacy implications. To comply with the law, platforms must implement age verification systems that could require all Australian users—not just children—to prove their age.

Associate Professor Katharine Kemp from UNSW Law & Justice warns that verification alternatives "can be wildly inaccurate or present security concerns." She points to algorithms that analyze user content and messages, or use biometric information like face scans, as creating "major privacy concerns for all internet users, including children."

Faith Gordon, an associate professor at the Australian National University's School of Law, notes that Australian authorities have "no transparent framework" for auditing tech companies' age verification systems, creating a "real risk of privacy violations." Given recent high-profile data breaches, many Australians are understandably concerned about handing sensitive personal information to social media companies.

The Consultation Controversy

Critics have lambasted the legislative process as rushed and inadequate. The bill passed through both houses of Parliament in just nine days, with the public given only one business day to submit feedback. Over 15,000 submissions were received by the Senate Environment and Communications Legislation Committee, which reported findings in less than a week.

The Greens party labeled the process "rushed and reckless," while numerous stakeholders reported being unable to contribute meaningfully. Crucially, young people themselves, the primary subjects of the legislation, had very limited opportunities to provide input. Critics say this runs counter to Article 12 of the Convention on the Rights of the Child, which guarantees children's right to be heard on matters affecting them.

Leo Puglisi, an 18-year-old journalist and founder of youth news service 6 News Australia, told a Senate inquiry that young people "deeply care" about the ban and its implications. He argued that preventing 15-year-olds from accessing news and political information on social media doesn't make sense, particularly when youth engagement with current events increasingly occurs through digital platforms.

Isolation and Access to Support

Mental health professionals and child welfare advocates have raised serious concerns about unintended consequences. Senator David Shoebridge warned that "mental health experts agreed that the ban could dangerously isolate many children who used social media to find support."

Social media serves as a vital lifeline for marginalized young people, including LGBTQ+ youth in regional areas, teenagers dealing with mental health challenges, and those seeking information about sensitive health topics. A director of a mental health service noted that "73% of young people across Australia accessing mental health support did so through social media."

UNICEF Australia, while acknowledging the need to address online safety, believes the proposed changes won't fix the problems young people face online. The organization argues that the focus should be on improving social media safety rather than simply delaying access, similar to debates around children's privacy protections in the United States under COPPA.

The Australian Human Rights Commission has expressed "serious reservations" about the ban, stating that it "goes too far regarding its excessive impact on beneficial uses of some social media by children and the privacy of all Australian internet users."

Will It Even Work?

Many experts question whether the ban will achieve its stated objectives. Critics argue that:

Technological Workarounds Are Inevitable: Young people have already discussed using VPNs to set up accounts in other countries, borrowing family members' accounts, or creating fake profiles. One 14-year-old TikTok creator told the Australian Broadcasting Corporation, "Everyone's going to get around it." The challenges with age verification systems have been well-documented across multiple jurisdictions implementing similar measures.

It Addresses Symptoms, Not Causes: Rather than making platforms safer for everyone, the ban simply restricts access. As one concerned parent noted, young people "don't even need to have any accounts to access harmful content online. Shouldn't we be focusing on removing the harmful content?"

Potential Dark Web Migration: Faith Gordon warns that teenagers may drift toward "far less regulated corners of the internet, including encrypted networks or the dark web, simply to stay connected. These spaces carry significantly higher risks, from exposure to harmful content to exploitation, and they are far harder for parents, educators and regulators to monitor."

Undermines Parental Authority: The legislation prevents parents from giving consent for their children to use social media, removing family decision-making from the equation entirely.

Industry Pushback

Technology companies have uniformly criticized the legislation, though their motivations are obviously complicated by commercial considerations.

Meta has argued for "legislation that empowers parents to approve app downloads and verify age," allowing families rather than government to decide which platforms teens can access. The company suggests implementing age verification at the app store level rather than individual platforms, calling it "a more accurate, and privacy-preserving system." Given concerns about Meta's extensive data collection practices, many privacy advocates remain skeptical of the company's proposed alternatives.

Google warned in July 2025 that it would sue the Australian government if YouTube was included in the ban, though the platform was ultimately added to the list of age-restricted services.

Elon Musk's X sought to delay implementation in September, questioning the law's compatibility with other Australian regulations.

An advocacy group for digital companies called the legislation "a 20th Century response to 21st Century challenges."

Australia's Broader Tech Confrontation

This legislation fits within Australia's broader pattern of aggressive technology regulation. The country has earned a reputation for confronting Silicon Valley giants, previously forcing tech companies to pay for news content used on social media platforms.

The ban represents part of a comprehensive approach to online safety. Australia's eSafety Commissioner has been empowered with significant regulatory authority, including the ability to order removal of harmful content and issue transparency notices requiring platforms to disclose their safety measures.

This world-first legislation is being watched closely by other nations. The European Union is considering similar age restrictions, the United Kingdom has implemented mandatory age checks for websites likely to contain adult content, and Brazil has passed a sweeping child protection law requiring age verification on virtually all internet services. Within Australia, opponents of the ban organized an open letter signed by 140 experts specializing in child welfare and technology, though their concerns center more on privacy implications than on the principle of age restrictions.

What Happens Next?

As December 10, 2025 approaches, several key questions remain unanswered:

Implementation Details: Exactly how platforms will implement age verification without making government ID mandatory remains unclear, despite regulatory guidance from the eSafety Commissioner. The broader Australian Digital ID system being implemented alongside these social media restrictions adds another layer of complexity to the age verification landscape.

Enforcement Mechanisms: How authorities will monitor compliance and what evidence will be required to demonstrate "reasonable steps" is still being determined.

Appeal Processes: Procedures for users incorrectly identified as underage to restore their access are still being developed.

Content Preservation: Whether young people will lose years of creative content, photos, and social connections permanently remains uncertain.

International Coordination: How platforms will handle Australian users versus those in other countries, particularly given the ease of VPN use, is an open question.

The Broader Debate: Safety vs. Rights

At its core, Australia's social media ban crystallizes a fundamental tension in modern society: how to protect children from genuine online harms without restricting their rights to information, expression, and participation in digital life.

Proponents argue that social media platforms were designed by and for adults, then aggressively marketed to children without adequate safety measures. They compare the restriction to age limits on alcohol, cigarettes, and driving, which they see as reasonable protections during developmental stages.

Professor Michael Salter from UNSW Arts, Design and Architecture, one of the world's leading authorities on child sexual exploitation and abuse, supports this view: "Social media was made by adults, for adults, and aggressively marketed to children. A social media 'ban' is no different to the age 'bans' that we apply to alcohol, cigarettes or driving a car."

Critics counter that this analogy fails because social media has become integral to modern communication, education, and civic participation in ways that alcohol and cigarettes never were. They argue for a more nuanced approach focused on platform accountability and improved safety features rather than blanket restrictions.

The Australian Human Rights Institute notes that less restrictive alternatives exist that could achieve child protection goals without significant negative impacts on other human rights, including freedom of expression and access to information.

A Test Case for the World

Australia's experiment with age-based social media restrictions represents uncharted territory. With Meta already removing teenage accounts, approximately 2.4 million young Australians will lose access to major social platforms "just as school holidays start," as Senator Shoebridge notes.

Whether this legislation will effectively protect children's mental health and wellbeing, or whether it will drive teenagers toward less regulated corners of the internet while violating privacy rights, remains to be seen. The implementation will be closely watched by governments, technology companies, child welfare advocates, and families worldwide, particularly as global privacy regulations continue to evolve with increasingly strict enforcement mechanisms.

What's certain is that Australia has decisively rejected the status quo of unregulated youth social media access. Whether this represents a necessary correction or governmental overreach will likely be debated for years to come, as the real-world impacts on young people's lives, mental health, social connections, and digital rights become clear.

For now, Australian teenagers under 16 have just days to download their digital memories before this unprecedented experiment in online age restrictions begins.

Note: This situation is rapidly evolving. Check the Australian eSafety Commissioner's website (esafety.gov.au) for the most current information on affected platforms and implementation details.
