Analysis of Online Age Verification Mandates

Executive Summary

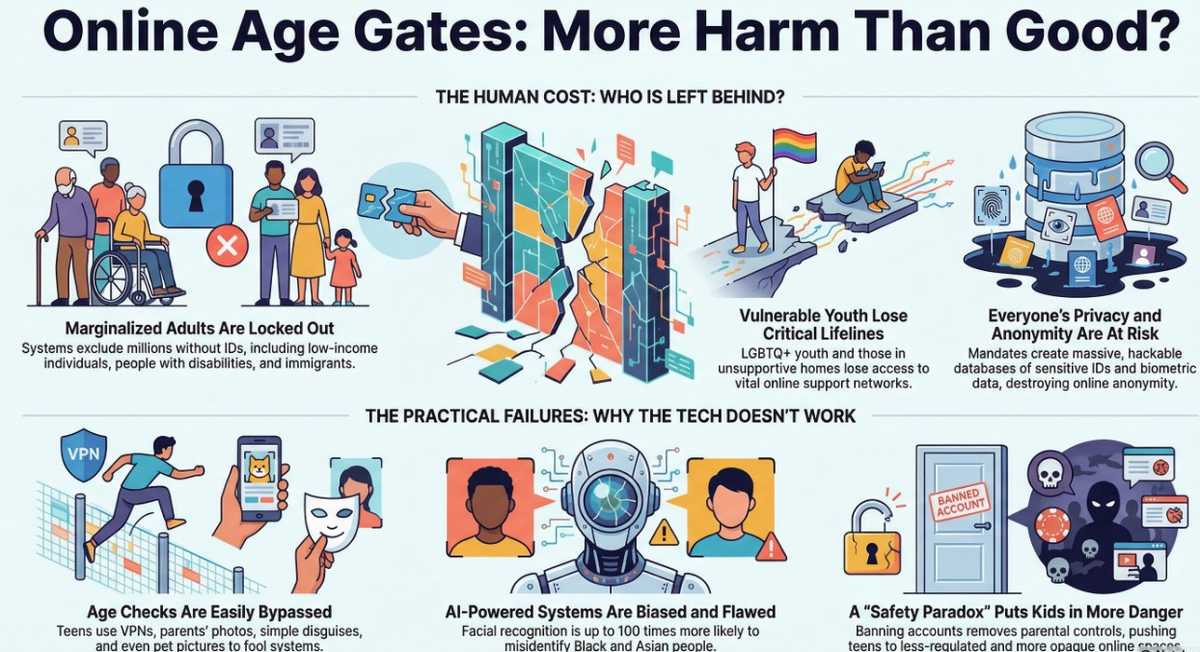

A global legislative trend is emerging to mandate online age verification, ostensibly to protect children from harm. Spearheaded by laws like Australia’s Social Media Minimum Age Act (SMMA), these regulations require online services to verify user ages, often through ID checks or biometric scans. However, a comprehensive analysis of these systems reveals that they are built on technically flawed, biased, and easily circumvented technologies. Rather than protecting users, these mandates create severe risks to privacy and security, systematically discriminate against marginalized communities, undermine free expression, and can paradoxically expose children to greater harm.

The core technologies, document verification and facial age estimation, both fall short. Document verification excludes millions of adults who lack proper identification, while facial age estimation exhibits significant racial and gender bias, with higher error rates for people of color and women. Teens have proven adept at bypassing these measures with simple workarounds, from using parents’ photos to inexpensive disguises. Consequently, these laws impose a heavy burden on society while offering only a false sense of security.

The mandates create vast repositories of sensitive personal data, making them prime targets for hackers and increasing the risk of identity theft. They disproportionately harm vulnerable populations, including LGBTQ+ youth who lose access to vital support networks, abuse survivors who rely on anonymity for safety, and individuals in foster care. By banning minors' accounts, these laws also disable the parental controls that depend on those accounts, driving youth to less-regulated corners of the internet. From an economic standpoint, these regulations disproportionately burden U.S. technology firms and risk creating a fragmented global internet through "regulatory contagion." A more effective approach prioritizes "Safety by Design" principles, robust parental controls, and privacy-preserving, device-level verification standards over flawed and dangerous access prohibitions.

I. The Global Trend Towards Age Verification

Across the globe, governments are increasingly passing legislation that forces online platforms to verify the age of their users. By the end of 2025, half of U.S. states, the UK, and Australia had enacted laws requiring users to upload an ID or scan their face to access "sexual content" or create social media accounts. While promoted as a straightforward measure to protect children, these laws create complex, discriminatory, and dangerous systems for all internet users.

Case Study: Australia's Social Media Minimum Age Act (SMMA)

Effective December 10, 2025, Australia's SMMA serves as a prominent example of this regulatory approach. The Act amends the Online Safety Act 2021 with the following key provisions:

- Minimum Age Requirement: Requires covered online services to take "reasonable steps" to prevent Australians under 16 from holding accounts. This applies to both new and existing accounts.

- Broad and Ambiguous Scope: Defines an "age-restricted social media platform" as any service whose "sole purpose" or "significant purpose" is to enable online social interaction. Eight of the ten services initially considered "in scope" are U.S.-owned, including Facebook, Instagram, Reddit, Snapchat, YouTube, and X.

- Discretionary Ministerial Power: Grants the Australian Minister for Communications the authority to designate or exempt services through legislative rules without requiring transparent criteria or independent review, raising concerns that political visibility, rather than evidence-based risk assessment, will drive enforcement.

- Severe Penalties: Imposes substantial civil penalties for non-compliance, with major violations incurring fines of up to AUD 49.5 million (approximately USD 32.2 million).

II. Technical Flaws and Pervasive Ineffectiveness

The technologies underpinning age verification mandates are fundamentally flawed: they suffer from systemic biases and are remarkably easy for determined users to circumvent.

Systemic Biases in Verification Technology

| Verification Method | Identified Flaws and Biases |
| --- | --- |
| Document-Based | Assumes universal access to government-issued IDs. In the U.S., approximately 15 million adult citizens lack a driver's license, and 2.6 million have no government-issued photo ID. An additional 34.5 million adults do not have an ID with their current name and address. This gap disproportionately affects Black adults (18% without a driver's license), Hispanic Americans, people with disabilities, and lower-income individuals. |
| AI-Based Facial Estimation | Research consistently shows these algorithms are less accurate for people with Black, Asian, Indigenous, and Southeast Asian backgrounds. A 2019 study of facial recognition algorithms by the National Institute of Standards and Technology (NIST) found that Asian and African American people were up to 100 times more likely to be misidentified than White men. The technology also performs worse on transgender individuals and cannot classify non-binary genders. One provider, Yoti, claims its model is most accurate in the 13-18 age bracket but acknowledges an error margin and that accuracy decreases with age. |
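Why an error margin matters can be illustrated with a small Python sketch. It assumes a gate that admits anyone whose estimated age meets a chosen threshold, and it scores that gate against ten hypothetical (true age, estimated age) pairs, each off by at most two years. The names `evaluate_gate`, `Outcome`, and `SAMPLE` and all of the sample values are invented for illustration; they are not measurements from Yoti, NIST, or any other source.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Outcome:
    wrongly_blocked: int   # eligible users (true age >= min_age) refused access
    wrongly_admitted: int  # underage users (true age < min_age) granted access


def evaluate_gate(pairs: Iterable[Tuple[int, int]], min_age: int, threshold: int) -> Outcome:
    """Score a hypothetical gate that admits anyone whose *estimated* age is >= threshold."""
    blocked = admitted = 0
    for true_age, estimated_age in pairs:
        allowed = estimated_age >= threshold
        if true_age >= min_age and not allowed:
            blocked += 1
        elif true_age < min_age and allowed:
            admitted += 1
    return Outcome(blocked, admitted)


# Hypothetical (true_age, estimated_age) pairs; every estimate is off by at most 2 years.
SAMPLE = [
    (14, 16), (15, 13), (15, 17), (16, 14), (17, 19),
    (18, 16), (20, 22), (25, 23), (13, 15), (16, 18),
]

# Legal minimum of 16 (as under the SMMA); try thresholds below, at, and above it.
for threshold in (14, 16, 18):
    result = evaluate_gate(SAMPLE, min_age=16, threshold=threshold)
    print(f"threshold {threshold}: {result}")
# threshold 14: Outcome(wrongly_blocked=0, wrongly_admitted=3)
# threshold 16: Outcome(wrongly_blocked=1, wrongly_admitted=2)
# threshold 18: Outcome(wrongly_blocked=2, wrongly_admitted=0)
```

In this toy sample, raising the threshold above the legal minimum removes the wrongly admitted minors but blocks more eligible users, and lowering it does the reverse; no threshold avoids both errors. This is the same over- and under-inclusiveness that critics raise against these systems.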

Pervasive Circumvention by Youth

The implementation of Australia's SMMA was met with immediate and widespread workarounds by teenage users, demonstrating the technical ineffectiveness of the ban. Methods reported include:

- Using a parent's or older sibling's account or photo for verification scans. A 13-year-old named Isobel bypassed Snapchat's checks in under five minutes using her mother's photo.

- Successfully passing verification using non-human images, including photos of a golden retriever.

- Employing simple disguises, such as inexpensive party masks (e.g., a $22 "old man" mask) or makeup, to fool facial estimation tools.

- Utilizing Virtual Private Networks (VPNs) to obscure their location. Platforms may be required to counter this, and the workaround itself may push teens toward insecure free VPNs that pose malware and data-theft risks.

- Sharing tips and tricks on forums like Reddit for evading checks.

III. Societal Harms and Unintended Consequences

Far from being a harmless inconvenience, age verification mandates introduce significant societal risks that impact privacy, equity, and free expression.

Creation of New Privacy and Security Vulnerabilities

Mandating age verification necessitates the creation of massive repositories of sensitive user data, which experts warn will become prime targets for hackers. This is not a theoretical risk; verification companies like AU10TIX and platforms like Discord have already suffered high-profile data breaches, exposing users' most sensitive information. Such repositories heighten the danger of identity theft, blackmail, and other privacy violations. Cyber-safety experts also warn of a rise in "prove-your-age" scams designed to trick users into handing over personal data.

Exclusion and Discrimination of Marginalized Communities

The reliance on flawed technologies structurally excludes and endangers marginalized communities.

- People Without IDs: Individuals lacking valid documentation due to poverty, disability, or immigration status are locked out of essential online services.

- Communities of Color: Biased algorithms disproportionately misclassify and block users from Black, Asian, and Indigenous backgrounds.

- Transgender and Non-Binary People: For the 43% of transgender Americans whose IDs do not reflect their name or gender, mandates create an impossible choice: out themselves by providing documents with deadnames and incorrect gender markers, or lose online access.

- People with Disabilities: Facial recognition systems often fail to recognize faces with physical differences, and "liveness detection" can exclude people with limited mobility.

Erosion of Anonymity and Free Speech

At their core, age verification systems are surveillance systems that risk eliminating online anonymity, a protection that is critical for:

- Domestic abuse survivors hiding from abusers.

- Journalists, activists, and whistleblowers protecting sources and organizing without fear of retaliation.

- Citizens under authoritarian regimes accessing banned information.

In the U.S., these laws are challenged on First Amendment grounds because they are both over-inclusive (blocking adults from lawful speech) and under-inclusive (failing to stop minors), thereby restricting more speech than is necessary.

IV. The "Safety Paradox": How Mandates Can Harm Children

Despite the stated intention of protecting youth, age verification mandates often create perverse outcomes that make children less safe.

Loss of Vital Lifelines and Information

For many young people, the internet is a critical resource. Age-gating laws threaten to cut them off from:

- Support Networks: For LGBTQ+ youth, particularly those with unsupportive families, online communities are often a lifeline providing identity-affirming support and mental health resources. Requiring parental consent severs these connections.

- Essential Information: In U.S. states with "abstinence-only" education, the internet is a key source for information about health, sexuality, and gender.

- Educational Resources: Homeschoolers, who rely heavily on the internet for their curriculum, research, and social connection, are severely impacted.

- Vulnerable Youth: The laws fail to account for youth in foster care or group homes who cannot obtain parental or guardian consent, effectively locking out some of the most vulnerable minors.

Disabling Parental Controls and Encouraging Risky Behavior

A critical flaw in account-ban models like the SMMA is that they undermine existing safety tools. Parental controls for filtering content or setting time limits require a linked account to function. By forcing teens to browse anonymously or use workarounds to evade age gates, the laws strip away these safeguards.

This creates a "safety paradox," a concern articulated by Dr. Catherine Page Jeffery of the University of Sydney, who noted that if young people migrate to less regulated platforms and become more secretive, they are less likely to talk to parents about concerning material they encounter. Early reports from Australia indicate teens are already moving to services like Yope and Lemon8, which sit outside the initial regulatory spotlight.

V. Economic and Regulatory Implications

The push for age verification carries significant consequences for the global digital economy and may set a dangerous precedent for internet governance.

Disproportionate Impact on U.S. Companies

The SMMA's framework, which targets the "most popular" services, is viewed by critics as a de facto barrier to market access that disproportionately burdens U.S.-based companies. This approach penalizes services that have invested heavily in safety features while leaving less-regulated competitors untouched. Industry bodies in Australia have warned that this forces major services to act as "internet police," limiting the market to companies large enough to absorb significant compliance liability.

Risk of "Regulatory Contagion"

Australia is actively promoting its model in multilateral forums like the UN. This has led to "regulatory contagion," with countries including New Zealand, Indonesia, Malaysia, Papua New Guinea, and several EU member states considering similar frameworks. This trend threatens to create a fragmented global internet with a patchwork of contradictory and burdensome regulations that stifle innovation, limit free expression, and serve as a pretext for targeting foreign businesses.

VI. Alternative Frameworks and Policy Recommendations

Critics and industry stakeholders have proposed several alternative approaches that prioritize safety without incurring the severe harms of blanket age-verification mandates.

- Prioritize "Safety by Design": This principle, promoted by Australia's own eSafety Commissioner, advocates for shifting focus from prohibitive access bans to enhancing platform safety through better default protections, robust and voluntary parental controls, and more effective content moderation.

- Advocate for Device-Level and Privacy-Preserving Standards: Rather than requiring every website to verify age, a more secure and efficient method is verification at the device, operating system, or app store level. This model, supported by companies like Reddit and Snapchat, prevents users from having to repeatedly submit sensitive data to countless third parties, thus minimizing data silos and security risks.

- Condition Mandates on Mature Privacy Protections: No age-verification mandate should be imposed until decentralized, privacy-preserving standards are fully established. Regulations must prohibit any requirement that forces private entities to create centralized identity databases.

- Focus on Education and Digital Literacy: Instead of a one-size-fits-all regulatory approach, policymakers should empower parents and young users with the education and tools needed to navigate the digital world safely. This recognizes that balancing technology’s risks and benefits is unique to each family.
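The device-level model described above can be sketched in Python under heavily simplified assumptions: a hypothetical operating-system component (`DeviceAgeService`) records a verified birth date once, and apps may only ask whether the user meets a minimum age, receiving a yes/no answer. All names here (`DeviceAgeService`, `enroll`, `meets_minimum_age`, the app IDs) are illustrative; the sketch does not reproduce any real operating-system or app-store API, and it omits the cryptographic signing a real privacy-preserving standard would need for apps to trust the answer. Treating unverified devices as not meeting the threshold is likewise an assumed design choice.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Tuple


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


@dataclass
class DeviceAgeService:
    """Hypothetical OS-level component: verifies age once, answers yes/no thereafter."""

    _birth_date: Optional[date] = None
    # Local log of (app_id, min_age, answer): exactly what each app was told.
    disclosures: List[Tuple[str, int, bool]] = field(default_factory=list)

    def enroll(self, birth_date: date) -> None:
        # The one-time check (ID scan, parental attestation, etc.) would happen here.
        # Only the resulting birth date is kept, and it is never returned to apps.
        self._birth_date = birth_date

    def meets_minimum_age(self, app_id: str, min_age: int, today: date) -> bool:
        """Return only a boolean to the requesting app, never the birth date or ID."""
        if self._birth_date is None:
            answer = False  # assumed restrictive default for unverified devices
        else:
            answer = age_on(self._birth_date, today) >= min_age
        self.disclosures.append((app_id, min_age, answer))
        return answer


svc = DeviceAgeService()
svc.enroll(date(2010, 6, 1))
check_day = date(2025, 12, 10)
print(svc.meets_minimum_age("social-app", 16, check_day))  # False (user is 15)
print(svc.meets_minimum_age("forum-app", 13, check_day))   # True
```

In this sketch, each app learns only whether the user clears its own threshold, so a breach at any single service would expose a boolean rather than an ID document or a face scan, which is the data-minimization benefit the device-level approach aims for.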