10 Areas for U.S.-Based Privacy Programs to Focus on in 2025

This past year was another jam-packed one for privacy teams. With an onslaught of new and updated state laws, regulatory guidance, and enforcement actions, it has been difficult to stay on top of every development. Even so, distilling these legal, regulatory, and litigation trends into concrete focus areas and actionable steps is crucial for building and maintaining an effective privacy program in 2025.

Below are ten key areas U.S.-based organizations should keep on their radar in the year ahead, along with practical measures to strengthen compliance efforts and reduce regulatory risk.

1. Tracking and Targeted Advertising

Why it matters:

Regulators continue to focus on whether companies accurately describe their tracking and consumer choice practices, including disclosures for advertising and analytics. Eight additional states will soon join California and Colorado in requiring browser signals to be honored as an opt-out of “sales” and targeted advertising. Meanwhile, legal demands and lawsuits persist under wiretap laws (e.g., the California Invasion of Privacy Act) and data privacy laws (such as the California Consumer Privacy Act).

Key considerations and steps:

- Map the data: Validate your understanding of all the ways data is shared for targeted advertising — including via pixels, tracking technologies, list uploads, and server-to-server integrations.

- Confirm data classification: Test any assumptions that data is “anonymous” or “deidentified” when used with advertising and analytics tools.

- Align notices and consents: Ensure privacy notices, cookie banners, and preference management pages accurately match your tracking practices.

- Obtain necessary consents: If sensitive data is shared or if you have concerns about wiretap laws, secure opt-in consent or implement opt-out processes as required by law.

- Assess and govern tracking tech: Formalize a tracking technology assessment process to identify, classify, and limit data passed to third parties, and properly implement opt-out mechanisms for “sales” and targeted ads.
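
As one illustration of honoring a browser opt-out signal, the sketch below checks for the Global Privacy Control request header (`Sec-GPC: 1`) and treats it as an opt-out of “sales” and targeted advertising. The tag inventory, the purpose labels, and the function names `gpc_opt_out` and `allowed_tags` are hypothetical and not taken from any particular consent platform. Whether an analytics tag may keep firing after an opt-out depends on how the vendor uses the data, so that classification is an assumption too.

```python
from typing import Mapping

# Hypothetical tag inventory: each tracking tag is classified by purpose.
TAG_PURPOSES = {
    "site_analytics": "analytics",
    "ad_pixel": "targeted_advertising",
    "audience_sync": "sale",
}

# Purposes that an opt-out of "sales" and targeted advertising switches off.
OPT_OUT_PURPOSES = {"targeted_advertising", "sale"}


def gpc_opt_out(headers: Mapping[str, str]) -> bool:
    """Return True when the request carries the GPC header with value "1"."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


def allowed_tags(headers: Mapping[str, str], stored_opt_out: bool = False) -> list[str]:
    """List the tags that may fire for this request."""
    # Either a previously stored opt-out or a live GPC signal disables ad tags.
    opted_out = stored_opt_out or gpc_opt_out(headers)
    return sorted(
        tag
        for tag, purpose in TAG_PURPOSES.items()
        if not (opted_out and purpose in OPT_OUT_PURPOSES)
    )
```

This sketch covers only browser-side tags. List uploads and server-to-server integrations would need the same opt-out state applied wherever those transfers are triggered.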

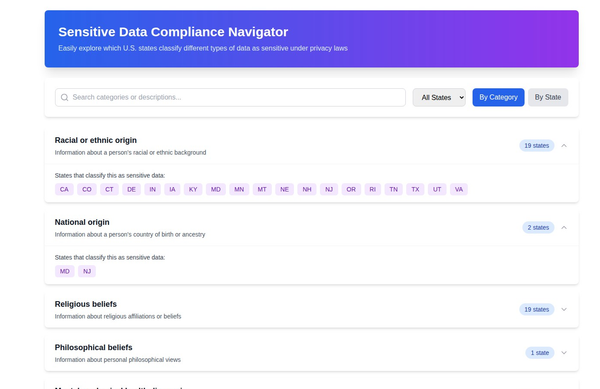

2. Sensitive Data Collection, Consent, and Use

Why it matters:

Several state laws have broadened definitions of “sensitive personal data” and now require explicit consent for processing. Enforcement actions have highlighted the importance of obtaining opt-in consent for sharing location and other “inferentially sensitive” data. In 2025, new laws in Minnesota (Consumer Data Privacy Act) and Maryland (Online Data Privacy Act) will impose even stricter rules:

- Minnesota: Will require small businesses to obtain opt-in consent before sensitive data can be sold.

- Maryland: Will prohibit sensitive data processing unless it is strictly necessary for a consumer-requested product or service, and selling sensitive data will be prohibited.

Next steps:

- Review definitions: Ensure your privacy program (policies, procedures, assessments) reflects expanded definitions of sensitive data.

- Obtain opt-in consents: Confirm your consent mechanisms meet or exceed legal requirements.

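- Validate necessity: In Maryland, verify that sensitive data processing is “strictly necessary” for a consumer-requested product or service and cease any prohibited sales of sensitive data; in Minnesota, confirm that small businesses obtain opt-in consent before selling sensitive data.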

- Post required notices: Pay attention to specific notice requirements, such as those in Texas, and align with each state’s unique obligations.

3. Data Protection Assessments

Why it matters:

Ten states now require data protection assessments (DPAs) before certain personal data processing activities begin. The California Privacy Protection Agency (CPPA) has proposed robust additional requirements that may be finalized this year, potentially extending assessment obligations to cover extensive profiling, certain AI training, and other automated decision-making. Minnesota’s new law also includes unique requirements around alignment with internal policies on privacy topics.

What to do:

- Trigger points: Confirm your organization’s processes will kick off data protection assessments whenever a project involves processing in scope of these laws.

- Address new requirements: Validate assessment content to cover new and proposed elements in Minnesota and California.

- Regulator-ready documentation: Be prepared to share these assessments if requested, and decide in advance how you will preserve attorney-client privilege and work-product protections when you do.
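
One way to make the trigger point concrete is a simple intake check that flags projects for assessment. The trigger labels below are an assumption assembled for illustration (targeted advertising, sale, sensitive data, and profiling, plus the proposed California additions mentioned above); they are not an authoritative statement of any statute, and each organization would substitute the triggers that actually apply to it.

```python
from dataclasses import dataclass, field

# Hypothetical trigger labels; replace with the triggers in scope for you.
ASSESSMENT_TRIGGERS = {
    "targeted_advertising",
    "sale_of_personal_data",
    "sensitive_data",
    "profiling",
    "ai_training",
    "automated_decision_making",
}


@dataclass
class ProjectIntake:
    name: str
    activities: set[str] = field(default_factory=set)


def assessment_triggers(project: ProjectIntake) -> list[str]:
    """Return the project's activities that should start an assessment."""
    return sorted(project.activities & ASSESSMENT_TRIGGERS)


def needs_assessment(project: ProjectIntake) -> bool:
    """True when at least one declared activity matches a trigger."""
    return bool(assessment_triggers(project))
```

Returning the matched triggers, rather than only a yes or no, gives the assessment author a starting list of what the document has to cover.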

4. AI and Automated Decision-Making

Why it matters:

If your company uses AI or automated decision-making technologies, 2025 will bring stricter governance, assessment, and consumer rights obligations. Colorado’s AI Act takes effect in early 2026, and California may finalize regulations for automated decision-making technology (ADMT).

- Colorado AI Act: Requires an AI risk management program, annual assessments for certain use cases, targeted disclosures to consumers, new individual rights, website information about AI uses and risk management, and discrimination incident reporting.

- California’s Proposed Regulations: Would impose new obligations for entities using ADMT in profiling, public monitoring, or AI training contexts, including additional consumer notices and new opt-out and access rights.

Action items:

- Identify in-scope use cases: Map where AI and ADMT are used for high-impact decisions or profiling.

- Enhance AI governance: Formalize existing risk management practices and ensure they produce details needed for assessments and disclosures.

- Plan for new rights: Update processes for handling consumer opt-outs, access requests, and transparency requirements related to AI.

- Incident response: Ensure your incident response plan includes AI-related “discrimination” or “bias” notifications where required by law.

5. Biometric Data Processing

Why it matters:

States are actively passing new biometric privacy laws, and regulators continue to bring enforcement actions under existing laws, such as Texas’ biometric law and Illinois’ Biometric Information Privacy Act (BIPA). Colorado’s new biometric privacy law goes into effect July 2025 and imposes both familiar and novel requirements, including:

- Specific content requirements for pre-processing notices.

- Annual reviews to confirm continued necessity of biometric retention.

- Tighter timelines for deletion.

- Incident response and breach notification protocols specific to biometric data.

- Prohibitions on conditioning services on biometric consent (unless strictly necessary).

- Extra employment-related requirements for biometric use.

How to prepare:

- Locate biometric data: Identify all places in your organization where consumer or employee biometric data is collected, used, or shared.

- Stop prohibited disclosures: Identify any disclosures of biometric data that Colorado’s law disallows and cease them by the July 2025 effective date.

- Notice and consent: Put robust notices and consent mechanisms in place, including in employment contexts.

- Retention policies: Define how long you keep biometric data and ensure timely deletion once it is no longer necessary.

- Individual rights: Prepare to fulfill requests for specific details such as how biometric data was obtained, why it’s processed, and to whom it was disclosed.
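
The retention and annual-review steps can be sketched as a scheduled check over an inventory of biometric records. Everything here is hypothetical: the record fields, the 365-day interval used to model an annual review, and the idea that necessity is tracked as a simple flag. Actual deletion deadlines come from the applicable law and your retention policy, not from this sketch.

```python
from dataclasses import dataclass
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=365)  # assumption: models an annual review


@dataclass
class BiometricRecord:
    record_id: str
    last_reviewed_on: date
    still_necessary: bool  # outcome of the most recent necessity review


def records_to_delete(records: list[BiometricRecord]) -> list[str]:
    """IDs of records whose last review found retention no longer necessary."""
    return [r.record_id for r in records if not r.still_necessary]


def records_due_for_review(records: list[BiometricRecord], today: date) -> list[str]:
    """IDs of retained records whose last review is at least 365 days old."""
    return [
        r.record_id
        for r in records
        if r.still_necessary and today - r.last_reviewed_on >= REVIEW_INTERVAL
    ]
```

Passing `today` as an argument, instead of calling `date.today()` inside the function, keeps the check easy to test and to rerun for a past date.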

6. Minor Data Collection and Use

Why it matters:

The rising tide of child and teen privacy laws — from amendments to California’s Age-Appropriate Design Code and Connecticut’s privacy law to new laws in Maryland and New York — places more scrutiny on data concerning minors under age 18. Organizations may be in scope even if they do not intentionally or knowingly process minors’ data.

- New York Child Data Protection Act (June 2025): Restricts personal data processing of minors absent “informed consent” and requires signaling mechanisms for withdrawing consent.

- Maryland Online Data Privacy Act (October 2025): Prohibits sales of minors’ personal data or use for targeted advertising.

- Colorado Privacy Act Amendments (October 2025): Will require consent for targeting, sales, profiling, or collecting location data from minors. Also demands new assessments for minors’ data.

Practical steps:

- Scope assessment: Don’t assume you’re out of scope. Understand the ages of your customers and whether minors are “reasonably expected” to use your services.

- Adapt default settings: Ensure privacy-by-default practices align with new child data requirements.

- Strengthen consent workflows: Develop or refine methods for obtaining parental or minor consent, as required.

- Update data governance: Validate processes for data minimization, access requests, and potential data deletion upon learning a user is a minor.

7. Data Products, Services, and Collection Methods

Why it matters:

Multiple new and existing laws and regulations target “data brokers,” or organizations that obtain personal data indirectly and then disclose or sell it to third parties. Companies may qualify as a data broker even if they have direct relationships with some data subjects — particularly if they also sell or share data gathered from other sources.

- California Data Broker Regulations: Under newly adopted rules, an entity can be deemed a data broker if it “sells” data collected indirectly for targeted advertising.

- California Delete Act (2026): Will require data brokers to register, honor a state-run deletion system, and comply with additional data management obligations.

- New Federal Privacy Law (“Protecting Americans’ Data From Foreign Adversaries Act”): Expands oversight on data-sharing arrangements, requires robust due diligence, and restricts sharing data with certain entities.

Compliance actions:

- Identify personal data flows: Determine if you collect personal data from sources other than the individuals themselves.

- Map data sharing: Evaluate if and how data is sold, shared, or disclosed for targeted advertising or other business offerings.

- Check exemptions: Determine whether exclusions apply based on data type or context.

- Register and comply: If in scope, complete required registrations and be prepared for data broker obligations under state laws, including the upcoming California Delete Act.

- Screen third parties: Ensure you have a process to vet business customers or partners. End data transfers that might violate federal or state laws.
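
A data inventory can make the first few actions above more mechanical. The sketch below flags flows where personal data was obtained from a source other than the individual and is then sold or shared with a third party. The field names and the simple "indirect and sold" test are simplifying assumptions; whether an entity is actually a data broker is a legal determination that turns on each law's definitions and exemptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataFlow:
    dataset: str
    collected_directly: bool          # True if obtained from the individual
    sold_or_shared: bool              # True if sold or disclosed to a third party
    exemption: Optional[str] = None   # a documented exclusion, if one applies


def flows_needing_broker_review(flows: list[DataFlow]) -> list[str]:
    """Datasets that are indirectly sourced, sold or shared, and not exempt."""
    return [
        f.dataset
        for f in flows
        if not f.collected_directly and f.sold_or_shared and f.exemption is None
    ]
```

The output is a review queue, not a conclusion: each flagged dataset still needs a person to confirm the source, the recipient, and any exemption.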

8. Consumer-Facing UI and Flows

Why it matters:

Prohibitions on “dark patterns” (manipulative or deceptive interface designs) have multiplied in state privacy laws and garnered regulatory attention. In 2024 and 2025, regulators have opened several investigations and issued guidance on user interfaces that hamper or mislead users making privacy choices.

Suggested approach:

- Conduct a proactive review: Examine your primary customer journeys, including cookie consent flows, preference centers, and registration forms.

- Revisit historical approvals: Check any long-standing UI design practices that might inadvertently frustrate opt-outs or consents, and update as necessary.

- Train teams: Ensure product, UX, and legal teams understand the new regulatory expectations.

- Incorporate UI into privacy assessments: Add design review checkpoints to your data protection assessment process.

9. Documented Privacy Program Policies and Procedures

Why it matters:

A robust set of written policies and procedures is the hallmark of a mature privacy program — and a frequent ask during regulatory investigations. Under Minnesota’s new law, data controllers must have formal privacy program documentation spanning multiple topics, such as:

- Identification of the privacy officer or responsible individual.

- Fulfillment of data subject rights requests.

- Security, data inventory maintenance, and data minimization.

- Retention policies and processes to prevent data from being kept longer than necessary.

- Processes to identify and remediate compliance violations.

Recommended actions:

- Gap assessment: Review your existing privacy policies, procedures, and standard operating documents to confirm they address these topics.

- Prepare for regulator review: Ensure your policies and procedures are consistent with your actual practices.

- Update training and roles: Make sure all relevant teams know what’s in these policies so they can operationalize them.
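
The gap assessment reduces to comparing the topics your documents cover against the topics listed above. In this sketch the topic labels paraphrase that list, and the document-to-topic mapping is a hypothetical input that someone on the privacy team would maintain by hand.

```python
# Topic labels paraphrase the documentation topics listed above.
REQUIRED_TOPICS = {
    "privacy_officer",
    "rights_requests",
    "security",
    "data_inventory",
    "data_minimization",
    "retention",
    "violation_remediation",
}


def policy_gaps(documents: dict[str, set[str]]) -> list[str]:
    """Return required topics that no existing document addresses."""
    # Union of every topic covered by at least one document.
    covered = set().union(*documents.values())
    return sorted(REQUIRED_TOPICS - covered)
```

A topic appearing in a document does not show that the document matches actual practice, so this check complements, rather than replaces, the consistency review in the next step.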

10. Calibrate Data Collection Practices

Why it matters:

In October 2025, Maryland’s law will prohibit the collection of personal data unless it is “reasonably necessary and proportionate” to provide a product or service a consumer specifically requests. This is a stricter standard than the more general notion of collecting data for “legitimate business purposes.”

What to do:

- Review data intake procedures: Update internal privacy policies and forms that define why and when you collect personal data.

- Embed necessity checks: Add questions to your privacy assessment process, such as “Is this data truly needed for the user-requested product or service?”

- Conduct data audits: Remove or anonymize any data not tied to a clearly defined, consumer-requested purpose.

- Train stakeholders: Ensure business, marketing, and product teams understand the new requirement and adjust their data-gathering practices accordingly.
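
The necessity check and data audit can be approximated by mapping each collected field to the purposes it supports and flagging fields that support none of the purposes the consumer requested. The field names and purposes are invented for illustration, and the mapping itself encodes a judgment that a privacy reviewer, not code, has to make.

```python
def unnecessary_fields(
    collected: set[str],
    field_purposes: dict[str, set[str]],
    requested_purposes: set[str],
) -> list[str]:
    """Fields collected that support none of the consumer-requested purposes."""
    return sorted(
        name
        for name in collected
        # A field with no documented purpose is treated as unnecessary.
        if not (field_purposes.get(name, set()) & requested_purposes)
    )
```

Fields flagged this way are candidates for removal or anonymization in the data audit; unmapped fields are flagged deliberately so that undocumented collection surfaces rather than passing silently.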

Conclusion

As privacy regulations proliferate and enforcement becomes more exacting, U.S. privacy programs must be nimble and thorough. From expanding definitions of sensitive data and biometric identifiers to new obligations around AI, automated decision-making, and child data protections, 2025 is shaping up to be a high-stakes year for compliance.

By focusing on these ten areas, privacy teams can align their policies and procedures, enhance consumer trust, and keep pace with evolving regulatory expectations. The key is to be proactive: audit and refine data practices, implement user-friendly consent and opt-out mechanisms, invest in meaningful privacy assessments, and document every step with clear, accessible policies. Taking these actions now will help ensure your privacy program remains robust — and ready — for what the future holds.